Generative AI 101: The basics and how public cloud fits in

Yes, we know there’s lots of hype out there, and that you could fill your entire working week with articles and webinars on all things generative AI and ChatGPT.

But as we talk to more and more customers about going beyond dabbling and individuals playing around, there are clear trends in the questions they’re asking. So we:

- Sat down with Allan Chong, Risto Jäntti, Max Mikkelsen and Perttu Prusi from our Advanced Data Solutions team – who are all working on active gen AI projects

- Extracted insight from their (very large) brains to create a series of handy FAQ articles

This is the first in the series, which looks at gen AI adoption trends, the types of POC organisations are trying, tech strengths and weaknesses and the role of public cloud in deployments.

Let’s get started.

Adoption trends

There’s so much hype around generative AI. How is it actually different to the AI and machine learning tech that was already available?

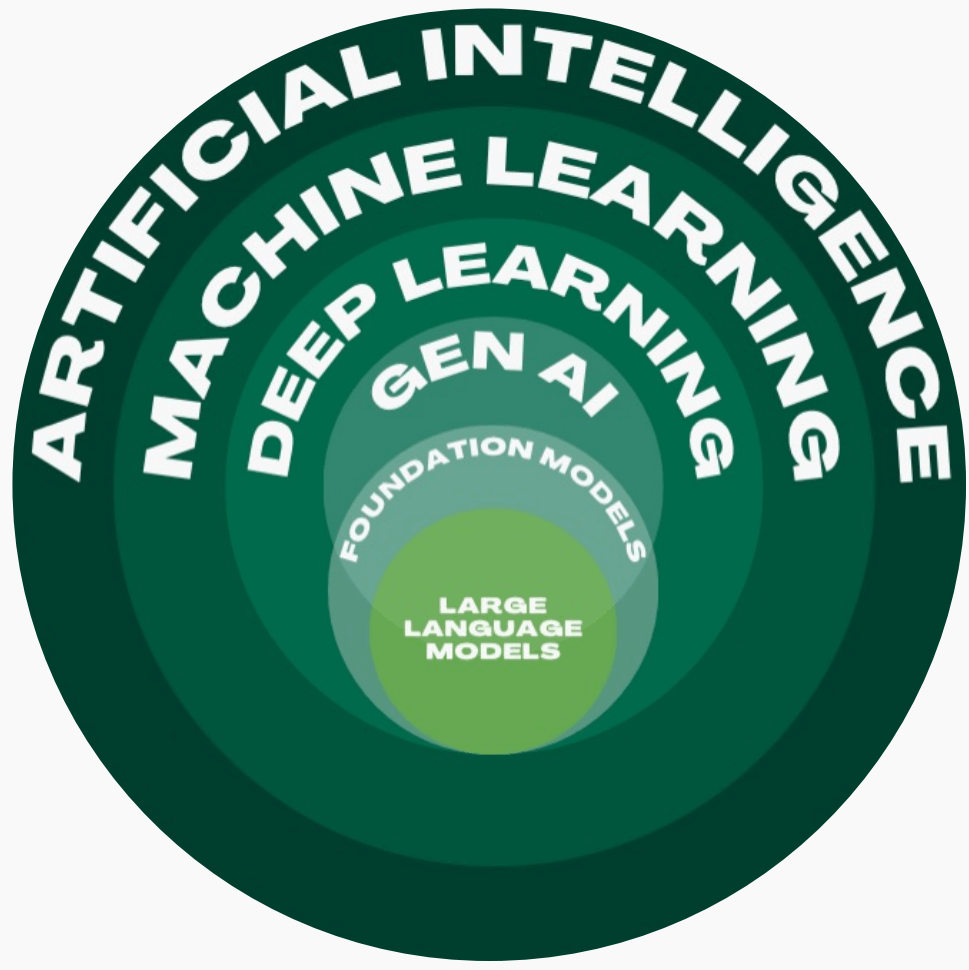

At a basic level, AI is the ability to mimic human intelligence, e.g., to understand, reason and learn. Here’s an overview of the difference between traditional AI and generative AI.

- Traditional AI involves programmes that can analyse content to make predictions and prescribe actions. It comprises analytics and machine learning. Example capabilities include forecasting revenue based on historical sales, recommending the next best offer to present to a customer and visually identifying a product defect.

- Generative AI involves programmes that can generate net new content and better understand existing content. It comprises foundation models and large language models. Examples include creating an image from a prompt, summarising an article and answering questions from PDFs.

In other words, generative AI is an advance in AI and ML technology that has come from an evolution of deep learning techniques, as well as increases in computing power and access to massive amounts of data. These advances mean it’s now possible to generate higher-quality content, more advanced AI models and human-like text in a way that wasn't possible with earlier AI and ML technologies.

In the past year or so, the combination of natural language capabilities, commoditised foundational models and tools from public cloud providers means generative AI has become more accessible (hence the hype). Basically, the focus has shifted from it being a data science-focused thing – which had a narrower audience – to more mass market.

How are foundation and large language models different from traditional AI models?

Traditional AI models are trained using a specific set of data to perform specific tasks.

Foundation models are trained on vast data sets to provide the ‘knowledge base’ for generative AI.

Large language models (LLMs) are foundation models built specifically for text generation. Essentially, they ‘predict’ the next word based on what they have observed so far. They use so-called transformers – deep learning architectures – to read text, spot patterns and make predictions. Then, they generate text in a human-like, conversational manner. You can control how varied the response is by setting what’s called the temperature – a high temperature gives you more varied, creative outputs; a low temperature gives you more predictable, focused outputs.
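To make the temperature idea concrete, here’s a minimal sketch of how temperature reshapes the odds of each candidate next word. The candidate words and their scores are made up for illustration, and the function names are our own; a real LLM scores tens of thousands of candidates, but the arithmetic is the same in spirit.

```python
import math
import random


def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities; temperature sharpens or flattens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in scores]
    peak = max(scaled)  # subtract the largest value for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


def sample_next_word(candidates, scores, temperature, rng):
    """Pick one candidate at random, weighted by its temperature-adjusted probability."""
    probabilities = softmax_with_temperature(scores, temperature)
    return rng.choices(candidates, weights=probabilities, k=1)[0]


# Hypothetical candidates and scores for the word after "once upon a".
candidates = ["time", "dream", "mountain"]
scores = [4.0, 2.0, 1.0]

low = softmax_with_temperature(scores, 0.2)   # almost all weight on "time"
high = softmax_with_temperature(scores, 2.0)  # weight spread across all three

rng = random.Random(0)  # fixed seed so repeated runs pick the same word
word = sample_next_word(candidates, scores, 2.0, rng)
```

At a low temperature the top-scoring word wins almost every time; at a high temperature the less likely words get picked more often, which is where the extra variety comes from.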

There are many LLMs out there – you can access them through all the major public cloud providers via APIs.

What trends are you seeing in the generative AI use cases organisations are pursuing?

There are 5 main categories of use case we’re seeing:

- Summarisation

  - What it is – Transforming text with domain-specific content into personalised overviews that capture key points

  - Examples – Conversation summaries, meeting transcripts, contract information

- Inferring and classifying

  - What it is – Analysing and extracting essential information from unstructured text

  - Examples – Sorting customer complaints, threat and vulnerability classification, sentiment analysis, customer segmentation

- Extraction and transformation

  - What it is – Extracting and transforming text into another form for consumption

  - Examples – Universal translator, code translation, audit acceleration, SEC 10K fact extraction, user research findings

- Q&A

  - What it is – Creating a Q&A feature where people can query specific content

  - Examples – Semantic search on internal docs for the company intranet, customer service agent chatbot

- Content generation

  - What it is – Generating various text content types for a specific purpose

  - Examples – Creating job descriptions, generating code, planning meetings, writing social media posts and articles

All of these use cases are aimed at providing decision support, boosting efficiency and extracting the hidden potential of an organisation’s own data – whether it’s customer feedback, product data, contracts or employee resources.
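As a rough illustration of the Q&A category, here’s a toy sketch of the ‘find the relevant content first, then ask the model’ pattern. It matches on shared words only, which is a deliberate simplification (production systems typically use embeddings for semantic search), and the documents, function names and prompt wording are all hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in set(a) & set(b))
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def most_relevant(question, documents):
    """Return the name of the document whose wording best matches the question."""
    question_counts = Counter(tokenize(question))
    return max(
        documents,
        key=lambda name: cosine_similarity(
            question_counts, Counter(tokenize(documents[name]))
        ),
    )


def build_prompt(question, passage):
    """Place the retrieved passage in the prompt so the model answers from it."""
    return (
        "Answer using only the context below.\n"
        f"Context: {passage}\n"
        f"Question: {question}"
    )


# Hypothetical intranet content.
documents = {
    "leave_policy": "Employees are entitled to 25 days of annual leave per year.",
    "expenses": "Submit travel expenses within 30 days using the finance portal.",
}

question = "How many days of annual leave do I get?"
best = most_relevant(question, documents)
prompt = build_prompt(question, documents[best])
```

The resulting prompt would then be sent to whichever LLM you’re using. Grounding the question in your own content is what lets the model answer from your data rather than from its general training.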

In terms of planning a roadmap, how would you classify the different stages of generative AI adoption?

There are 7 basic phases. Each builds on the previous one to address the various challenges and opportunities that arise as you scale generative AI adoption.

Phase 1: Awareness and education

- Learn about generative AI technologies, their capabilities and potential use cases

- Understand the underlying concepts, such as generative adversarial networks (GANs), variational autoencoders (VAEs) and transformers

- Keep up to date with the latest research and advancements

Phase 2: Evaluation and exploration

- Identify specific problems or opportunities where generative AI can add value in the organisation

- Evaluate generative AI tools, platforms and models

- Experiment with generative AI technologies through proof-of-concept (POC) projects and pilot programmes

Phase 3: Strategy and planning

- Outline goals, timelines and resource requirements for generative AI adoption

- Identify and evaluate the infrastructure (like hardware and software) you need

- Establish guidelines and processes for ethical considerations like data privacy, fairness and potential misuse

Phase 4: Implementation and integration

- Train (or recruit) the talent needed to develop and deploy generative AI solutions

- Build or adapt generative AI models based on the goals and requirements outlined in your strategy

- Integrate generative AI technologies into your existing workflows, systems and applications

Phase 5: Monitoring and optimisation

- Monitor the performance of generative AI solutions, ensuring they meet the desired objectives

- Continuously update and fine-tune the models based on new data or changing requirements

- Optimise the generative AI solutions to improve efficiency, scalability and effectiveness

Phase 6: Scaling and expansion

- Identify additional opportunities to leverage generative AI

- Scale up successful POCs to broader applications or larger datasets

- Share best practices and lessons learned to drive further adoption and innovation within the organisation

Phase 7: Continuous improvement and innovation

- Stay informed about the latest trends and advancements in generative AI

- Encourage a culture of innovation and continuous improvement within the organisation

- Collaborate with industry partners, academic institutions and the broader AI community to drive the development of new generative AI applications and solutions


Strengths and weaknesses

What is your realistic assessment of generative AI’s strengths and weaknesses?

The greatest strength is its ability to reduce time-to-market of natural language-related applications. And by that I mean applications that classify, summarise or extract information from existing text data or generate human-like answers to questions.

But ironically, this strength is also the caveat. When used unsupervised, LLMs can produce hallucinations, which are responses that ‘sound’ right but are actually false. This is because they work by predicting what should come next in a sentence based on what’s come before and the patterns they learned in training. They’re not looking up facts.

For example, if you start with the phrase ‘once upon a time’ – there are countless ways to finish the sentence. The LLM simply chooses one based on the data it was trained on and the prompt you give it. Hallucinations can cause confusion and misunderstandings – and can also be damaging. You may have heard about the US court case in which a lawyer used ChatGPT to prepare a legal brief and ended up citing fake cases the tool had hallucinated.

Therefore, all outputs need human validation. So while playing around with generative AI can get people interested and show its potential, it doesn’t reveal (or address) the ethical, moral and quality-related complexities.

Also, LLMs aren’t well suited to quantitative analysis because they predict text rather than calculate – they can’t reliably do maths. So you can’t rely on them to answer ‘What offers the best return on investment?’ or ‘What’s the total cost of X, Y and Z?’ You need traditional AI models or conventional calculations for quantitative analysis.
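In practice, that usually means keeping the numbers in ordinary code and leaving the wording to the LLM. Here’s a minimal sketch of the two example questions handled with plain arithmetic; the figures, option names and function names are invented for illustration.

```python
def total_cost(costs):
    """Add up the cost of every item."""
    return sum(costs.values())


def return_on_investment(gain, cost):
    """ROI as a fraction: (gain - cost) / cost."""
    if cost == 0:
        raise ValueError("cost must be non-zero")
    return (gain - cost) / cost


def best_roi(options):
    """Return the name of the option with the highest ROI.

    `options` maps a name to a (gain, cost) pair.
    """
    return max(options, key=lambda name: return_on_investment(*options[name]))


# Hypothetical figures.
costs = {"X": 1200.0, "Y": 450.0, "Z": 300.0}
options = {
    "campaign_a": (15000.0, 10000.0),  # ROI of 0.5
    "campaign_b": (9000.0, 5000.0),    # ROI of 0.8
}

total = total_cost(costs)
winner = best_roi(options)
```

The calculated results can then be passed to an LLM to explain in plain language, so the model handles the phrasing while the code guarantees the figures.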

OpenAI has been getting the most media attention because of ChatGPT. What are the pros and cons of OpenAI in particular?

In summary, OpenAI has made significant advancements in AI research and development, producing powerful models with impressive language capabilities. However, there are concerns around accessibility, potential misuse, bias and computational requirements, as well as limitations in understanding. These areas need further R&D.

Here’s a bit more of a breakdown:

Pros:

- Advanced models – OpenAI has developed some of the most advanced AI models (like GPT-3), which demonstrate impressive natural language understanding and generation capabilities

- Large-scale training data – OpenAI's models are trained on vast amounts of data, allowing them to capture complex patterns and generate contextually relevant responses. For a sense of scale, GPT-3 has 175 billion parameters (the values the model learns during training), and GPT-4 is rumoured to have 1.76 trillion.

- Active research – OpenAI is actively involved in AI research, pushing the boundaries of what AI can do and regularly publishing research papers to share their findings with the broader community

- Collaborative partnerships – They’ve partnered with several organisations to expand the reach and applicability of their AI models, driving innovation and adoption across various industries. For example, they partnered with Microsoft so you can access ChatGPT via Azure OpenAI Studio

- Long-term focus – OpenAI's mission is focused on ensuring that artificial general intelligence (AGI) benefits all of humanity, which drives their R&D efforts towards creating safe and broadly accessible AI technologies

Cons:

- Limited accessibility – OpenAI's most advanced models, like GPT-3, currently aren’t available for free, with access provided through an API with usage limitations. This can restrict experimentation and adoption for individual developers or smaller organisations

- Potential misuse – Their powerful AI models can potentially be misused to generate misleading or harmful content, such as deepfakes or disinformation

- Bias and fairness – Like many AI models, OpenAI's can inherit biases present in the data they’re trained on, leading to potentially unfair or biased outputs

- Computational resources – Training advanced AI models like GPT-3 requires massive computational resources, which can be expensive and raise environmental concerns due to energy consumption

- Incomplete understanding – Despite their impressive capabilities, OpenAI's models, like GPT-3, still lack true understanding and reasoning abilities. This can lead to incorrect or nonsensical outputs in certain situations
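To give a feel for the computational resources point, here’s a back-of-the-envelope sketch of how much memory it takes just to hold a model’s parameters. It uses GPT-3’s published figure of 175 billion parameters and assumes 2 bytes per parameter (16-bit) or 4 bytes (32-bit); it ignores everything else needed to train or run a model, so treat it as a lower bound rather than a sizing guide.

```python
def weight_memory_gb(parameter_count, bytes_per_parameter):
    """Memory needed to store the parameters alone, in gigabytes (10**9 bytes)."""
    return parameter_count * bytes_per_parameter / 1e9


gpt3_parameters = 175_000_000_000

memory_16_bit = weight_memory_gb(gpt3_parameters, 2)  # 350.0 GB
memory_32_bit = weight_memory_gb(gpt3_parameters, 4)  # 700.0 GB
```

Even at 16-bit precision, that’s several hundred gigabytes before any training overhead, which is far more than a single typical accelerator holds and is a large part of why most organisations consume these models as a service.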

The role of public cloud in successful generative AI use

What are the advantages of using public cloud for generative AI?

Developing your own models takes significant time and resources. By using the foundational models that are readily available and continuously developed through public cloud providers, you remove these costs and reduce time-to-market.

A year ago, if you wanted to use a generative AI solution, you had to hire a data scientist and develop the models. Now, we’re advising that most customers stop investing in building their own models and use the best of what’s available from the existing models and rapidly evolving public cloud tools. This is because we:

- Know how models behave

- Know the tools as they evolve in the cloud

- Can more quickly develop solutions, accelerating the advantages and value to the organisation

The same goes for the tools required to build applications using generative AI and LLMs.

What are the disadvantages of using public cloud?

Depending on your sector and regulatory environment, if you’re looking to use generative AI with data that’s at a high classification level, you may not be able to store it in public cloud. Similarly, in certain domains, you may need to develop your own models for security reasons.

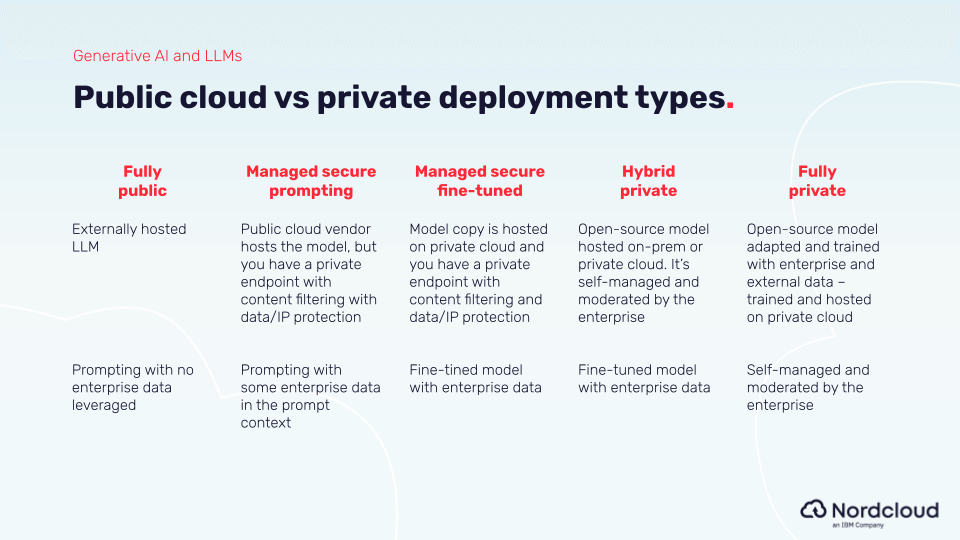

It’s worth noting that there’s a scale of how public/private you can go with your deployment, so it’s not a binary choice between private and public:

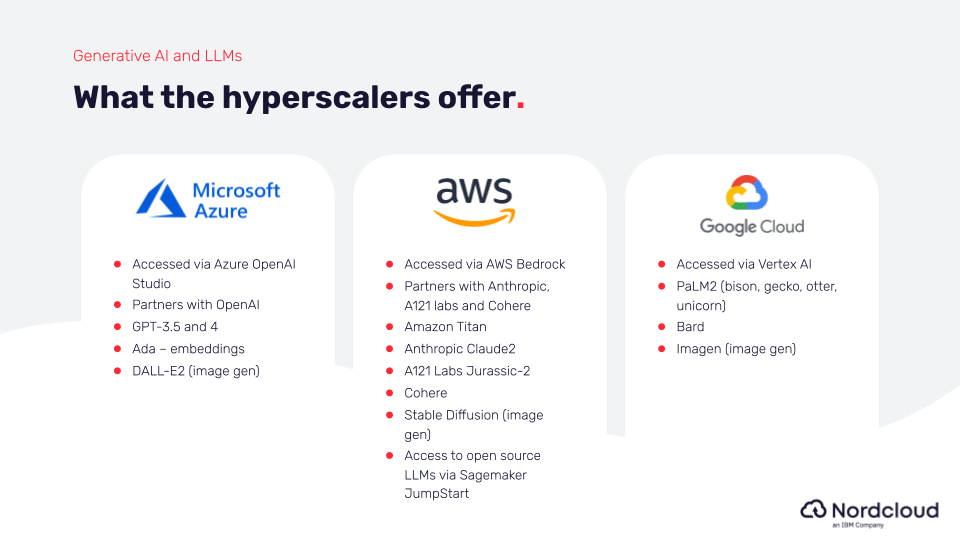

How do you leverage generative AI in Azure, AWS and Google Cloud?

All 3 hyperscalers offer access to generative AI tools (for text and image generation) via APIs. Here’s a summary:

Congrats on completing your first ‘module’!

Become even more of an expert by moving on to the next handy overviews in our Generative AI Q&A series, covering topics like getting started, deciding on POCs, scaling up and managing risks.

Keep an eye out on our Content Hub and LinkedIn for Module 2.
