Deploying OpenAI with Public Cloud: Dos & Don’ts

Generative AI is here. And the public cloud providers have unsurprisingly been investing heavily in all things AI. AWS, Microsoft Azure and Google Cloud have all recognised the importance of providing new capabilities, taking steps to support and facilitate the use of AI on their platforms.

In simple terms, all three cloud hyperscalers offer services and resources that make it easier to work with AI and build AI models.

They provide tools and pre-configured setups to speed up integration and make AI projects more efficient.

They also offer services for data storage, data processing, and scalable computing resources, which are essential for handling the large-scale data and computational requirements of AI.

This is great news for enterprises: it lowers the barrier for teams to build internal and customer-facing solutions that use AI.

So, what if you’re considering getting stuck into these new possibilities?

You’ll need to ensure you have a well-structured and efficient cloud setup to utilise AI effectively, while factoring in important things such as security, scalability, performance, data quality, and ethics.

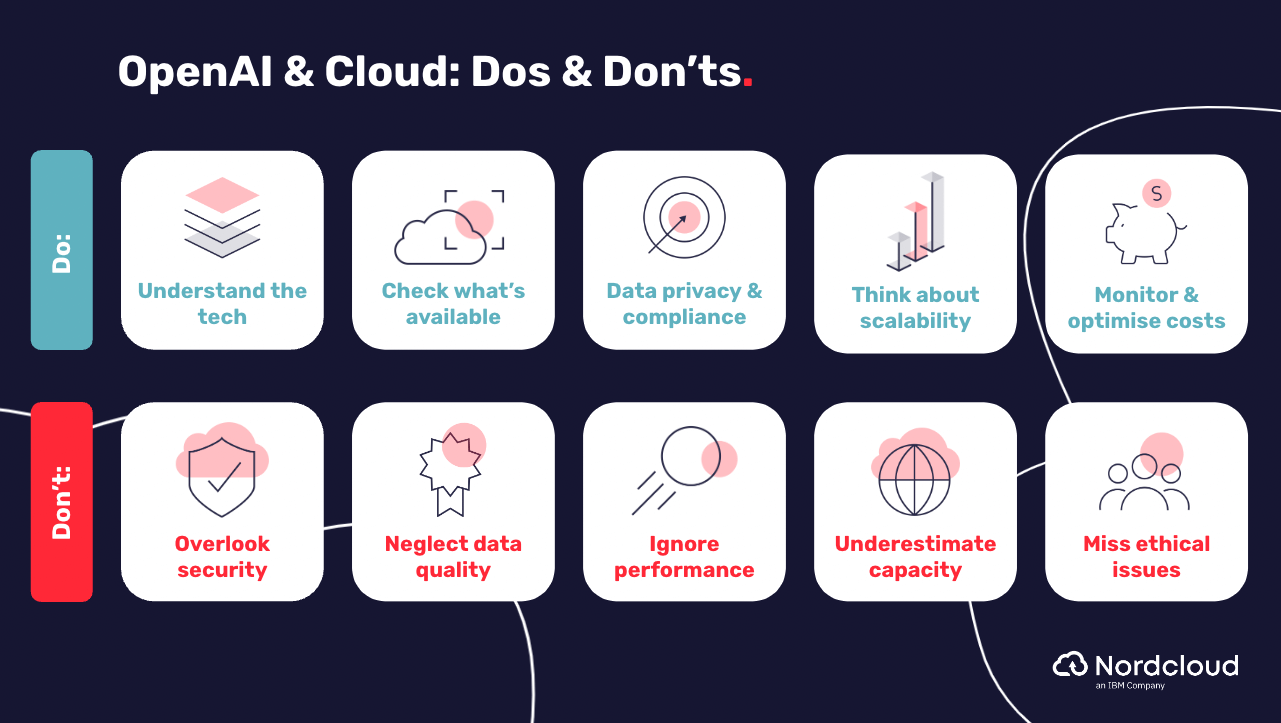

If you’re considering using AI in your cloud setup, let’s get started with a few dos and don'ts for you and your team:

Dos:

Do take time to understand the tech

Familiarise yourself with the capabilities, limitations, and potential use cases of AI to make informed decisions that suit the needs of your business.

Do check what’s available to you

See which frameworks and tools your cloud provider(s) offer out of the box. Good built-in integration and support let you test things quickly and easily.

Do ensure data privacy & compliance

You’ve probably heard about some of the dangers out there - it’s important to have robust security measures and keep to data privacy regulations to protect any sensitive data used by AI models.

Do think scalability

Design your cloud infrastructure to scale, so it can accommodate growing demands as you train models and expand your use of AI.

Do monitor & optimise costs

Continuously monitor resource utilisation and optimise your cloud setup to minimise costs associated with running AI workloads.

Don'ts:

Don’t overlook security

Implement proper access controls, encryption, and monitoring mechanisms to safeguard your AI models and data from unauthorised access.

Don’t neglect data quality

Ensure that the data used to feed AI models is accurate, diverse, and structured to avoid inaccurate, incomplete or misleading results.

Don’t ignore performance power

Optimise your cloud environment, such as leveraging GPU instances or specialised hardware, to achieve optimal performance when training or running AI models.

Don’t underestimate capacity

It’s worth remembering that training complex AI models can be computationally intensive, so allocate sufficient resources to handle the workload.

Don’t forget the ethical considerations

Keep in mind potential ethical concerns, such as bias or misuse of AI, and establish guidelines and processes to address them.


Let’s expand on some of these considerations in a little more detail:

Scalability

You’ll need to ensure that your cloud infrastructure can dynamically scale resources up and down based on the computational demands of AI models. For example, you can use cloud-native auto-scaling capabilities, or container orchestration platforms that automatically adjust the number of instances or containers based on workload.

So think about implementing efficient resource management techniques such as load balancing and distributed computing to distribute the load across multiple instances, ensuring the best utilisation of resources while avoiding bottlenecks.
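To make the auto-scaling idea concrete, here is a minimal sketch of a proportional scaling rule, similar in spirit to the one Kubernetes documents for its Horizontal Pod Autoscaler. The function name, the load metric and the instance limits are all assumptions for illustration; a real setup would rely on your provider's own auto-scaling service rather than hand-rolled code.

```python
import math


def desired_instances(current: int, observed_load: float, target_load: float,
                      min_instances: int = 1, max_instances: int = 10) -> int:
    """Scale the instance count in proportion to load, within fixed limits.

    `observed_load` and `target_load` use the same (hypothetical) unit,
    for example average GPU utilisation in percent per instance.
    """
    if current < 1 or target_load <= 0:
        raise ValueError("current must be >= 1 and target_load must be > 0")
    # Proportional rule: if load is 1.5x the target, run 1.5x the instances.
    raw = math.ceil(current * observed_load / target_load)
    # Clamp so a spike cannot scale past the budgeted maximum,
    # and a quiet period cannot scale below the minimum.
    return max(min_instances, min(max_instances, raw))


print(desired_instances(4, observed_load=90.0, target_load=60.0))   # scale up
print(desired_instances(4, observed_load=15.0, target_load=60.0))   # scale down
print(desired_instances(4, observed_load=300.0, target_load=60.0))  # capped
```

The upper limit matters as much as the rule itself: it is what stops a traffic spike from turning into an unexpected bill.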

Integration

As mentioned, the hyperscalers have made integration pretty easy with AI services and APIs. This simplifies the process of integrating AI models into your existing cloud architecture, enabling quicker and smoother development and deployment.

So, if you have specific AI models in mind, assess their compatibility with your current infrastructure and services. Ensure that the necessary libraries, frameworks, and tools are supported.

Cost Optimisation

As with any other application, you should monitor and analyse your cloud usage and expenses to identify opportunities for cost optimisation. The hyperscalers offer handy tools for this. Likewise, the tools and services in our FinOps approach can give you insight into resource utilisation and highlight areas of potential waste or inefficiency.

Leverage cost-saving strategies such as using spot instances for non-critical workloads. These are spare capacity offered at lower prices, which the provider can reclaim at short notice. Reserved instances can also provide cost savings for long-term usage. Or consider serverless options for certain AI workloads to pay only for actual usage and avoid idle costs.
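As a rough illustration of how these options compare, the sketch below works through the arithmetic for one month. Every figure in it (the hourly rate, both discounts and the number of busy hours) is hypothetical and chosen only to make the sums easy to follow; real prices vary by provider, region and instance type.

```python
HOURS_PER_MONTH = 730  # approximate number of hours in a month

# Hypothetical figures for illustration only; check your provider's pricing.
ON_DEMAND_RATE = 3.00      # cost per GPU instance per hour
SPOT_DISCOUNT = 0.70       # assumed 70% off the on-demand rate
RESERVED_DISCOUNT = 0.40   # assumed 40% off the on-demand rate


def monthly_cost(hourly_rate: float, instances: int,
                 hours: float = HOURS_PER_MONTH) -> float:
    """Cost of running `instances` machines for `hours` at `hourly_rate`."""
    return round(hourly_rate * instances * hours, 2)


def discounted(rate: float, discount: float) -> float:
    """Apply a fractional discount (0.40 means 40% off) to an hourly rate."""
    return rate * (1 - discount)


on_demand = monthly_cost(ON_DEMAND_RATE, instances=2)
reserved = monthly_cost(discounted(ON_DEMAND_RATE, RESERVED_DISCOUNT), instances=2)
spot = monthly_cost(discounted(ON_DEMAND_RATE, SPOT_DISCOUNT), instances=2)
# Pay-per-use: the same two instances billed only for 120 busy hours.
pay_per_use = monthly_cost(ON_DEMAND_RATE, instances=2, hours=120)

for name, cost in [("on-demand", on_demand), ("reserved", reserved),
                   ("spot", spot), ("pay-per-use", pay_per_use)]:
    print(f"{name:>12}: {cost:,.2f} per month")
```

The pay-per-use line is the one to watch for bursty workloads: if the instances would otherwise sit idle most of the month, paying only for busy hours can beat even a deep discount on always-on capacity.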

Data Capacity

Take time to evaluate the volume and frequency of data transfers between your local environment and the cloud. Optimise data transfer by compressing data before transferring it - making the data smaller in size without losing any important information. This can reduce bandwidth requirements and associated costs.
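As a small demonstration of lossless compression before transfer, the sketch below uses Python's standard `gzip` module on a made-up batch of records. The sample data and the `compress_payload` helper are assumptions for illustration; the saving you see in practice depends heavily on how repetitive your data is.

```python
import gzip
import json


def compress_payload(data: bytes) -> bytes:
    """Compress bytes with gzip before sending them over the network."""
    return gzip.compress(data)


# Hypothetical batch of labelled records, standing in for real training data.
records = [
    {"id": i, "label": "positive" if i % 2 else "negative",
     "text": "example review text"}
    for i in range(1000)
]
raw = json.dumps(records).encode("utf-8")
compressed = compress_payload(raw)

# Lossless: decompressing gives back exactly the bytes we started with.
restored = gzip.decompress(compressed)
assert restored == raw

ratio = len(compressed) / len(raw)
print(f"raw: {len(raw)} bytes, compressed: {len(compressed)} bytes "
      f"({ratio:.1%} of original)")
```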

This is also a good use case for edge computing, which processes data closer to the source and minimises the need for large-scale data transfers. This can be useful when dealing with limited bandwidth or when latency is an issue. Hyperscalers offer edge computing tools that can help here.

Need help in building a best-in-class data platform in cloud? Check out this handy guide.

Security & Privacy

You’ll need to implement security measures to protect the confidentiality and integrity of data used with AI models. This includes encrypting data, implementing access controls and strong authentication mechanisms, and regularly applying patches and software updates.

And always ensure compliance with local data privacy regulations and industry best practices by implementing policies and procedures to govern data handling, storage and access. Carry out regular security audits and vulnerability assessments to identify and address potential security risks.

Monitoring

It’s worth deploying monitoring solutions to track the performance and health of your AI models in the cloud. There are tools to help you keep track of resource utilisation, latency, response times, and error rates to identify performance bottlenecks and optimise resource allocation.

There are other cloud-native tools to help set up alerts and automated scaling to handle spikes in demand or failures. Implement logging and error tracking to capture and analyse errors or failures in real time, enabling rapid troubleshooting and resolution.
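To show what tracking latency and error rates can look like in practice, here is a minimal sketch that summarises a batch of request records and checks them against alert thresholds. The `RequestRecord` type, the threshold values and the sample data are all hypothetical; a managed monitoring service would normally collect and evaluate these metrics for you.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    latency_ms: float
    ok: bool  # False if the model call failed


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value with pct% at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarise(requests: list[RequestRecord]) -> dict[str, float]:
    """Reduce raw request records to the two metrics we alert on."""
    latencies = [r.latency_ms for r in requests]
    errors = sum(1 for r in requests if not r.ok)
    return {
        "p95_latency_ms": percentile(latencies, 95),
        "error_rate": errors / len(requests),
    }


def alerts(summary: dict[str, float], max_p95_ms: float = 500.0,
           max_error_rate: float = 0.05) -> list[str]:
    """Return the names of any thresholds (assumed values) that were crossed."""
    fired = []
    if summary["p95_latency_ms"] > max_p95_ms:
        fired.append("high latency")
    if summary["error_rate"] > max_error_rate:
        fired.append("high error rate")
    return fired


# Hypothetical sample: 18 fast, successful calls and 2 slow, failed ones.
sample = [RequestRecord(100.0, True)] * 18 + [RequestRecord(900.0, False)] * 2
summary = summarise(sample)
print(summary, alerts(summary))
```

Using a high percentile rather than the average is a deliberate choice here: a handful of very slow requests barely moves the mean, but it shows up clearly at the 95th percentile.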

Have you seen what we’ve been working on already? Check out our two use cases for OpenAI in the cloud and what we've learned.

Are you ready to realise the potential of AI + cloud for your business?

We’re here to help with the integration and utilisation of AI on your cloud platforms, allowing your teams and developers to make the most of your tools, services, and infrastructure to power and scale AI applications effectively.

See our OpenAI integration services and get in touch here. We’d love to help.
