Shared Responsibility & Cloud: Who’s Really Responsible for What?

- What ‘shared responsibility model’ and ‘shared fate model’ actually mean

- The problem with a shared responsibility model

- Why shared responsibility can mean shared confusion

- Will expansion strategies make this problem worse?

- Should this expansion mean greater responsibility for cloud providers?

- Need some help getting it right?

AI is probably a consideration for your company’s digital strategy.

But when implementing these kinds of services with public cloud, who’s really responsible for things like cybersecurity, regulatory compliance or emissions?

The hyperscalers are developing models to address this issue. Google Cloud, Microsoft and AWS each have a “shared responsibility model”, and Google Cloud also has what it calls a “shared fate model”.

These are essentially security and compliance models that tell service providers and users what they need to do to ensure every aspect of the cloud environment is standardised.

There are many factors at play here, and making sure they’re all secure can be confusing. So, what exactly are the questions that cloud shared responsibility models are designed to answer?

What ‘shared responsibility model’ and ‘shared fate model’ actually mean

A shared responsibility model suggests you can negotiate the terms and decide where each responsibility lies. But this is misleading.

Hyperscalers generally define for themselves where their responsibility lies. This is true across their various delivery models (such as SaaS, PaaS, IaaS and XaaS), and shared responsibility models tend to be no different.

It means the hyperscaler is responsible for certain security elements, but that the user is responsible for all others. So the business needs to know where the gaps are, and how to fill them. Yet these gaps are often unclear.

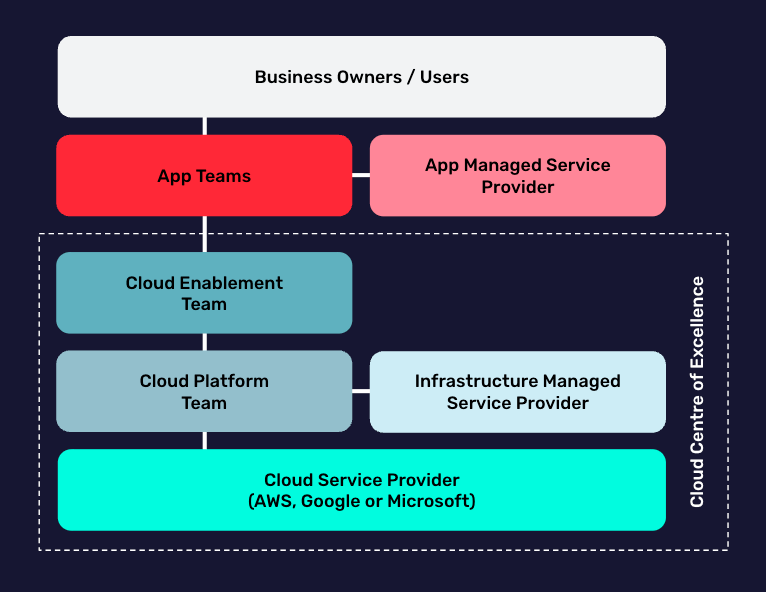

The actual impact of shared responsibility is often not recognised. Cloud providers do have an established model that splits responsibility, but gaps appear when responsibility is split again internally. This could be between the central cloud team or cloud centre of excellence (CCoE) delivering the generic cloud platform and the teams running applications on that platform, for example.

Because both the cloud and application teams often use external managed service providers (MSPs) to deliver and manage their cloud services, the actual impact of shared responsibility within organisations is often not addressed. This can have wide-reaching implications for security, ESG, AI or FinOps.
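One way to make these internal splits visible is to write them down as a simple responsibility matrix and check it for gaps. The sketch below is a minimal illustration only: the party names, the controls and their owners are made-up examples, not taken from any provider's published model, and `find_gaps` is a hypothetical helper.

```python
from dataclasses import dataclass

# Hypothetical parties, based on the roles mentioned in this article.
PARTIES = {"cloud_provider", "central_cloud_team", "application_team", "msp"}


@dataclass(frozen=True)
class Control:
    name: str
    accountable: frozenset  # parties that claim accountability for this control


def find_gaps(controls):
    """Return (unowned, contested) lists of control names.

    unowned: no party is accountable.
    contested: more than one party is accountable.
    """
    for control in controls:
        unknown = control.accountable - PARTIES
        if unknown:
            raise ValueError(f"Unknown parties for {control.name}: {sorted(unknown)}")
    unowned = [c.name for c in controls if not c.accountable]
    contested = [c.name for c in controls if len(c.accountable) > 1]
    return unowned, contested


# Illustrative assignments only; a real matrix would come from your own governance.
controls = [
    Control("physical data centre security", frozenset({"cloud_provider"})),
    Control("landing zone guardrails", frozenset({"central_cloud_team"})),
    Control("application data encryption", frozenset()),
    Control("OS patching", frozenset({"application_team", "msp"})),
]

unowned, contested = find_gaps(controls)
print("No accountable owner:", unowned)
print("More than one accountable owner:", contested)
```

A control with no owner is a gap nobody is filling, and a control with several owners is one that each party may assume the other is handling. Both are worth resolving explicitly.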

Similarly, the name ‘shared fate model’ could also set the wrong expectations. It suggests you should trust one another to do the right thing. In reality, you need to govern shared responsibility rather than leave it to ‘fate’, particularly in regulated industries. A clearly defined responsible cloud strategy is key.

Find out how to address these challenges in our blog Shared Responsibility & AI: What should your business be doing?

The problem with a shared responsibility model

According to the shared responsibility model, the cloud provider must monitor and respond to security threats relating to both the cloud itself and its underlying infrastructure. Meanwhile, end users are responsible for protecting data and any other assets they store in the cloud environment.

This has caused confusion. If a business runs its own on-premises data servers, it’s clearly responsible for cybersecurity, reducing emissions from data centres and using AI responsibly.

But store your data with a cloud provider and these become a shared responsibility. Which means cloud workloads – and any applications, data or activity associated with them – are not necessarily fully protected, by the business or the cloud provider. It’s a chink in the cloud’s armour that’s ripe for exposure.

And, with the expansion of generative AI in particular, plus increasingly fragmented cloud architectures and SaaS, this IT risk is becoming more decentralised, and so more fragile.

It means users have been unknowingly running workloads in unprotected (or not fully protected) public clouds, leaving them vulnerable to attacks that target the operating system, data or applications.

So, in a bid to demystify all the confusion surrounding this shared space, let’s take a closer look at exactly whose role it is to ensure breaches don’t happen in the cloud shared responsibility model.

Why shared responsibility can mean shared confusion

Who is actually accountable for security needs to be clearly defined. Just as with emissions, or any other cloud topic for that matter, the consequences of business and functional choices at a technical level need to be transparent, considered and mitigated.

But with so many different parties involved in the responsibility model, transparency of security is complicated.

Formal frameworks usually define who is responsible for what – and this works well for classic cloud services and cloud management processes.

But the introduction of AI services like ChatGPT adds a layer of complexity. It’s now far less clear which information is flowing in and out of the service. This raises questions around the possibility of information leakage when using the service. We discussed this in our recent blog OpenAI & Cloud: 2 Use Cases We Built & What We Learned.

Securing ChatGPT currently sits in the grey area between cybersecurity and people security, and that needs to be addressed. The formal standard frameworks need to be extended to include that grey area, focusing more on information security than on the classic security of the data and the services processing that data in the cloud.

Will expansion strategies make this problem worse?

As cloud providers offer more services, products, apps and AI, and see greater data gravity, this issue is only going to become more complicated and multi-faceted.

And hyperscalers continue to expand their services horizontally but increasingly also vertically.

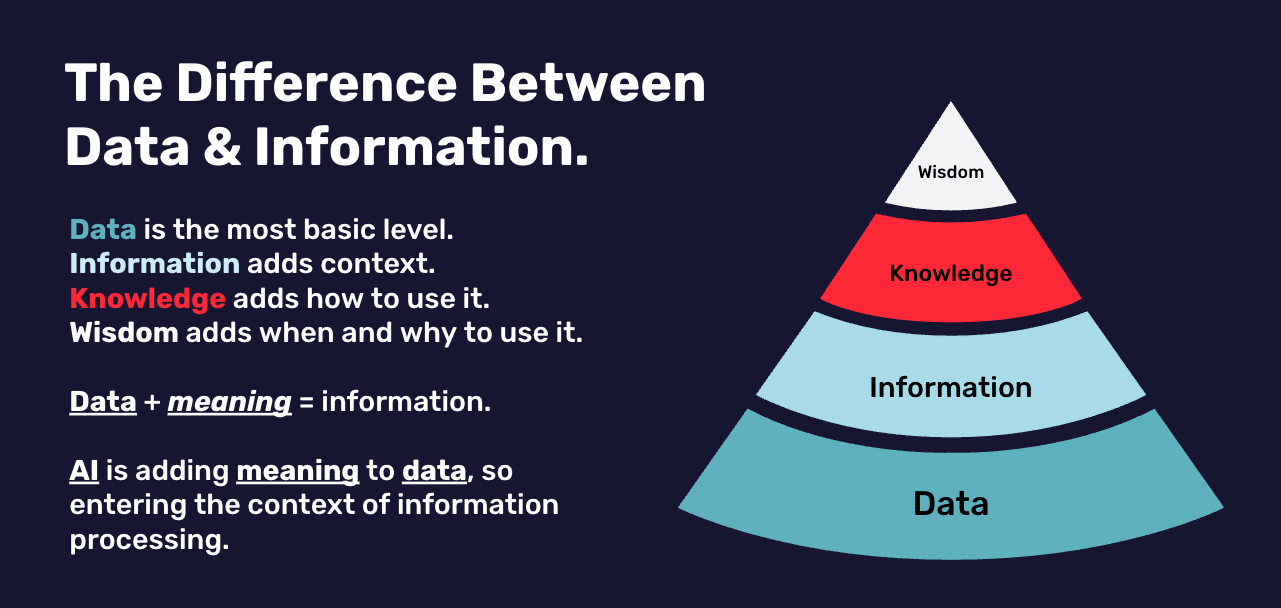

This means they have more technical services available, but also that these services are becoming more functional and less technical. As a result, they consume and process more data, and that data is becoming progressively less transparent, especially when using AI-related algorithms. They are also starting to perform information processing activities instead of just processing data. This, in turn, makes proper governance even more challenging.

Standardisation and automation can help with this, but they need to be reinforced by extending business and IT policies into that grey area and by providing greater clarity on governance and responsibilities. For example: who will be the data owner (not information owner) when AI running on public cloud is learning from and/or processing company information?

Should this expansion mean greater responsibility for cloud providers?

Offering more services to the market does not automatically imply a greater responsibility for the cloud provider.

As a business you might reasonably expect more in terms of broader functionality of the services, a higher degree of security, a better insight into carbon emissions from your cloud provider (did you know running things like AI can have an environmental impact?), and a higher service level. But you can’t totally outsource your own accountability to a third party.

For example, policies and the enforcement of ethical AI use are domains where the business needs to retain ultimate accountability. But there are a number of other critical governance and operational processes beyond these that cannot be outsourced.

Meanwhile, as cloud providers deliver more functional services, more responsibilities automatically arrive at their feet. Similarly, in critical infrastructure terms, regulations like DORA and ECI also come under their responsibilities.

But current developments blur the lines between being a service provider and delivering an actual application, which also requires another delivery model – as cloud providers also become managed service providers (MSPs).

And that means the cloud service provider (CSP) is no longer ‘just’ at the bottom of the shared responsibility model, but (also) moves up the model to the level of MSP. That brings a need for additional governance, roles and responsibilities, and perhaps even custom contracts and SLAs, to fit their new role when delivering and maintaining AI services.

Need some help getting it right?

Check out our other blog Shared Responsibility & Cloud: What should your business be doing?

At Nordcloud, we’ve built our advisory services to partner with organisations and overcome these kinds of challenges. If you’re using cloud, you need to make sure it’s integrated in your business with clear governance, and clear and defined roles and responsibilities.

This includes our Governance, Risk Management and Compliance (GRC) advisory team, which can support you in setting up and running your cloud governance in an effective way.

If you have any questions about this article or would like more information about our advisory services, you can contact me, Sander Nieuwenhuis, Nordcloud GRC Advisory Lead, directly.
