Efficient Custom AMI Workflow Using AWS Backup: A Practical Approach

15 February 2024

There are dozens of tools and hundreds of articles on how to build a custom AMI. Nevertheless, you can still run into a situation that calls for yet another solution. This article is about one such case.

Example case

Imagine a use case where you are allowed to use only the “whitelisted” AMI, e.g. ami-xxxxxxxx, which is built and shared by the central cloud division, and its “children” created by the AWS Backup service, which carry a proper tag, e.g. “aws:backup:source-resource ami-xxxxxxxx”.

If you try to use an AMI that is not whitelisted, a Sentinel check (integrated into the deployment pipeline) fails your deployment.
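The rule can be sketched in a few lines of Python. This is only an illustration of the logic, not the actual Sentinel policy: the function name is hypothetical, and the tag key and value format are taken from the example above.

```python
from typing import AbstractSet, Mapping

# Assumption: tag key and value format follow the article's example.
BACKUP_SOURCE_TAG = "aws:backup:source-resource"


def is_ami_allowed(ami_id: str, tags: Mapping[str, str], whitelist: AbstractSet[str]) -> bool:
    """Return True for a whitelisted AMI or an AWS Backup "child" of one."""
    if ami_id in whitelist:
        return True
    # A child AMI is accepted only if its source tag points at a whitelisted AMI.
    return tags.get(BACKUP_SOURCE_TAG) in whitelist
```

An AMI that is neither whitelisted nor tagged as a child of a whitelisted AMI is rejected, which is the situation that fails the deployment.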

In our case, the application installation process took too long (about 30 minutes), making it impractical for auto-scaling to rely on an automation procedure that takes a base AMI and installs the application on it.

Another possibility is to request whitelisting of every new custom AMI, but this option was not very attractive: the process is not fast, and it would slow down our development and testing and reduce the team's agility. A custom AMI with preinstalled packages was definitely what we needed.

Then we came up with an idea: why not use the AWS Backup service to bake our custom AMI on demand? We could build as many AMIs in DEV and TEST as we need and whitelist only the validated and fully tested ones for PROD.

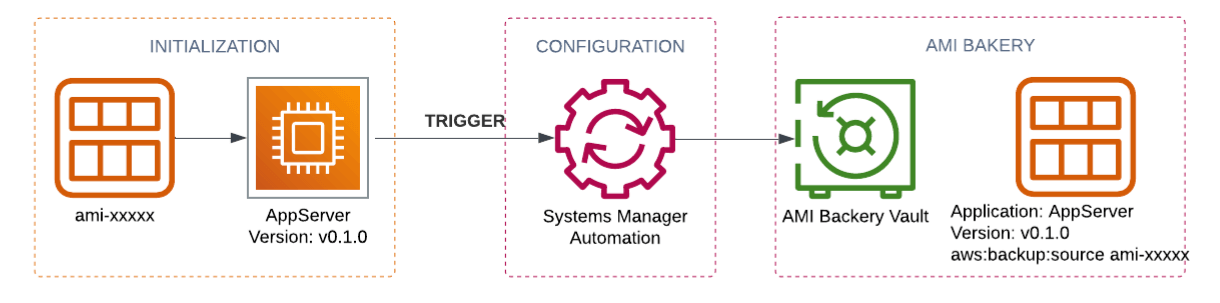

The flow that solves our problem involves three phases, as the diagram shows:

- Initialisation

- EC2 is created using the default whitelisted AMI

- Tags with an application type and version are assigned to the EC2 for visibility and are passed to the user-data (not shown on the diagram)

- User-data script triggers an automation document

- Configuration

- All required packages are installed

- Self-checks are completed

- AMI Bakery

- Backup on-demand is triggered by SSM automation

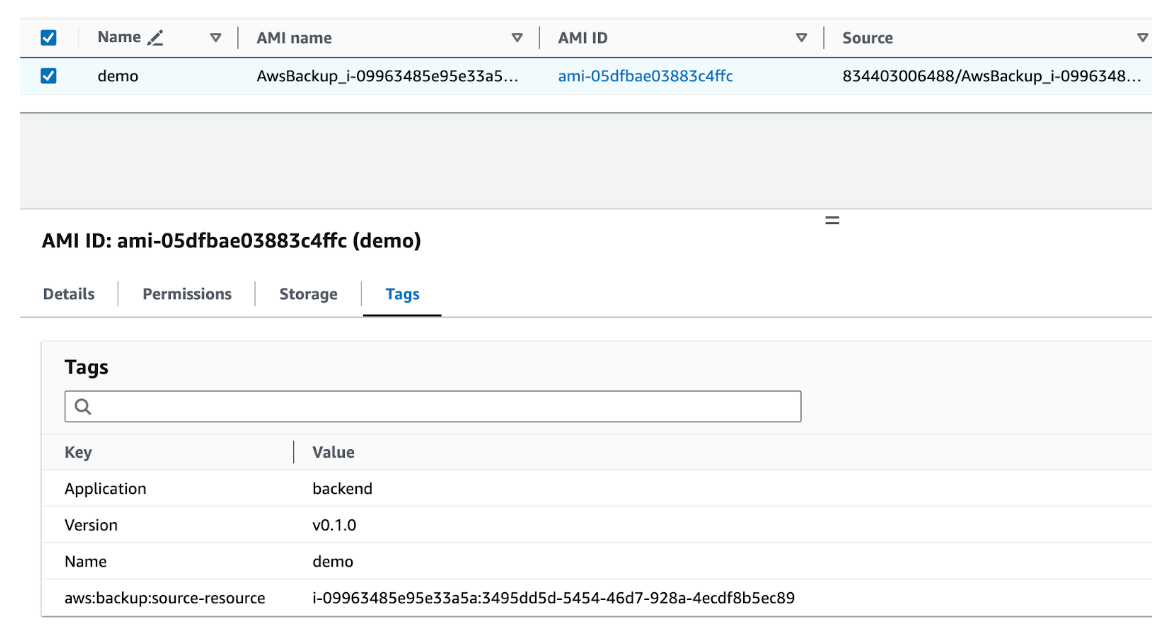

- AMI is created and properly tagged

Technical implementation

Let’s now move to the technical implementation.

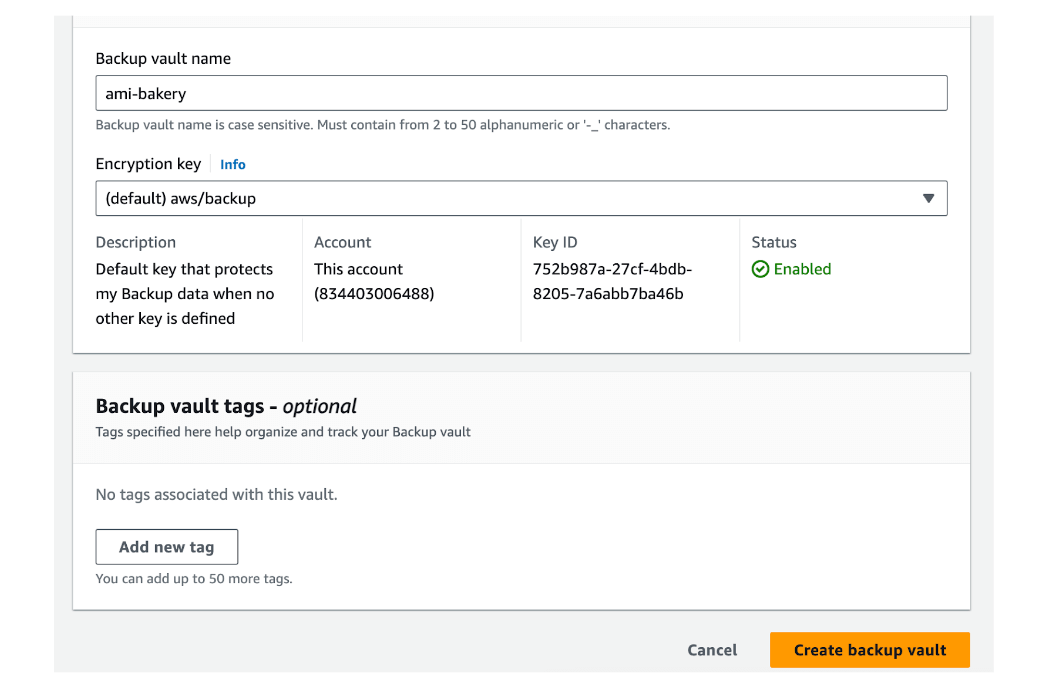

First, we will create a new AWS Backup Vault for our AMIs.
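As a minimal sketch, the vault could be created with boto3 along these lines. The helper name and the optional tags are hypothetical; only the vault name “ami-bakery” comes from this article. The helper just assembles the arguments, so it can be checked without AWS credentials.

```python
from typing import Dict, Optional


def build_vault_request(vault_name: str = "ami-bakery",
                        tags: Optional[Dict[str, str]] = None) -> dict:
    """Assemble keyword arguments for the AWS Backup CreateBackupVault call."""
    request = {"BackupVaultName": vault_name}
    if tags:
        # Tags are optional; they are attached to the vault itself.
        request["BackupVaultTags"] = dict(tags)
    return request


# Usage (requires boto3 and AWS credentials):
#   import boto3
#   boto3.client("backup").create_backup_vault(**build_vault_request())
```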

Then we need to create an SSM automation document, NewRunbook, that includes steps for executing an Ansible playbook on a base VM and for requesting an on-demand AWS Backup job.

    schemaVersion: '0.3'
    description: AMI Bakery
    parameters:
      AutomationAssumeRole:
        type: String
        description: The ARN of the IAM role that allows Automation to perform the actions on your behalf.
        default:   # role ARN (left blank here)
      BackupAssumeRole:
        type: String
        description: The ARN of the IAM role that is used for backup
        default:   # role ARN (left blank here)
      InstanceId:
        type: String
        description: Base instance ID
      Application:
        type: String
        description: Application type
      Version:
        type: String
        description: Application version
      Source:
        type: String
        description: URL of the Ansible playbook
      VaultId:
        type: String
        description: Backup Vault ID
        default: ami-bakery
    assumeRole: '{{ AutomationAssumeRole }}'
    mainSteps:
      # Step 1: install the application on the base instance with Ansible
      - name: InstallPackages
        action: aws:runCommand
        nextStep: StartBackupJob
        isEnd: false
        onCancel: Abort
        onFailure: Abort
        inputs:
          DocumentName: AWS-RunAnsiblePlaybook
          InstanceIds:
            - '{{ InstanceId }}'
          Parameters:
            playbookurl: '{{ Source }}'
            extravars: 'Application={{ Application }} Version={{ Version }}'
      # Step 2: request an on-demand backup, which produces the tagged AMI
      - name: StartBackupJob
        action: aws:executeAwsApi
        isEnd: true
        inputs:
          Service: backup
          Api: StartBackupJob
          ResourceArn: 'arn:aws:ec2:{{ global:REGION }}:{{ global:ACCOUNT_ID }}:instance/{{ InstanceId }}'
          BackupVaultName: '{{ VaultId }}'
          IamRoleArn: '{{ BackupAssumeRole }}'
          RecoveryPointTags:
            Application: '{{ Application }}'
            Version: '{{ Version }}'

Next, we will create an IAM role that SSM Automation will assume for executing this runbook.

This role should contain the minimal set of permissions needed for running the SSM document “AWS-RunAnsiblePlaybook” on EC2 instances, passing the default Backup role “AWSBackupDefaultServiceRole”, and starting a Backup job in the previously created vault “ami-bakery”, plus a few additional permissions for listing SSM commands and describing instance information.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "VisualEditor2",
          "Effect": "Allow",
          "Action": [
            "ssm:DescribeInstanceInformation"
          ],
          "Resource": [
            "*"
          ]
        },
        {
          "Effect": "Allow",
          "Action": [
            "ssm:SendCommand"
          ],
          "Resource": [
            "arn:aws:ssm:us-east-1:<account id>:document/AWS-RunAnsiblePlaybook",
            "arn:aws:ec2:*:*:instance/*"
          ]
        },
        {
          "Effect": "Allow",
          "Resource": [
            "*"
          ],
          "Action": [
            "ssm:ListCommands",
            "ssm:ListCommandInvocations"
          ]
        },
        {
          "Effect": "Allow",
          "Action": [
            "iam:PassRole"
          ],
          "Resource": [
            "arn:aws:iam::<account id>:role/service-role/AWSBackupDefaultServiceRole"
          ]
        },
        {
          "Effect": "Allow",
          "Action": [
            "backup:StartBackupJob"
          ],
          "Resource": [
            "arn:aws:backup:us-east-1:<account id>:backup-vault:ami-bakery"
          ]
        }
      ]
    }
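The last two statements of this policy exist because the final step of the runbook calls StartBackupJob and hands the backup role over to AWS Backup. As a rough sketch, the equivalent request could be assembled in Python as shown here. The function name and the sample values in the usage comment are hypothetical; the keys mirror the inputs of the runbook step.

```python
def build_backup_job_request(region: str, account_id: str, instance_id: str,
                             backup_role_arn: str, application: str, version: str,
                             vault_name: str = "ami-bakery") -> dict:
    """Assemble keyword arguments for the AWS Backup StartBackupJob call."""
    return {
        "BackupVaultName": vault_name,
        # Same ARN layout as the ResourceArn input of the runbook step.
        "ResourceArn": f"arn:aws:ec2:{region}:{account_id}:instance/{instance_id}",
        # Passing this role is what requires the iam:PassRole statement.
        "IamRoleArn": backup_role_arn,
        # These tags end up on the recovery point, i.e. on the baked AMI.
        "RecoveryPointTags": {"Application": application, "Version": version},
    }


# Usage (requires boto3 and AWS credentials; all values are placeholders):
#   import boto3
#   request = build_backup_job_request("us-east-1", "123456789012", "i-0abc",
#                                      "arn:aws:iam::123456789012:role/example",
#                                      "backend", "v0.1.0")
#   boto3.client("backup").start_backup_job(**request)
```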

We need to prepare a bash script for the EC2 instance user data that will trigger SSM automation after the VM is launched.

    #!/bin/bash
    # Values passed to the runbook as parameters
    application=backend
    version=v0.1.0
    codebase_url=https://github.com/my-automation-repo
    document_name="arn:aws:ssm:us-east-1:<account id>:document/NewRunbook"

    # Request an IMDSv2 session token, then read this instance's ID from the metadata service
    TOKEN=$(curl -s -X PUT "http://169.254.169.254/latest/api/token" -H "X-aws-ec2-metadata-token-ttl-seconds: 21600")
    instance_id=$(curl -s http://169.254.169.254/latest/meta-data/instance-id -H "X-aws-ec2-metadata-token: $TOKEN")

    # Start the automation for this instance
    aws ssm start-automation-execution \
      --document-name "$document_name" \
      --parameters "InstanceId=$instance_id,Application=$application,Version=$version,Source=$codebase_url"

Lastly, we set up an IAM role for the EC2 instance with policies that allow it to start SSM automation executions and to run the designated SSM document. The role includes the AmazonEC2RoleforSSM managed policy and the custom policy listed below.

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": [

"ssm:StartAutomationExecution"

],

"Resource": [

"arn:aws:ssm:us-east-1:<account id>:automation-definition/NewRunbook:$DEFAULT"

]

},

{

"Effect": "Allow",

"Action": [

"iam:PassRole"

],

"Resource": [

"arn:aws:iam::<account id>:role/ami-automation-role"

]

}

]

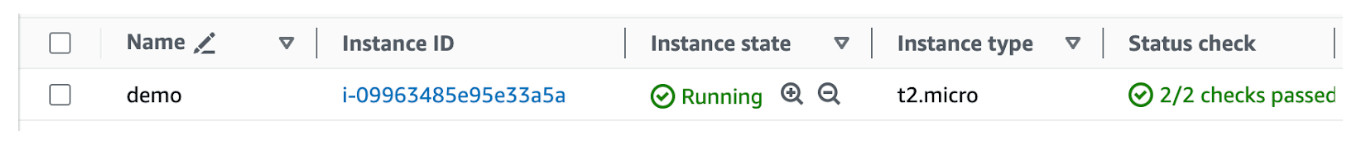

}Upon completion of setup, a new EC2 instance using AWS Linux AMI is launched.
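When the instance boots, the user-data script starts the automation. For readers who prefer the SDK over the CLI, a hedged sketch of the same request in Python follows. The function name is hypothetical and the values in the usage comment are the placeholders from the user-data script. Note that the SSM API expects every parameter value as a list of strings.

```python
def build_automation_request(document_name: str, instance_id: str,
                             application: str, version: str, source: str) -> dict:
    """Assemble keyword arguments for the SSM StartAutomationExecution call."""
    return {
        "DocumentName": document_name,
        # Each runbook parameter is passed as a list of strings.
        "Parameters": {
            "InstanceId": [instance_id],
            "Application": [application],
            "Version": [version],
            "Source": [source],
        },
    }


# Usage (requires boto3 and AWS credentials):
#   import boto3
#   request = build_automation_request("NewRunbook", "i-0abc", "backend", "v0.1.0",
#                                      "https://github.com/my-automation-repo")
#   boto3.client("ssm").start_automation_execution(**request)
```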

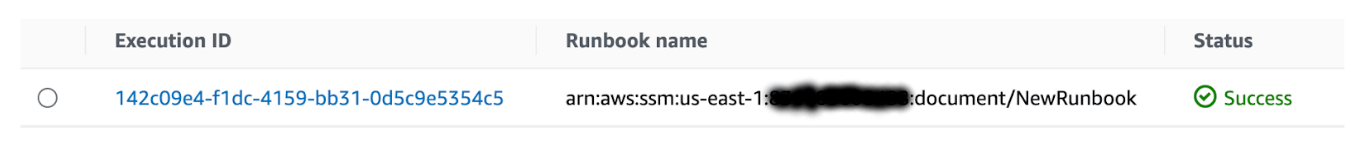

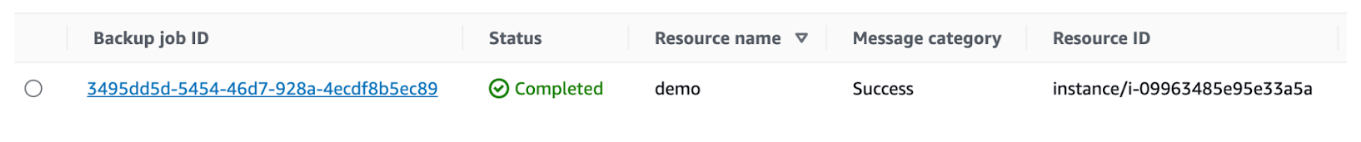

After a few minutes, the successful run of "NewRunbook" is recorded in SSM automation history, a new backup job is completed in the 'ami-bakery' Vault, and a new AMI tagged with all requisite parameters is created.
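To feed the new AMI into a pipeline, its ID has to be read from the recovery point. The sketch below assumes that the recovery point ARN of an EC2 backup has the form arn:aws:ec2:<region>::image/<ami id>; the function name is hypothetical, and the format is worth checking against a real recovery point in your vault.

```python
def ami_id_from_recovery_point(recovery_point_arn: str) -> str:
    """Extract the AMI ID from an EC2 recovery point ARN (assumed format)."""
    # The resource part is everything after the last colon, e.g. "image/ami-0123".
    resource = recovery_point_arn.rsplit(":", 1)[-1]
    kind, _, ami_id = resource.partition("/")
    if kind != "image" or not ami_id.startswith("ami-"):
        raise ValueError(f"not an EC2 image recovery point: {recovery_point_arn}")
    return ami_id
```

The recovery point ARNs themselves can be listed per vault, for example with the boto3 Backup client's list_recovery_points_by_backup_vault call.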

In conclusion, this approach uses AWS Backup to generate and manage custom AMIs on demand while staying compliant with the whitelist restrictions. It speeds up deployment and testing, cutting AMI delivery time from hours, or in some cases even days, to minutes, because it eliminates the manual whitelisting process for every build.
