Automated Production-Level Solution for Computer Vision Apps on the Edge Using AWS Panorama

In this post, we demonstrate how a fully AWS-native serverless architecture can be used to deploy computer vision applications that rely on ML model predictions.

With this architecture, we can securely and easily run computer vision (CV) models on an edge device. Because the framework is highly automated and decoupled, it can be adapted and deployed within a week, which also reduces the maintenance workload.

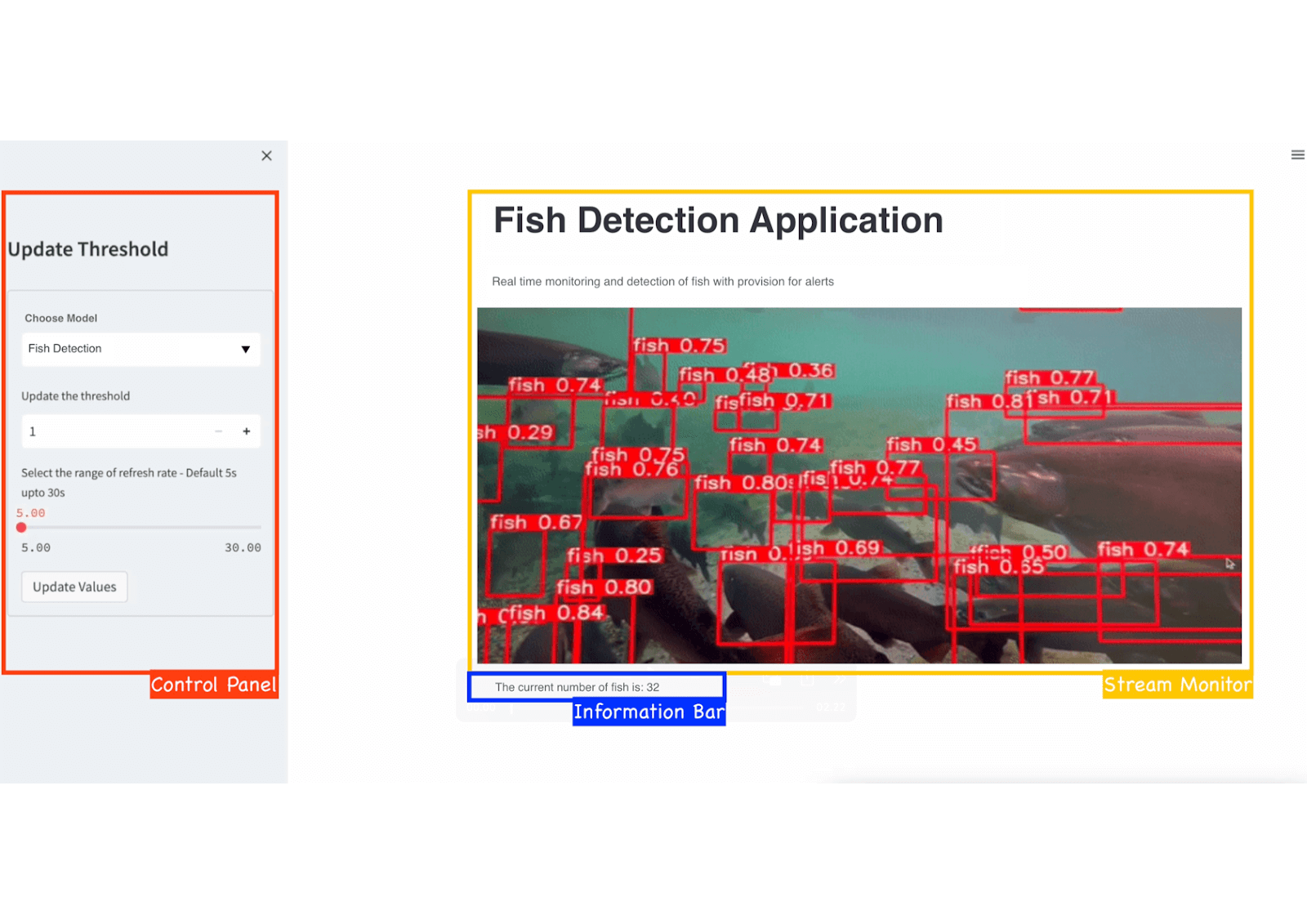

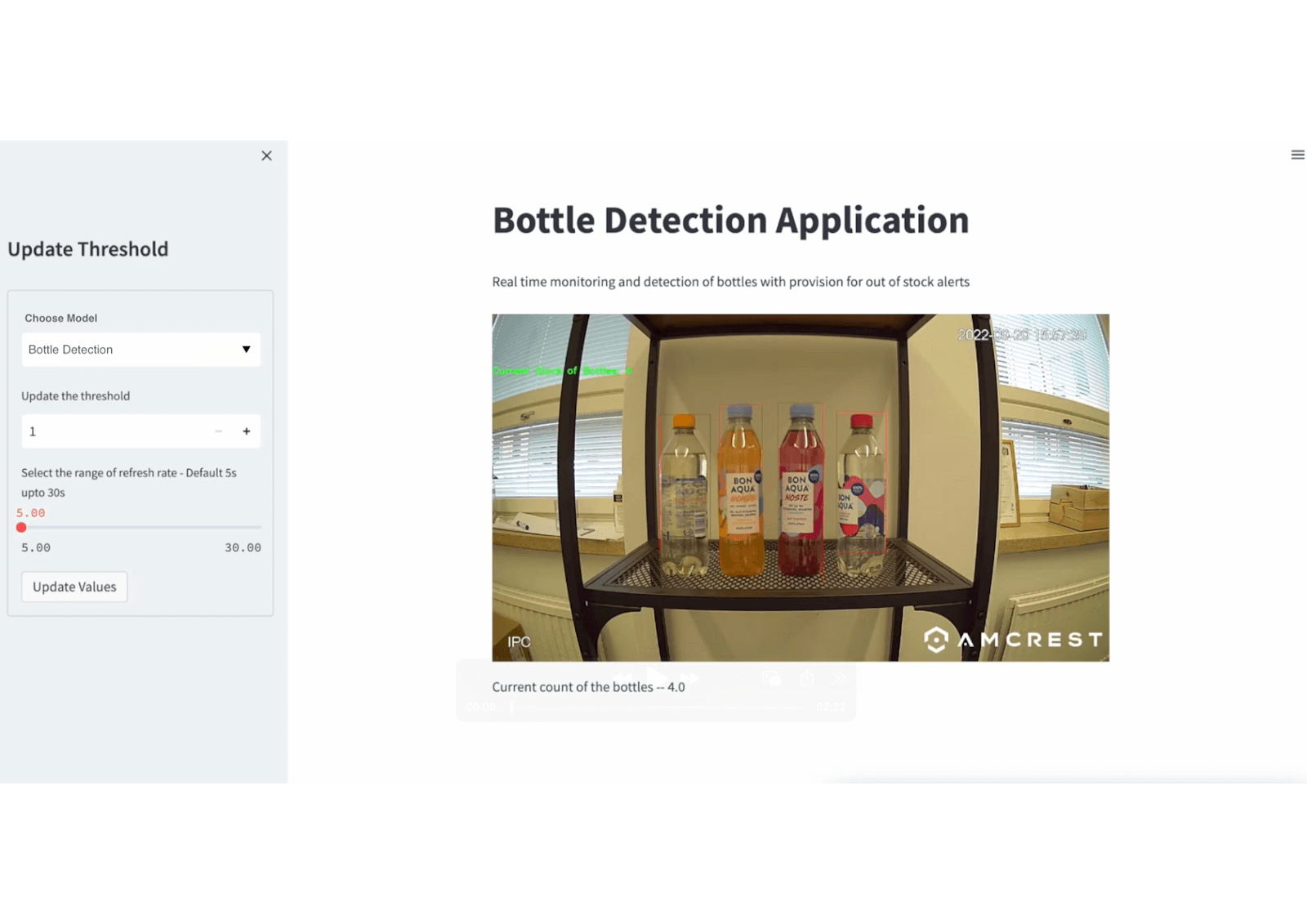

Our architecture allows you to connect one or more models and cameras to the system. The example shows two models running within the framework. From the UI, the user can choose which use case's predictions to display. The control panel is customisable; it currently has three variables: choose model, update threshold and update refresh rate.

The images show a model being switched between fish detection and bottle detection from the control panel in the UI, together with the corresponding real-time prediction stream.

The example shows a bottle detection model with the threshold set to 1 and the refresh rate set to 5 seconds. This means that when the number of bottles on the shelf drops below 1, the end user receives an email notification, and the monitor screen refreshes every 5 seconds.
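The threshold rule in this example can be sketched in a few lines of Python. This is only an illustration: the `ControlPanelSettings` class, its field names, the model name and the `needs_alert` helper are hypothetical and are not taken from the actual solution.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ControlPanelSettings:
    """Hypothetical mirror of the three control panel variables."""
    model: str                 # assumed model name, e.g. "bottle-detection"
    threshold: int             # minimum number of objects expected in view
    refresh_rate_seconds: int  # how often the monitor screen refreshes


def needs_alert(detected_count: int, settings: ControlPanelSettings) -> bool:
    """Return True when the detected count falls below the threshold."""
    return detected_count < settings.threshold


# The settings from the example: threshold 1, refresh every 5 seconds.
settings = ControlPanelSettings(
    model="bottle-detection", threshold=1, refresh_rate_seconds=5
)
```

With these settings, an empty shelf (`needs_alert(0, settings)`) would trigger a notification, while a shelf with at least one bottle would not.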

Computer Vision with AWS Panorama

Computer vision (CV) is a branch of artificial intelligence (AI) that discovers useful information from different visual inputs, such as images and videos, hence enabling intelligent monitoring and decision making.

However, in many computer vision applications, sending video data to the cloud for analysis can cause additional delays owing to queuing, propagation and network latency. This can make it impossible to fulfill the speed requirement necessary for real-time applications.

Edge computing, for example with AWS Panorama, moves data processing closer to where the data is generated. This proximity can deliver real-time, low-latency decision making and higher scalability, mitigate privacy issues, and allow operation with low internet bandwidth.

Furthermore, the difficulties of continuous development, deployment, maintenance and administration make it challenging to take computer vision projects from the PoC stage to production-level solutions.

So, together with the Nordcloud team, we designed a solution based on AWS Panorama for production projects. It takes advantage of AWS Panorama to run hardware-accelerated inference on the edge, and implements a fully managed CI/CD and machine learning pipeline.

We also applied the solution, from training to deployment, to two complex and advanced computer vision applications: smart fish farming and personal protective equipment (PPE) detection for HSE systems.

Model training for targeted applications

In our solution, we targeted two applications: smart fish farming and personal protective equipment for HSE systems. The models are trained with Amazon SageMaker; we used a custom dataset and trained YOLOv5 using transfer learning. The model artifact and the AWS Panorama application are then deployed through our CI/CD pipeline. The trained model has to meet the Amazon SageMaker Neo requirements, because it is compiled as part of the AWS Panorama application deployment.

Smart Fish farming

As in many other production industries, automation in fish processing has focused on eliminating dangerous, difficult, and repetitive tasks. For example, automating the trimming and sorting of defective fish requires advanced technology. Defects in fish can have natural causes, such as parasites, melanin spots, and disease, or can arise during shipping or in the processing hall.

To automate the trimming or sorting processes, a machine needs to identify defects, determine their severity, and determine trimming, sorting or treatment actions based on the defect. Real-time decision-making is crucial to identify appropriate action in a fraction of a second.

Sample result from the YOLOv5 fish detection model trained with transfer learning. The model detects fish in real time and can count or crop them for later analysis.

Health, Safety and Environment (HSE)

HSE systems and processes create a healthy and safe environment for all workers by preventing injuries and harmful conditions. AI and computer vision can be applied specifically to address health and safety concerns in real-world situations.

Numerous safety scenarios can be considered for various industries, including oil and gas, manufacturing, warehousing, and even public places. For example, the model can identify personal protective equipment (PPE), including helmets, shoes, gloves, and industrial goggles, or detect heavy and light vehicles, cranes and work zones to help keep workers safe.

Sample result from a person entering a construction site. The trained model can identify the person without a helmet and send alerts.

Sample result of the trained model detecting people working on a construction site from a long distance.

Customer benefits

With our solution, we achieve the following benefits:

Real Time Decision Making

The AWS Panorama edge device processes video locally, avoiding the latency of a round trip to the cloud, to power real-time decision making

Quick Deployment

Built with AWS CDK, the solution delivers fully automated DevOps pipelines that deploy preconfigured infrastructure in minutes

Iteration time shortened from weeks to hours (saving time and money and increasing agility)

Cost and maintenance reduction

Cost and time effective maintenance based on DevOps best practices

Completely built with AWS native serverless technologies

Scalability

Highly scalable by supporting multiple Panorama devices and cameras

Serverless computing enables horizontal scalability of backend services

Customisable

Quick Model Adaptation for different Computer Vision use cases

A custom tool which can be tailored according to the customer requirements

Security

Extensive built-in security features to secure data privacy

Solution Architecture

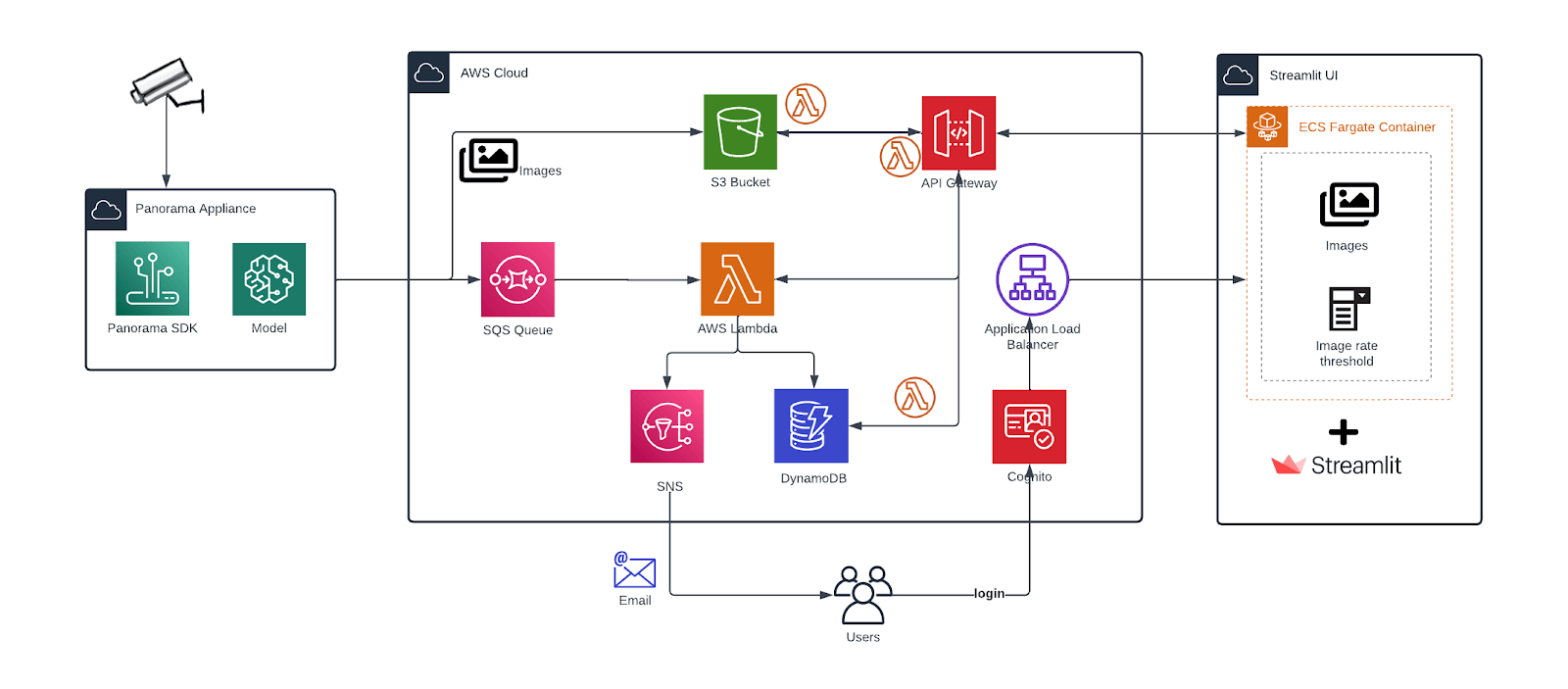

The proposed architecture receives a real-time data stream from a web camera. The Panorama appliance processes the stream with the deployed model to recognize objects in each frame. The predicted image frame is then uploaded to S3, and the payload data that our control panel requires is sent to SQS.
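As a rough sketch of this step, the snippet below builds a payload and hands the frame and payload to S3 and SQS. The two clients are passed in and are assumed to behave like boto3 clients (`put_object` and `send_message` with keyword arguments). The payload fields, the object key layout, the bucket name and the queue URL are all assumptions made for illustration, not the format used in the actual solution.

```python
import json
from datetime import datetime, timezone


def build_payload(camera_id, model_name, labels, frame_key, captured_at):
    """Assemble an assumed message body for the control panel."""
    return {
        "camera_id": camera_id,
        "model": model_name,
        "object_count": len(labels),
        "labels": list(labels),
        "frame_key": frame_key,  # where the annotated frame is stored in S3
        "captured_at": captured_at.isoformat(),
    }


def publish_prediction(s3_client, sqs_client, bucket, queue_url, frame_bytes, payload):
    """Upload the annotated frame to S3, then send the payload to SQS."""
    s3_client.put_object(Bucket=bucket, Key=payload["frame_key"], Body=frame_bytes)
    sqs_client.send_message(QueueUrl=queue_url, MessageBody=json.dumps(payload))


# Example payload for a frame in which two fish were detected.
payload = build_payload(
    camera_id="cam-1",
    model_name="fish-detection",
    labels=["fish", "fish"],
    frame_key="frames/cam-1/0001.jpg",
    captured_at=datetime(2023, 1, 1, tzinfo=timezone.utc),
)
```

Uploading the frame before sending the message means that a consumer reading the payload can expect the referenced S3 object to exist already.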

Then, SQS triggers a Lambda function, which fetches the messages, applies customized business logic, and saves the output to DynamoDB. Based on the payload content, Lambda sends SNS alerts to the end user. For example, a smart fish farm can receive alerts when the number of fish arriving in a particular region of interest increases over time, and this pattern can be used to determine fishing times.
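The Lambda step could look roughly like the sketch below. It relies on the standard shape of an SQS-triggered Lambda event (`Records`, each with a JSON string in `body`). Everything else is an assumption for illustration: the payload fields `object_count` and `camera_id`, the "three rising counts in a row" rule, and the `save_item` and `send_alert` callables, which stand in for thin wrappers around DynamoDB and SNS.

```python
import json


def is_increasing(counts):
    """True when every count is strictly larger than the one before it."""
    return all(a < b for a, b in zip(counts, counts[1:]))


def process_event(event, save_item, send_alert, history):
    """Handle one SQS-triggered invocation and return the number of alerts sent.

    event      -- Lambda event in the SQS shape: {"Records": [{"body": "..."}]}
    save_item  -- callable that persists one payload (e.g. to DynamoDB)
    send_alert -- callable that sends one alert message (e.g. via SNS)
    history    -- list of earlier object counts, oldest first (assumed state)
    """
    alerts = 0
    for record in event["Records"]:
        payload = json.loads(record["body"])
        save_item(payload)
        history.append(payload["object_count"])
        # Hypothetical business rule: alert after three rising counts in a row.
        window = history[-3:]
        if len(window) == 3 and is_increasing(window):
            send_alert(f"Object count rising on {payload['camera_id']}: {window}")
            alerts += 1
    return alerts
```

Passing the storage and notification functions in as arguments keeps the business rule testable without any AWS access; in a deployed function they would wrap the real service clients.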

The UI consists of two components: a monitoring window and a control panel. The UI runs on ECS Fargate, where the configurable control panel is deployed. The end user logs in to the UI via Amazon Cognito and monitors the real-time object detection scenes.

The control panel in the UI enables the selection of different models and of the key monitoring values of the application. The control panel APIs follow a serverless design so that they can be adapted to custom requirements.

The architecture supports connecting multiple cameras and running different models. Edge computing enhances data privacy protection and eases the constraints of low network bandwidth. The infrastructure is built with AWS CDK, which means it can easily be rebuilt in a new AWS environment.

Automation with AWS CDK

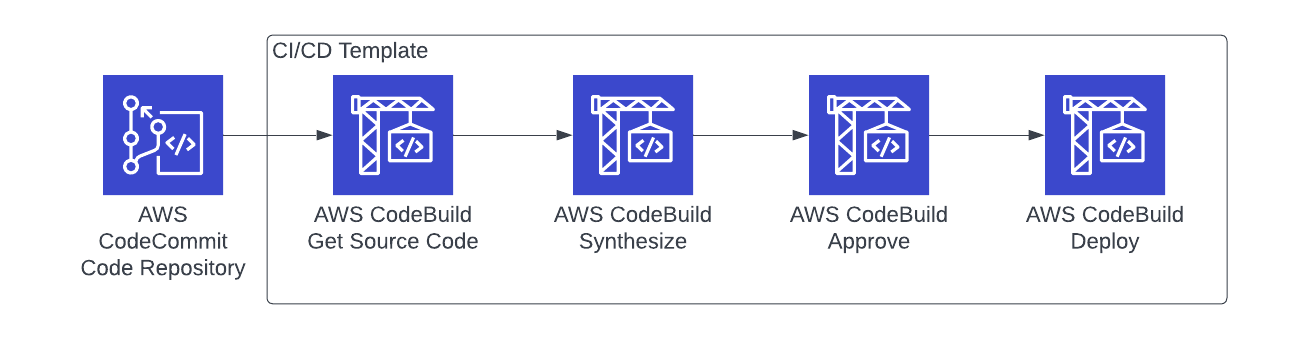

Many computer vision examples give you the code and expect you to make it production-ready on your own. Not here! We've created a production-ready pipeline with AWS CDK that implements the CI/CD and deploys all the AWS application resources. The whole procedure is managed with AWS CodeBuild projects. To maximize automation, we also deploy the machine learning applications to Panorama using customized scripts.

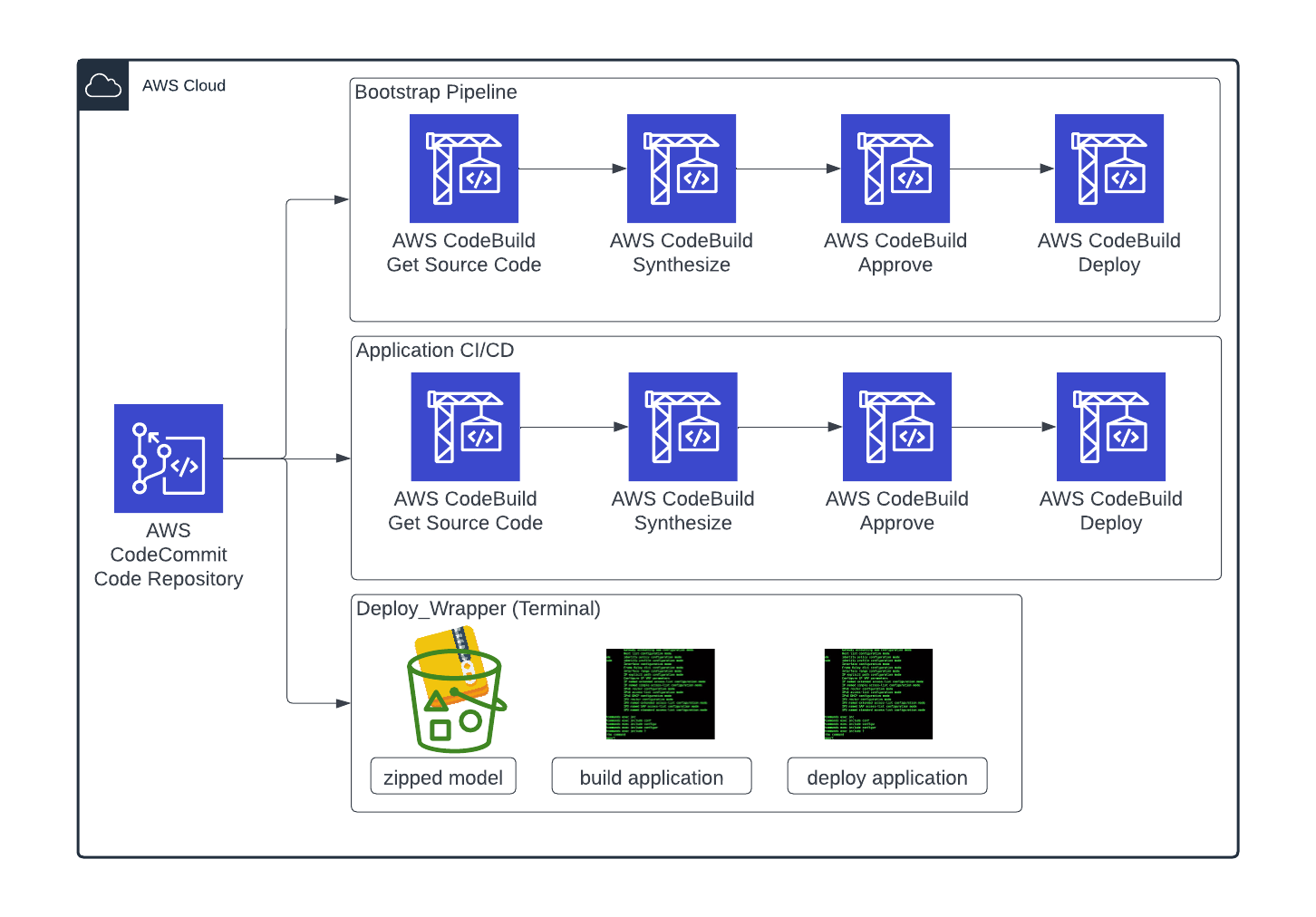

Below you see the architecture of the infrastructure deployment steps, which we’ll explain in more detail:

We chose a mono-repo approach in AWS CodeCommit. All pipelines have access to the code and can be triggered by code changes (merged pull requests, to be specific). We split the infrastructure deployment into three components to keep the deployments clearly separated and to improve maintainability and scalability:

- Bootstrap Pipeline = deploys all necessary components assuming an empty AWS account

- Application CI/CD = deploys all cloud application resources

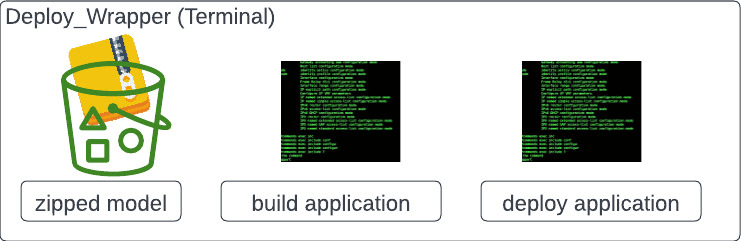

- Deploy Wrapper = deploys the application to the Panorama device and gives information about the Appliance device state.

Infrastructure Deployment Deep Dive

The Get Source Code step takes the CodeCommit repository, creates an artifact from it, and provides it as input to the synthesize step. The pipeline is then approved manually by the developer, after which the deployment step deploys the resources.

The Deploy_Wrapper pipeline shown above contains a set of commands wrapped in a Makefile. With simple commands, it deploys applications to the Panorama Appliance and retrieves custom information from the device (e.g. the status of the apps deployed on it).
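A status command of this kind could be backed by a small script like the sketch below. It is not the Makefile from the solution. The client is passed in and is assumed to behave like the boto3 `panorama` client, with a `list_application_instances` call that accepts `DeviceId` and `NextToken` and returns `ApplicationInstances` entries carrying `Name`, `Status` and `HealthStatus`. Treat those names as assumptions to check against the Panorama API reference.

```python
def list_app_status(panorama_client, device_id):
    """Return {application name: (status, health)} for one appliance."""
    statuses = {}
    kwargs = {"DeviceId": device_id}
    while True:
        page = panorama_client.list_application_instances(**kwargs)
        for app in page.get("ApplicationInstances", []):
            # Fall back to the instance id when no name is set.
            name = app.get("Name") or app.get("ApplicationInstanceId")
            statuses[name] = (app.get("Status"), app.get("HealthStatus"))
        token = page.get("NextToken")
        if not token:
            return statuses
        kwargs["NextToken"] = token  # request the next page of results


def format_report(statuses):
    """Render one 'name: status (health)' line per application, sorted by name."""
    return "\n".join(
        f"{name}: {status} ({health})"
        for name, (status, health) in sorted(statuses.items())
    )
```

A Makefile target would then only need to call this script with the device id and print the report.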

Conclusion

In this post, we’ve demonstrated the creation of a fully AWS native serverless architecture to deploy computer vision applications leveraging ML model predictions.

With this solution, we securely and easily deploy and run computer vision models on an AWS Panorama device. Because the framework is highly automated and decoupled, it can be quickly adapted and deployed to new CV scenarios, which also reduces the maintenance workload.

In the next pilot release, we are looking to improve our UI by adding visual analytics capabilities and to develop a more sophisticated framework for the MLOps procedure, considering even more complex computer vision use cases.

We’re an AWS Premier Consulting Partner, Managed Service Provider and Authorized Training Partner, with over 1,000 AWS certifications in house. So, if you need help developing or deploying computer vision applications on AWS, we’re here to help. Just get in touch with Roman Strachowski, Head of Advanced Data Solutions at Nordcloud to discuss your needs.

Authors

- Subash Prakash - Cloud Data Engineer @ Nordcloud

- Yue Bai - Cloud Data Engineer @ Nordcloud

- Stephan Völkl - Senior Cloud Data Engineer @ Nordcloud

- Salman Khaleghian - Data Scientist @ Nordcloud

- Ilias Biris - Data Solution Architect @ Nordcloud and Tech Lead for Panorama Development

- Ehsan Mohebianfar - Cloud Data Engineer @ Nordcloud and Tech Lead for Panorama Development
