Building an Event-Driven Architecture with EventBridge Pipes & Terraform: A Walkthrough Guide

As new technologies develop rapidly, the need for tools that reduce development time and provide seamless communication between services keeps growing.

In a recent blog post, AWS introduced Amazon EventBridge Pipes. It describes how Amazon EventBridge Pipes provides direct, point-to-point integrations between supported sources and targets, with support for advanced data transformation and enrichment.

In this blog, I'll describe how to implement Amazon EventBridge Pipes between a DynamoDB stream and an EventBridge event bus using Terraform.

Understanding the Core Mechanics of EventBridge Pipes

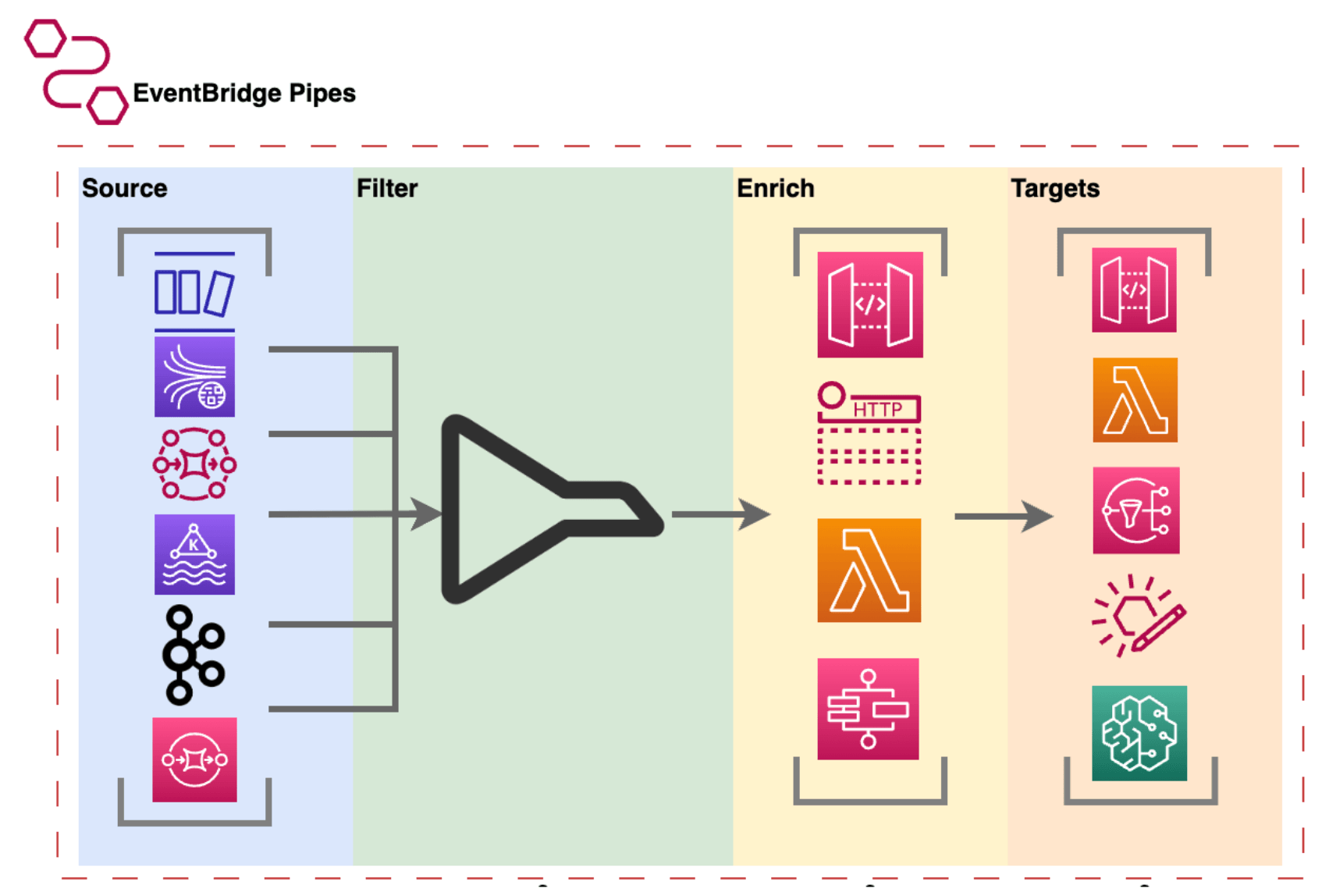

Before diving into the practical part, let's cover a few core concepts of EventBridge Pipes. As mentioned before, EventBridge Pipes provides point-to-point integrations between sources and targets and can filter, enrich, and transform events in flight.

As the diagram above shows, a pipe can read from a variety of sources, such as:

- Amazon DynamoDB stream

- Amazon Kinesis stream

- Amazon MQ broker

- Amazon MSK stream

- Self-managed Apache Kafka stream

- Amazon SQS queue

EventBridge Pipes can also filter events selectively, using the same event-pattern syntax as EventBridge event bus rules and Lambda event source mappings.

For example, suppose we only want to process events that contain a certain field. The filter criteria below pass only those DynamoDB stream records whose new image contains a string attribute named message:

```json
{
  "dynamodb": {
    "NewImage": {
      "message": {
        "S": [{ "exists": true }]
      }
    }
  }
}
```

Additionally, EventBridge Pipes can enrich events in flight (and reshape them with input transformers) by calling one of the following:

- API destinations

- Amazon API Gateway

- Lambda functions

- Step Functions state machines

During enrichment, the pipe invokes the Lambda function, API, or state machine synchronously and waits for the response before it continues. This matters because a slow enrichment step adds latency to every batch the pipe processes.
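In Terraform, enrichment is configured on the pipe resource itself (created in step 4) through its enrichment argument, and the pipe's IAM role (step 3) must be allowed to invoke the enrichment target. The sketch below assumes a hypothetical Lambda function, aws_lambda_function.enricher, defined elsewhere; the policy names are our own choice.

```hcl
# Assumption: aws_lambda_function.enricher is a hypothetical function defined elsewhere.
# Allow the pipe role from step 3 to invoke the enrichment function.
data "aws_iam_policy_document" "pipe_enrichment" {
  statement {
    actions   = ["lambda:InvokeFunction"]
    resources = [aws_lambda_function.enricher.arn]
  }
}

resource "aws_iam_role_policy" "pipe_enrichment" {
  name   = "pipe-enrichment"
  role   = aws_iam_role.pipe_role.id
  policy = data.aws_iam_policy_document.pipe_enrichment.json
}

# Then, inside the aws_pipes_pipe resource, point the pipe at the function:
#
#   enrichment = aws_lambda_function.enricher.arn
```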

After receiving, filtering, and enriching the data, EventBridge Pipes sends the events to the target. A broad set of target services is supported, including:

- API Gateway

- Kinesis stream

- Lambda function

- EventBridge Bus in the same account and Region

- Amazon SQS queue

- Amazon SNS topic

- CloudWatch log group

- and so on

By combining these four components (source, filtering, enrichment, and target), we can set up serverless ETL pipelines that scale automatically and respond to events as they happen.
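When the target is an event bus, the pipe by default sets the event's source to "Pipe <pipe name>" and its detail-type to a value such as "Event from aws:dynamodb" (both are visible in the sample log entry in the Testing section). If you prefer your own values, the aws_pipes_pipe resource accepts a target_parameters block; a sketch is below, where the source and detail-type strings are hypothetical.

```hcl
# Fragment for the aws_pipes_pipe resource: override the envelope fields
# of events delivered to the EventBridge bus. Values are hypothetical.
target_parameters {
  eventbridge_event_bus_parameters {
    source      = "myapp.dynamodb" # replaces the default "Pipe <pipe name>"
    detail_type = "TableChange"    # replaces the default "Event from aws:dynamodb"
  }
}
```

If you override source this way, any rule that matches on "Pipe <pipe name>", such as the one in step 5, must be updated to match the new value.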

Implementation

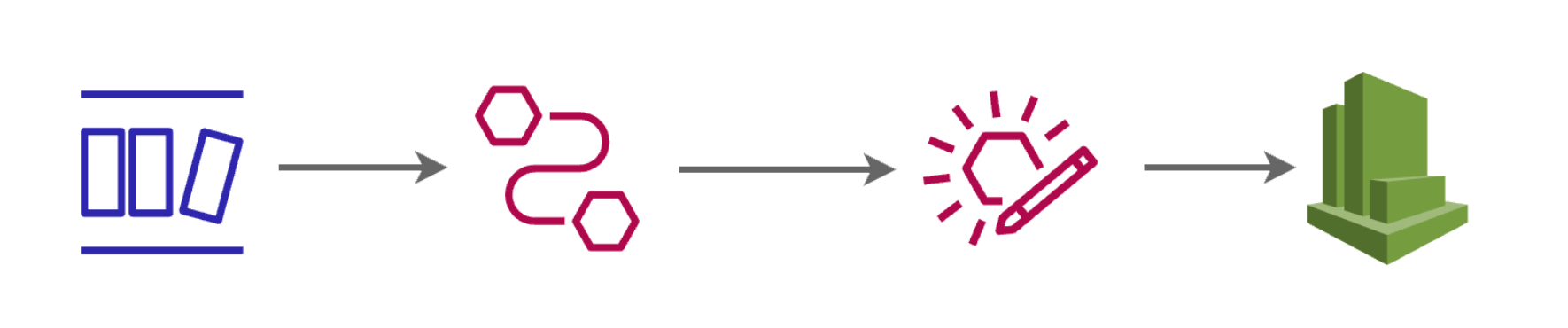

Now let's implement an architecture consisting of DynamoDB, EventBridge Pipes, and an EventBridge event bus using Terraform. The diagram above shows the architecture of our application.

We’ll funnel data through DynamoDB streams into EventBridge Pipes, which will then forward the data to the EventBridge Bus. Serving as the producer, the EventBridge Bus will dispatch events to multiple consumers for further processing.
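The snippets in this walkthrough don't include provider configuration. They assume something like the following; the version constraint is our assumption (we take the nested dynamodb_stream_parameters block used in step 4 to be AWS provider 5.x syntax), and the region simply matches the sample log entry in the Testing section.

```hcl
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
      # Assumption: the nested *_parameters blocks of aws_pipes_pipe need provider 5.x
      version = ">= 5.0"
    }
  }
}

provider "aws" {
  # Region taken from the sample log entry; adjust as needed
  region = "eu-west-1"
}
```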

1. DynamoDB Setup

First, we define our DynamoDB table. Insert, update, and delete operations on the table generate change events, which are captured by its DynamoDB stream:

```hcl
module "dynamodb_table" {
  source = "terraform-aws-modules/dynamodb-table/aws"

  name     = "event-bridge-example"
  hash_key = "event_id"

  # Enable the stream and include both old and new item images in each record
  stream_enabled   = true
  stream_view_type = "NEW_AND_OLD_IMAGES"

  attributes = [
    {
      name = "event_id"
      type = "N"
    }
  ]
}
```

In the example above, we set up a DynamoDB table with a stream enabled. Every insert, update, and delete is recorded in the stream, and because stream_view_type is NEW_AND_OLD_IMAGES, each record carries the item's new and old images (where they exist).
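To check the stream after deployment and see the ARN the pipe will read from, the stream ARN can be exposed as a Terraform output. dynamodb_table_stream_arn is the same module output the pipe uses in step 4; the output name itself is our own choice.

```hcl
# Expose the stream ARN so it can be inspected after `terraform apply`
output "dynamodb_stream_arn" {
  description = "ARN of the DynamoDB stream used as the pipe source"
  value       = module.dynamodb_table.dynamodb_table_stream_arn
}
```

After terraform apply, running terraform output dynamodb_stream_arn prints the ARN.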

2. Target Setup: EventBridge Bus

As the pipe's target, we'll use an EventBridge event bus that receives all events from the pipe. We define a custom event bus using the EventBridge Terraform module:

```hcl
module "eventbridge" {
  source = "terraform-aws-modules/eventbridge/aws"

  bus_name = "eventbridge-producer"
}
```

For now, we only specify the name of the bus that the pipe will forward events to.

3. Set up the role for EventBridge Pipes

We'll also configure an IAM role for EventBridge Pipes, granting it the permissions it needs to read from the DynamoDB stream and publish to the event bus:

```hcl
# Permissions the pipe needs: read from the DynamoDB stream, publish to the event bus
resource "aws_iam_policy" "policy" {
  name = "pipe-policy"

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action = [
          "dynamodb:DescribeStream",
          "dynamodb:GetRecords",
          "dynamodb:GetShardIterator",
          "dynamodb:ListStreams",
          "events:PutEvents"
        ]
        Effect   = "Allow"
        Resource = "*"
      },
    ]
  })
}

# Role that the EventBridge Pipes service is allowed to assume
resource "aws_iam_role" "pipe_role" {
  name        = "pipe-role"
  description = "Role for pipe"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action = "sts:AssumeRole"
        Effect = "Allow"
        Sid    = ""
        Principal = {
          Service = "pipes.amazonaws.com"
        }
      },
    ]
  })
}

resource "aws_iam_role_policy_attachment" "policy_attachment" {
  role       = aws_iam_role.pipe_role.name
  policy_arn = aws_iam_policy.policy.arn
}
```

This allows EventBridge Pipes to assume a role that can read records from the DynamoDB stream and put events on the EventBridge event bus. For brevity, the policy applies to all resources (Resource = "*").
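A tighter variant of this policy can be sketched with the aws_iam_policy_document data source: it scopes the stream-read actions to this table's stream and events:PutEvents to our bus, while dynamodb:ListStreams stays on "*" because it lists streams rather than acting on a single one. To use it, set policy = data.aws_iam_policy_document.pipe_scoped.json in aws_iam_policy.policy; the data source name pipe_scoped is our own.

```hcl
# Hypothetical least-privilege document for the pipe role
data "aws_iam_policy_document" "pipe_scoped" {
  # Read records from this table's stream only
  statement {
    actions = [
      "dynamodb:DescribeStream",
      "dynamodb:GetRecords",
      "dynamodb:GetShardIterator",
    ]
    resources = [module.dynamodb_table.dynamodb_table_stream_arn]
  }

  # ListStreams lists streams rather than acting on one, so it stays on "*"
  statement {
    actions   = ["dynamodb:ListStreams"]
    resources = ["*"]
  }

  # Publish only to the bus created in step 2
  statement {
    actions   = ["events:PutEvents"]
    resources = [module.eventbridge.eventbridge_bus_arn]
  }
}
```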

4. EventBridge Pipes creation

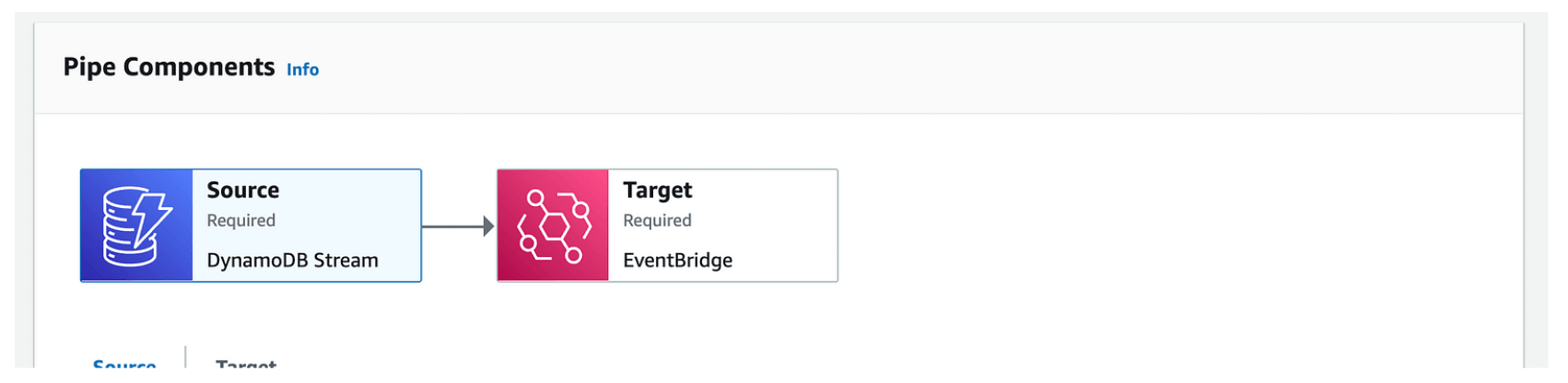

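With the source, the target, and the pipe's IAM role in place, we can finally create the point-to-point connection between them with EventBridge Pipes:

```hcl
locals {
  pipe_name = "example-pipe"
}

resource "aws_pipes_pipe" "example" {
  # Make sure the role has its permissions before the pipe is created
  depends_on = [aws_iam_role_policy_attachment.policy_attachment]

  name     = local.pipe_name
  role_arn = aws_iam_role.pipe_role.arn
  source   = module.dynamodb_table.dynamodb_table_stream_arn
  target   = module.eventbridge.eventbridge_bus_arn

  source_parameters {
    dynamodb_stream_parameters {
      batch_size        = 1        # deliver stream records one at a time
      starting_position = "LATEST" # read only records written after the pipe starts
    }
  }
}
```

In the example above, we create a pipe named example-pipe (defined in local.pipe_name) with the DynamoDB stream as the source and the EventBridge event bus as the target. A batch_size of 1 delivers each stream record individually, and starting_position = "LATEST" makes the pipe read only records written after it starts. After deploying the Terraform code, the pipe appears in the EventBridge Pipes console.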
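To apply the message filter shown at the beginning of this post, the source_parameters block of aws_pipes_pipe.example could be extended with filter_criteria. The pattern is the same JSON, written with jsonencode; with it in place, stream records whose new image has no string message attribute (deletes, for example, have no new image) never reach the bus.

```hcl
# Fragment for aws_pipes_pipe.example: same source settings plus a filter
source_parameters {
  dynamodb_stream_parameters {
    batch_size        = 1
    starting_position = "LATEST"
  }

  # Only pass records whose new image has a string attribute named "message"
  filter_criteria {
    filter {
      pattern = jsonencode({
        dynamodb = {
          NewImage = {
            message = {
              S = [{ exists = true }]
            }
          }
        }
      })
    }
  }
}
```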

5. Create a rule for EventBridge Event Bus

For testing purposes, we extend the EventBridge module from step 2 with a rule that forwards the pipe's events to a CloudWatch log group (the log group name is our own choice):

```hcl
# Log group that receives the events matched by the rule below
resource "aws_cloudwatch_log_group" "all_events" {
  name = "/aws/events/${local.pipe_name}"
}

module "eventbridge" {
  source = "terraform-aws-modules/eventbridge/aws"

  bus_name = "eventbridge-producer"

  rules = {
    all_events = {
      description   = "Capture all events"
      event_pattern = jsonencode({ "source" : ["Pipe ${local.pipe_name}"] })
      enabled       = true
    }
  }

  # Targets are keyed by rule name; each value is a list of targets
  targets = {
    all_events = [
      {
        name = "send-events-to-logs"
        arn  = aws_cloudwatch_log_group.all_events.arn
      }
    ]
  }
}
```

Note that the rule matches events whose source is "Pipe <pipe name>" (here, "Pipe example-pipe"), which is the default source EventBridge Pipes assigns to events it delivers to a bus. This ensures that all events from our pipe are forwarded to CloudWatch Logs.
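For delivery to work, CloudWatch Logs must also allow EventBridge to write to the log group. When a log group target is added in the console this resource policy is typically created for you, but with Terraform it has to be declared. A minimal sketch using aws_cloudwatch_log_resource_policy follows; the data source and policy names are our own choice.

```hcl
# Allow EventBridge to create log streams and write events into the log group
data "aws_iam_policy_document" "eventbridge_logs" {
  statement {
    actions   = ["logs:CreateLogStream", "logs:PutLogEvents"]
    resources = ["${aws_cloudwatch_log_group.all_events.arn}:*"]

    principals {
      type        = "Service"
      identifiers = ["events.amazonaws.com", "delivery.logs.amazonaws.com"]
    }
  }
}

resource "aws_cloudwatch_log_resource_policy" "eventbridge_logs" {
  policy_name     = "eventbridge-to-logs"
  policy_document = data.aws_iam_policy_document.eventbridge_logs.json
}
```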

6. Testing

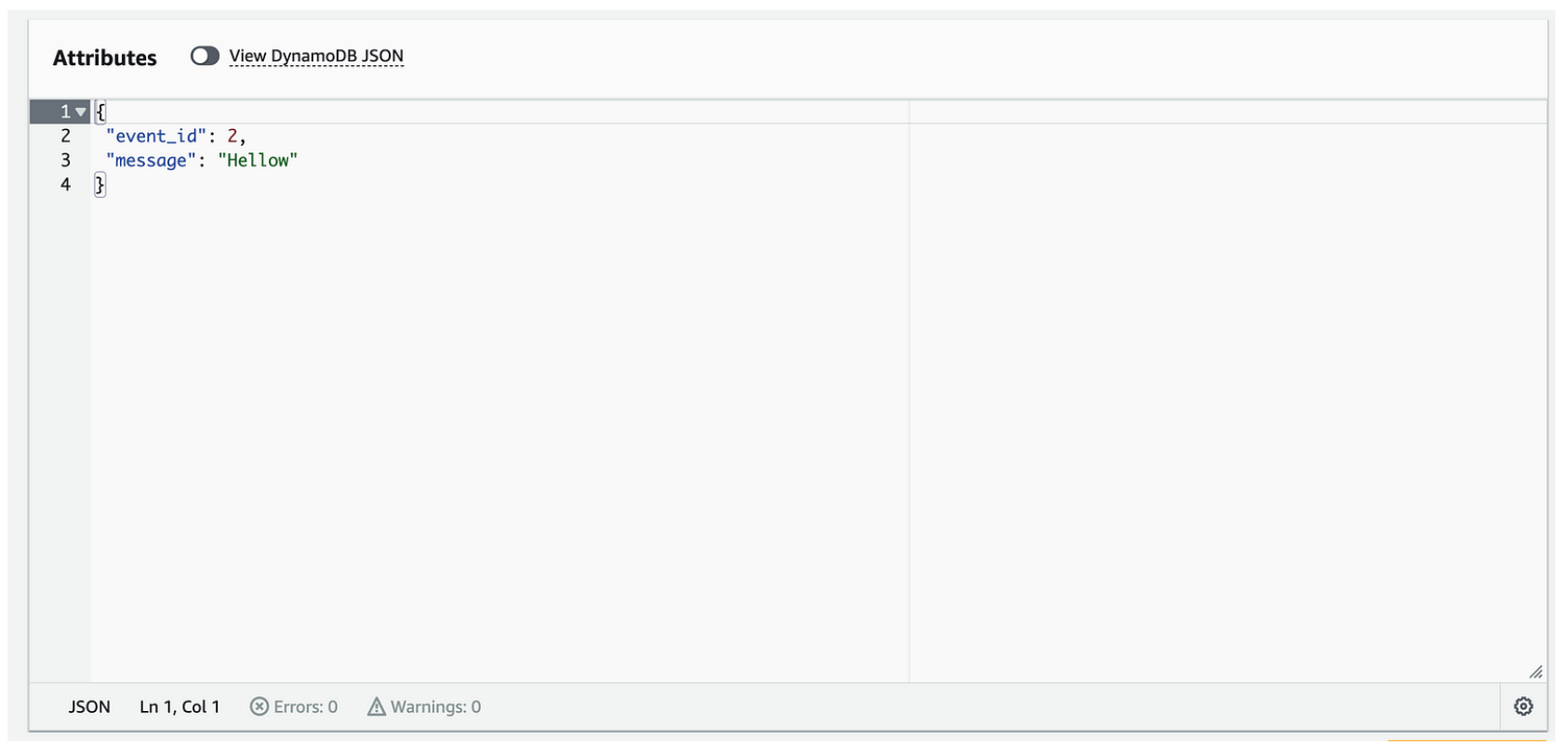

Now we can test our architecture by inserting an item into the DynamoDB table, which generates an INSERT event, as shown below:

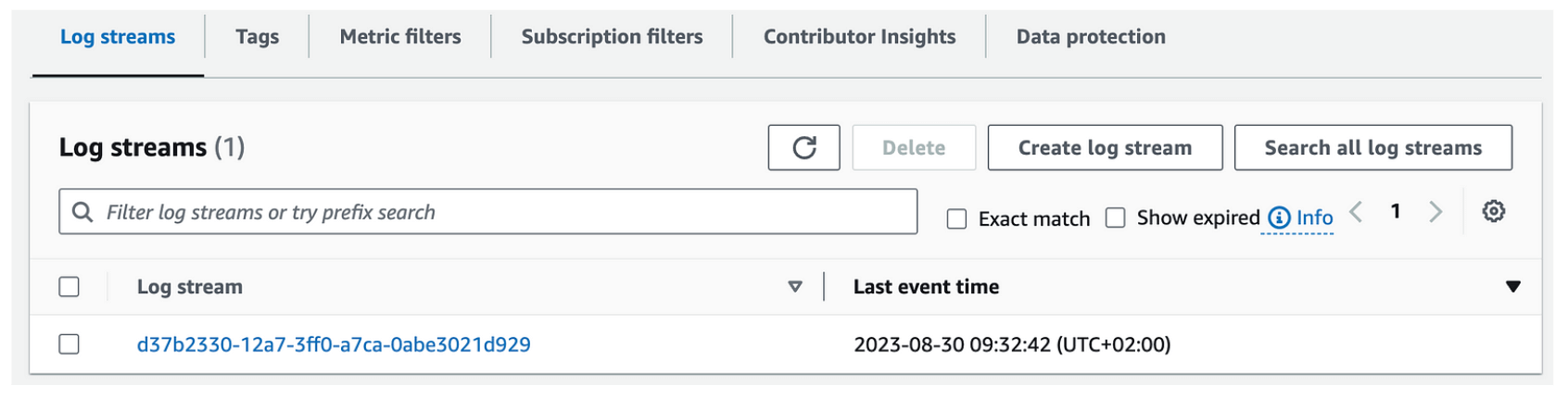
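Instead of using the console, the test item can also be written with Terraform's aws_dynamodb_table_item resource. The key and message values below are hypothetical; dynamodb_table_id is the module output holding the table name.

```hcl
# Hypothetical test item; creating it produces an INSERT record on the stream
resource "aws_dynamodb_table_item" "test_event" {
  table_name = module.dynamodb_table.dynamodb_table_id
  hash_key   = "event_id"

  item = jsonencode({
    event_id = { N = "3" }
    message  = { S = "Hello from Terraform" }
  })
}
```

Destroying this resource deletes the item, which should in turn produce a REMOVE record on the stream.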

After a few seconds, we can see that our event was forwarded to the CloudWatch Log Group:

The log entry will have the following contents:

```json
{
  "version": "0",
  "id": "739f378a-94d8-09b3-6caa-1d34f7925edd",
  "detail-type": "Event from aws:dynamodb",
  "source": "Pipe example-pipe",
  "account": "123456789012",
  "time": "2023-08-30T07:32:42Z",
  "region": "eu-west-1",
  "resources": [],
  "detail": {
    "eventID": "da9338cc8c57306a232d9b87c6333db2",
    "eventName": "INSERT",
    "eventVersion": "1.1",
    "eventSource": "aws:dynamodb",
    "awsRegion": "eu-west-1",
    "dynamodb": {
      "ApproximateCreationDateTime": 1693380762,
      "Keys": {
        "event_id": { "N": "2" }
      },
      "NewImage": {
        "event_id": { "N": "2" },
        "message": { "S": "Hellow" }
      },
      "SequenceNumber": "100000000060890249608",
      "SizeBytes": 33,
      "StreamViewType": "NEW_AND_OLD_IMAGES"
    },
    "eventSourceARN": "arn:aws:dynamodb:eu-west-1:123456789012:table/event-bridge-example/stream/2023-08-30T06:14:20.046"
  }
}
```

The log entry above contains the original DynamoDB stream record (keys, new image, and sequence number) wrapped in an EventBridge envelope that identifies the pipe as its source and carries a timestamp. This information can be useful for data analysis or for replicating data across different data stores.

Conclusion

We’ve dug into the nuts and bolts of setting up a cool event-driven system using Amazon EventBridge Pipes, DynamoDB, and Terraform. From filtering and transforming events on the fly to effortlessly piping them through to where they need to go, this setup pretty much covers all the bases. We walked through some Terraform code to set up DynamoDB and EventBridge, and even showed you how to monitor the whole thing with CloudWatch.

Long story short, if you’re looking to build something scalable and efficient without breaking a sweat, this guide’s got you covered.

Happy building! 🛠️
