An introduction to OpenShift

This is the second blog post in a four-part series aimed at helping IT experts understand how they can leverage the benefits of the OpenShift Container Platform.

In the first blog post I compared OpenShift with Kubernetes and showed the benefits of enterprise-grade solutions in container orchestration.

This, the second blog post, introduces some of the basic OpenShift concepts and architecture components.

The third blog post is about how to deploy the ARO solution in Azure.

The last blog post covers how to use the ARO/OpenShift solution to host applications.

OpenShift Architecture

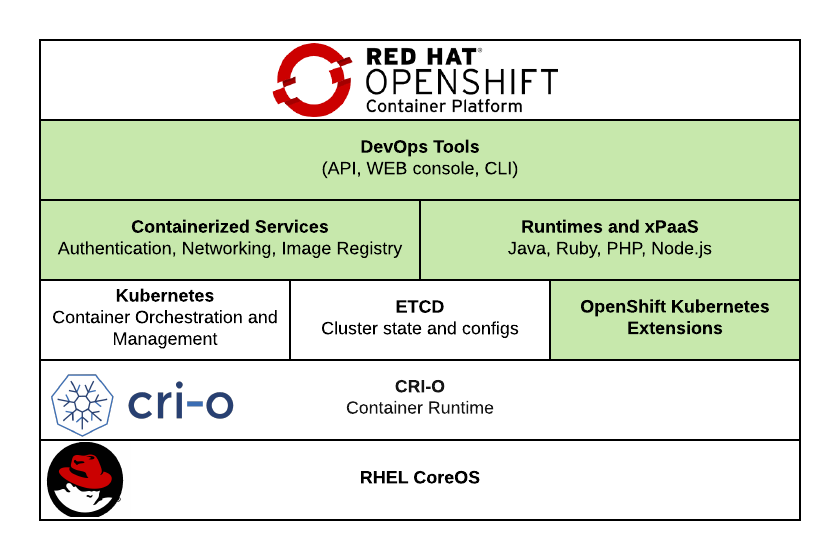

OpenShift is a turn-key, enterprise-grade, secure and reliable containerisation solution built on open source Kubernetes. It adds components that provide, out of the box, self-service, dashboards, automation (CI/CD), a container image registry, multilingual support, and other Kubernetes extensions and enterprise-grade features.

The following diagram depicts the architecture of the OpenShift Container Platform, with the components that Red Hat added or modified highlighted in green.

Figure 1 - OpenShift container platform Architecture (green ones are modified or new architecture components)

RHEL CoreOS – The base operating system is Red Hat Enterprise Linux CoreOS. It is a lightweight RHEL version that provides essential OS features and combines the ease of over-the-air updates from Container Linux with the Red Hat Enterprise Linux kernel for container hosts.

CRI-O – CRI-O is a lightweight Docker alternative. It is an implementation of the Kubernetes Container Runtime Interface (CRI) that enables the use of Open Container Initiative (OCI) compatible runtimes. CRI-O supports OCI container images from any container registry.

Kubernetes – Kubernetes is the de facto industry standard container orchestration engine, managing several hosts (masters and workers) to run containers. Kubernetes resources define how applications are built, operated, managed, etc.

etcd – etcd is a distributed key-value database that stores the configuration and state information of the cluster and its Kubernetes objects.

OpenShift Kubernetes Extensions – OpenShift Kubernetes Extensions are Custom Resource Definitions (CRDs) stored in the Kubernetes etcd database, providing additional functionality compared to a vanilla Kubernetes deployment.

Containerized Services – Most internal features run as containers on Kubernetes. These fulfil the base infrastructure functions such as networking, authentication, etc.

Runtimes and xPaaS – These are ready-to-use base container images and templates for developers: a set of base images for JBoss middleware products such as JBoss EAP and ActiveMQ, and for other languages and databases such as Java, Node.js, PHP, MongoDB and MySQL.

DevOps Tools – The REST API provides the main point of interaction with the platform. The web UI, the CLI or third-party CI/CD tools can connect to this API and allow end users to interact with the platform.

With the architecture components described above, the OpenShift platform provides automated development workflows, allowing developers to concentrate on business outcomes rather than learning about Kubernetes or containerization in detail.

Main OpenShift components

OpenShift Nodes

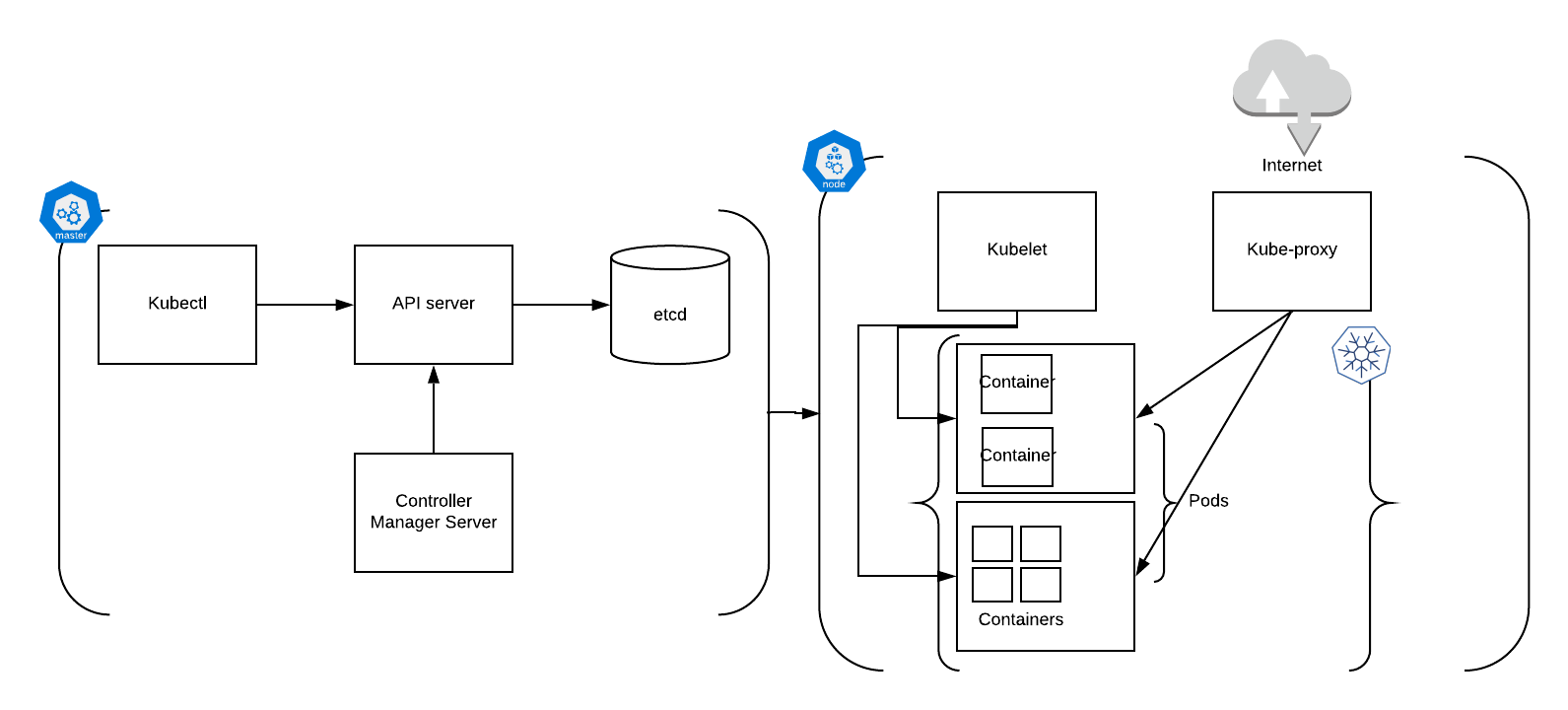

Similar to vanilla Kubernetes, OpenShift distinguishes between two node types: cluster masters and cluster workers.

Cluster Masters

Cluster Masters run the services required to control the OpenShift cluster, such as the API Server, etcd and the Controller Manager Server.

The API Server validates and configures Kubernetes objects.

The etcd database stores the object configuration information and state.

The Controller Manager Server watches the etcd database for changes and enforces those through the API server on the Kubernetes objects.

Kubelet is the service which manages requests related to local containers on the masters.

CRI-O and Kubelet run as systemd-managed services.
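
The control loop described above can be illustrated with a toy model. The sketch below is not OpenShift code: plain dictionaries stand in for the state held in etcd, and `reconcile` and `Action` are hypothetical names, chosen only to show how desired and observed state are compared to decide what must change.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Action:
    kind: str   # "create" or "delete"
    name: str   # object the action applies to
    count: int  # how many replicas to add or remove


def reconcile(desired: dict[str, int], observed: dict[str, int]) -> list[Action]:
    """Return the actions needed to move `observed` towards `desired`."""
    actions = []
    for name in sorted(set(desired) | set(observed)):
        want = desired.get(name, 0)
        have = observed.get(name, 0)
        if want > have:
            actions.append(Action("create", name, want - have))
        elif want < have:
            actions.append(Action("delete", name, have - want))
    return actions


# Toy stand-in for the state stored in etcd (all names are made up).
desired_state = {"frontend": 3, "backend": 2}
observed_state = {"frontend": 1, "backend": 2, "legacy": 1}

for action in reconcile(desired_state, observed_state):
    print(action)
```

In this model the loop asks for two more `frontend` replicas and removes the `legacy` one, while `backend` is left alone because it already matches the desired state.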

Cluster Workers

Cluster Workers run three main services: the Kubelet, Kube-Proxy and the container runtime CRI-O. Workers are grouped into MachineSet custom resources.

Kubelet is the service which accepts the requests coming from the Controller Manager Server and implements changes in resources, deploying or destroying resources as requested.

Kube-Proxy manages communication to the Pods, and across worker nodes.

CRI-O is the container runtime.

Similar to vanilla Kubernetes, the smallest object in an OpenShift cluster is the Pod.

MachineSets are custom resources that group nodes, such as worker nodes, to manage autoscaling and the running of Kubernetes compute resources (Pods).

High Availability is built into the platform by running control plane services on multiple masters and running application resources in ReplicaSets behind Services on worker nodes.

Operators

Operators are the preferred method of managing services on the OpenShift control plane. Operators integrate with Kubernetes APIs and CLI tools, performing health checks, managing updates, and ensuring that the service/application remains in a specified state.

Platform operators

Platform operators include critical networking, monitoring and credential services. They are responsible for managing services related to the entire OpenShift platform, and they provide an API that allows administrators to configure these components.

Application operators

Application-related operators are managed by the Operator Lifecycle Manager (OLM). These operators are either Red Hat Operators or Certified Operators from third parties and can be used to manage specific application workloads on the clusters.

Projects

Projects are custom resources used in OpenShift to group Kubernetes resources and to provide access for users based on these groupings. Projects can also receive quotas to limit the available resources, number of pods, volumes etc. A project allows a team to organize and manage their workloads in isolation from other teams.
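
To make the quota idea concrete, here is a minimal sketch of a project that refuses new pods once its limit is reached. The `Project` class and its `admit` method are illustrative assumptions, not part of any OpenShift API; real quotas cover more resource types than a simple pod count.

```python
from dataclasses import dataclass, field


@dataclass
class Project:
    name: str
    pod_quota: int                            # maximum number of pods allowed
    pods: list = field(default_factory=list)  # pods currently admitted

    def admit(self, pod_name: str) -> bool:
        """Accept the pod only if the project stays within its quota."""
        if len(self.pods) >= self.pod_quota:
            return False
        self.pods.append(pod_name)
        return True


team_a = Project(name="team-a", pod_quota=2)
results = [team_a.admit(f"web-{i}") for i in range(3)]
print(results)  # the third pod is rejected
```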

Networking

OpenShift uses Service, Ingress and Route resources to manage network communication between pods and to route traffic to the pods from sources outside the cluster.

A Service resource exposes a single IP address and load balances traffic between the pods sitting behind it within the cluster.

A Route resource provides a DNS record, making the service available to sources outside the cluster.

The Ingress Operator implements an ingress controller API and enables external access to services running on the OpenShift Container Platform.
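
The relationship between routes, services and pods can be sketched as a small lookup chain. The classes, host name and IP addresses below are invented for illustration, and round-robin is used only because it is simple; the balancing strategy of a real cluster depends on its configuration.

```python
from itertools import cycle


class Service:
    """Single stable entry point that rotates over its pod endpoints."""

    def __init__(self, name, pod_ips):
        self.name = name
        self._endpoints = cycle(pod_ips)

    def pick_endpoint(self):
        return next(self._endpoints)


class Router:
    """Maps external host names (routes) to services."""

    def __init__(self):
        self._routes = {}

    def add_route(self, host, service):
        self._routes[host] = service

    def forward(self, host):
        service = self._routes.get(host)
        if service is None:
            return None  # no route defined for this host
        return service.pick_endpoint()


web = Service("web", ["10.128.0.11", "10.128.0.12"])
router = Router()
router.add_route("web.apps.example.com", web)

targets = [router.forward("web.apps.example.com") for _ in range(3)]
print(targets)
```

External callers only ever see the host name; which pod answers is decided behind the service.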

Service Mesh

OpenShift Service Mesh provides operational control over the service mesh functionality and a way to connect, secure and monitor microservice applications running on the platform. It is based on the Istio project and uses a mesh of Envoy proxies that transparently provide discovery, load balancing, service-to-service authentication, failure recovery, metrics, and monitoring. The solution also provides A/B testing, canary releases, rate limiting, access control, and end-to-end authentication.

Logging

An integrated Elasticsearch, Fluentd, and Kibana (EFK) stack provides the cluster-wide logging functionality. Fluentd is deployed to each node and collects all node and container logs, writing them to Elasticsearch. Kibana is the visualization tool where developers and administrators can create dashboards.

Monitoring

OpenShift has an integrated pre-installed monitoring solution based on the wider Prometheus ecosystem. It monitors cluster components and alerts cluster administrators about issues. It uses Grafana for visualization with dashboards.

Metering

Metering focuses on in-cluster metric data using Prometheus as a default source of information. Metering enables users to do reporting on namespaces, pods and other Kubernetes resources. It allows the generation of Reports with periodic ETL jobs using SQL queries.
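
As a rough illustration of SQL-based reporting, the sketch below aggregates per-pod usage into a per-namespace report. It uses Python's built-in `sqlite3` module as a stand-in; the `pod_usage` table, its columns and the sample figures are assumptions made for the example and do not reflect the schema Metering actually uses.

```python
import sqlite3

# In-memory database standing in for the metering data store.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE pod_usage (namespace TEXT, pod TEXT, cpu_core_seconds REAL)"
)
conn.executemany(
    "INSERT INTO pod_usage VALUES (?, ?, ?)",
    [
        ("team-a", "web-1", 120.0),
        ("team-a", "web-2", 80.0),
        ("team-b", "api-1", 50.0),
    ],
)

# Report: total CPU usage per namespace.
report = conn.execute(
    "SELECT namespace, SUM(cpu_core_seconds) FROM pod_usage "
    "GROUP BY namespace ORDER BY namespace"
).fetchall()
print(report)
```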

Serverless

With OpenShift Serverless, developers can use Kubernetes native APIs, as well as familiar languages and frameworks, to deploy applications and container workloads. OpenShift Serverless is based on the open source Knative project, providing portability and consistency across hybrid and multi-cloud environments.

Container-native virtualization

Container-native virtualization allows administrators and developers to run and manage virtual machine workloads alongside container workloads. It allows the platform to create and manage Linux and Windows virtual machines, and to import and clone existing virtual machines. It also supports live migration of virtual machines between nodes.

Automation, CI/CD

OpenShift comes with integrated features such as Source-to-Image (S2I) and Image Streams to help developers roll out changes to their applications much quicker than in a vanilla Kubernetes environment.

Docker build

The Docker build strategy allows developers with Docker containerisation knowledge to define their own Dockerfile-based image builds. It expects a repository with a Dockerfile and all required artefacts.

Source-to-Image

Source-to-Image can pull code from a repository, detect the necessary runtime, then build and start the image required to run that specific code in a Pod. If the image builds successfully, it is uploaded to the OpenShift internal image registry and the Pod can be deployed on the platform. External tools can be used to implement some of the CI features and extend the OpenShift CI/CD functionality, for example with tests.
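
The runtime detection step can be pictured as a lookup on well-known files in the repository. The sketch below is a simplification under stated assumptions: the `MARKERS` table and the `detect_builder` function are hypothetical, and the actual detection rules used by the platform are more extensive.

```python
# Hypothetical mapping from marker files to builder runtimes.
MARKERS = {
    "pom.xml": "java",
    "package.json": "nodejs",
    "requirements.txt": "python",
    "composer.json": "php",
}


def detect_builder(repo_files):
    """Return the builder for the first marker file found, else None."""
    for marker, builder in MARKERS.items():
        if marker in repo_files:
            return builder
    return None


print(detect_builder(["README.md", "package.json", "server.js"]))
```

A repository with no recognised marker returns `None`, which is the point where a developer would have to name the builder image explicitly.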

Image streams

Image streams can be used to detect changes in application code or source images and to trigger a Pod rebuild or redeploy that implements the changes. An image stream groups container images marked by tags and can manage the related container lifecycle accordingly. Image streams can also automatically update a deployment when a new base image has been released onto the platform.
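
The trigger behaviour can be sketched as a tag table with change callbacks. This is a toy model, not the OpenShift implementation: the `ImageStream` class, its methods and the digests are invented for the example.

```python
class ImageStream:
    """Tracks which image digest each tag points to."""

    def __init__(self, name):
        self.name = name
        self.tags = {}      # tag -> image digest
        self.triggers = []  # callbacks run when a tag changes

    def on_change(self, callback):
        self.triggers.append(callback)

    def tag(self, tag, digest):
        if self.tags.get(tag) == digest:
            return False    # nothing changed, no redeploy needed
        self.tags[tag] = digest
        for callback in self.triggers:
            callback(self.name, tag, digest)
        return True


redeploys = []
stream = ImageStream("myapp")
stream.on_change(
    lambda name, tag, digest: redeploys.append(f"{name}:{tag}@{digest}")
)

stream.tag("latest", "sha256:aaa")
stream.tag("latest", "sha256:aaa")  # same digest, ignored
stream.tag("latest", "sha256:bbb")
print(redeploys)
```

Only the first and third calls trigger a redeploy, because the second one points the tag at an image it already references.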

OpenShift Pipelines

With OpenShift Pipelines, developers and cluster administrators can automate the processes of building, testing and deploying application code to the platform. A consistent, automated process minimizes human error. A pipeline could include compiling code, unit tests, code analysis, security checks, installer creation, container build and deployment. Tekton-based pipeline definitions use Kubernetes CRDs (Custom Resource Definitions) and the control plane to run pipeline tasks, and can be integrated with Jenkins, Knative and others. In OpenShift Pipelines each pipeline step runs in its own container, allowing it to scale independently.
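
The idea of a pipeline as an ordered list of tasks that stops at the first failure can be sketched in a few lines. This is a conceptual model only: `run_pipeline` and the task names are made up, and a real Tekton pipeline is declared as Kubernetes resources with each step running in its own container.

```python
def run_pipeline(tasks):
    """Run (name, callable) tasks in order; stop at the first failure."""
    completed = []
    for name, task in tasks:
        if not task():
            return completed, name  # name of the task that failed
        completed.append(name)
    return completed, None


pipeline = [
    ("build", lambda: True),
    ("unit-tests", lambda: True),
    ("code-analysis", lambda: False),  # simulated failure
    ("deploy", lambda: True),
]

completed, failed = run_pipeline(pipeline)
print(completed, failed)
```

Because the simulated code analysis fails, the deploy task never runs, which is the consistency guarantee an automated pipeline is meant to give.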

These are the main components and features of OpenShift which help developers and cluster administrators deliver value to their company and users faster and more easily.

In the next blog post, I will walk through a step-by-step Azure Red Hat OpenShift (ARO) deployment.
