Introducing Google Coral Edge TPU – a New Machine Learning ASIC from Google

The Google Coral Edge TPU is a new machine learning ASIC from Google. It runs TensorFlow Lite model inference quickly and with low power consumption. We take a quick look at the Coral Dev Board, which includes the TPU chip and is available in online stores now.

Overview

Google Coral is a general-purpose machine learning platform for edge applications. It can execute TensorFlow Lite models that have been trained in the cloud. The Dev Board runs Mendel Linux, Google’s own flavor of Debian.

Object detection is a typical application for Google Coral. If you have a pre-trained machine learning model that detects objects in video streams, you can deploy your model to the Coral Edge TPU and use a local video camera as the input. The TPU will start detecting objects locally, without having to stream the video to the cloud.

The Coral Edge TPU chip is available in several packages. You probably want to buy the standalone Dev Board, which includes the System-on-Module (SoM) and is easy to use for development. Alternatively, you can buy a separate TPU accelerator device, which connects to a PC through a USB, PCIe or M.2 connector. The System-on-Module is also available separately for integrating into custom hardware.

Comparing with AWS DeepLens

Google Coral is in many ways similar to AWS DeepLens. The main difference from a developer’s perspective is that DeepLens integrates with the AWS cloud. You manage your DeepLens devices and deploy your machine learning models using the AWS Console.

Google Coral, on the other hand, is a standalone edge device that doesn’t need a connection to the Google Cloud. In fact, setting up the development board requires some low-level operations, such as connecting to a USB serial port and installing firmware.

DeepLens devices are physically consumer-grade plastic boxes and they include fixed video cameras. DeepLens is intended to be used by developers at an office, not integrated into custom products.

Google Coral’s System-on-Module, in contrast, packs the entire system in a 40x48 mm module. That includes all the processing units, networking features, connectors, 1GB of RAM and an 8GB eMMC where the operating system is installed. If you want to build a custom hardware solution, you can build it around the Coral SoM.

The Coral Development Board

To get started with Google Coral, you should buy a Dev Board for about $150. The board is similar to Raspberry Pi devices. Once you have set up the board, it only requires a power source and a WiFi connection to operate.

Here are a couple of hints for installing the board for the first time.

- Carefully read the instructions at https://coral.ai/docs/dev-board/get-started/. They take you through all the details of how to use the three different USB ports on the device and how to install the firmware.

- You can use a Mac or a Linux computer but Windows won’t work. The firmware installation is based on a bash script and it also requires some special serial port drivers. They might work in Windows Subsystem for Linux, but using a Mac or a Linux PC is much easier.

- If the USB port doesn’t seem to work, check that you aren’t using a charge-only USB cable. With a proper cable the virtual serial port device will appear on your computer.

- The MDT tool (Mendel Development Tool) didn’t work for us. Instead, we had to use the serial port to log in to the Linux system and set up SSH manually.

- The default username/password of Mendel Linux is mendel/mendel. You can use those credentials to log in through the serial port, but the password doesn’t work through SSH. You’ll need to add your public key to .ssh/authorized_keys.

- You can set up a WiFi network so you won’t need an Ethernet cable. The getting started guide has instructions for this.
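
The manual SSH key step can be scripted. The following is a minimal sketch in Python, assuming you run it on the board through the serial console and that Python 3 is available there. The function name add_authorized_key is our own and not part of any Coral tooling. It appends one public key to .ssh/authorized_keys and tightens the permissions, since sshd with its default settings may reject keys stored in files that other users can write to.

```python
import os
from pathlib import Path


def add_authorized_key(public_key: str, home: Path) -> Path:
    """Append public_key to <home>/.ssh/authorized_keys unless already present.

    Hypothetical helper for illustration; not part of Mendel Linux or MDT.
    """
    key = public_key.strip()
    if not key or "\n" in key:
        raise ValueError("expected exactly one public key line")

    ssh_dir = home / ".ssh"
    ssh_dir.mkdir(mode=0o700, exist_ok=True)
    os.chmod(ssh_dir, 0o700)  # only the owner may access the directory

    auth_file = ssh_dir / "authorized_keys"
    text = auth_file.read_text() if auth_file.exists() else ""
    if key not in text.splitlines():
        with auth_file.open("a") as f:
            # Make sure the new key starts on its own line.
            if text and not text.endswith("\n"):
                f.write("\n")
            f.write(key + "\n")
    os.chmod(auth_file, 0o600)  # only the owner may read or write the file
    return auth_file
```

On the board you would call it with the contents of your own public key file, for example add_authorized_key("ssh-ed25519 AAAA... you@laptop", Path.home()), where the key string is a placeholder.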

Once you have a working development board, you might want to take a look at Model Play. It’s an Android application that lets you deploy machine learning models from the cloud to the Coral development board.

Model Play has a separate server installation guide at https://model.gravitylink.com/doc/guide.html. The server must be installed on the Coral development board before you can connect your smartphone to it. You also need to know the local IP address of the development board on your network.
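
If you are logged in to the board over the serial port, one way to find its local IP address is a short Python snippet. This is a sketch, not something from the Coral or Model Play documentation: the function name local_ip_address and the probe address 8.8.8.8 are our own choices. Connecting a UDP socket does not send any packets. It only asks the operating system which network interface it would use.

```python
import socket


def local_ip_address(probe_host: str = "8.8.8.8", probe_port: int = 80) -> str:
    """Return the IPv4 address of the interface used for outbound traffic."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # connect() on a UDP socket only selects a route; nothing is sent.
        sock.connect((probe_host, probe_port))
        return sock.getsockname()[0]
    except OSError:
        # No route available, for example when WiFi is not configured yet.
        return "127.0.0.1"
    finally:
        sock.close()
```

Calling print(local_ip_address()) on the board prints the address to enter in the smartphone application. A result of 127.0.0.1 means the board has no network route yet.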

Running Machine Learning Models

Let’s assume you now have a working Coral development board. You can connect to it from your computer with SSH and from your smartphone with the Model Play application.

The getting started guide has instructions for trying out the built-in demonstration application called edgetpu_demo. This application will work without a video camera. It uses a recorded video stream to perform real-time object recognition to detect cars in the video. You can see the output in your web browser.

You can also try out some TensorFlow Lite models through the SSH connection. If you have your own models, check out the documentation on how to make them compatible with the Coral Edge TPU at https://coral.ai/docs/edgetpu/models-intro/.
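
As a rough illustration of what running a model over SSH looks like, here is a minimal Python sketch that uses the tflite_runtime package with the Edge TPU delegate. It assumes that tflite_runtime and the Edge TPU runtime library (libedgetpu.so.1 on Linux) are installed on the board, and that the model has already been compiled for the Edge TPU. The helper names make_interpreter, classify and top_k are our own, and preparing the input data to match the model's input shape and type is left out.

```python
from typing import List, Sequence, Tuple

EDGETPU_LIB = "libedgetpu.so.1"  # Edge TPU runtime library name on Linux


def make_interpreter(model_path: str):
    """Create a TensorFlow Lite interpreter that delegates to the Edge TPU."""
    # Imported lazily so that top_k() also works where the runtime is missing.
    from tflite_runtime.interpreter import Interpreter, load_delegate

    interpreter = Interpreter(
        model_path=model_path,
        experimental_delegates=[load_delegate(EDGETPU_LIB)],
    )
    interpreter.allocate_tensors()
    return interpreter


def classify(interpreter, input_data) -> List[float]:
    """Run one inference and return the flattened output scores.

    input_data must already match the model's input shape and dtype.
    """
    input_index = interpreter.get_input_details()[0]["index"]
    output_index = interpreter.get_output_details()[0]["index"]
    interpreter.set_tensor(input_index, input_data)
    interpreter.invoke()
    return interpreter.get_tensor(output_index).flatten().tolist()


def top_k(scores: Sequence[float], k: int = 3) -> List[Tuple[int, float]]:
    """Return the k highest (class_index, score) pairs, best first."""
    ranked = sorted(enumerate(scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]
```

A typical call sequence would be interpreter = make_interpreter("model_edgetpu.tflite"), where the file name is a placeholder, followed by top_k(classify(interpreter, input_data)). Many quantized models output integer scores, so treat the values as relative rankings unless you apply the model's output scaling.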

If you just want to play around with existing models, the Model Play application makes it very easy. Pick one of the provided models and tap the Free button to download it to your device. Then tap the Run button to execute it.

Connecting a Video Camera and Sensors

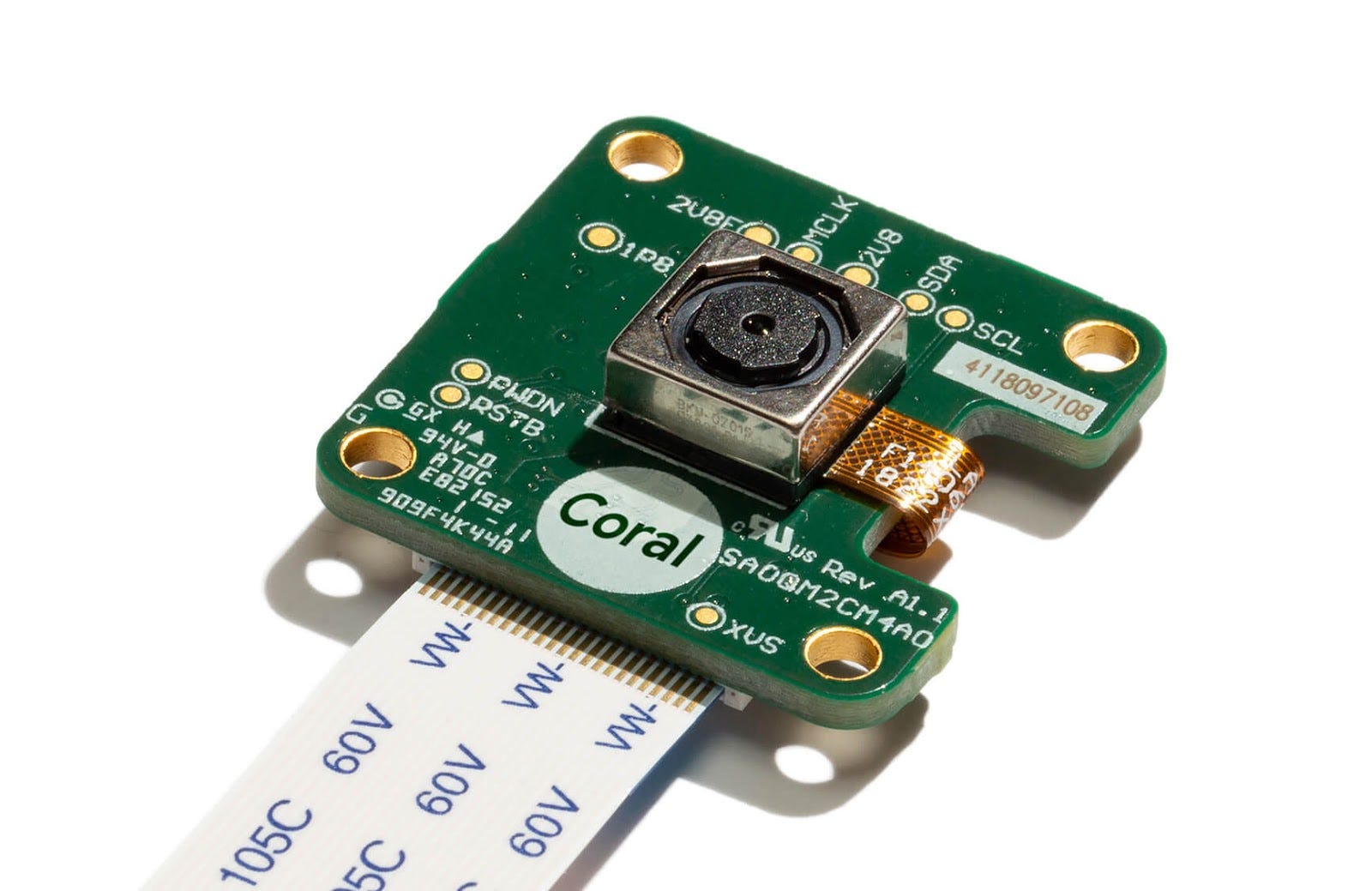

If you buy the Coral development board, make sure to also get the Video Camera and Sensor accessories for about $50 extra. They will let you apply your machine learning models to something more interesting than static video files.

Alternatively, you can use a UVC-compatible USB camera. Check the instructions at https://coral.ai/docs/dev-board/camera/#connect-a-usb-camera for details. You can use an HDMI monitor to view the output.

Future of the Edge

Google has partnered with Gravitylink for Coral product distribution. They also make the Model Play application that offers the Coral demos mentioned in this article. Gravitylink is trying to make machine learning fun and easy with simple user interfaces and a directory of pre-trained models.

Once you start developing more serious edge computing applications, you will need to think about issues like remote management and application deployment. At this point it is still unclear whether Google will integrate Coral and Mendel Linux with the Google Cloud Platform. This would involve device authentication, operating system updates and application deployments.

If you start building on Coral right now, you’ll most likely need a custom management solution. We at Nordcloud develop cloud-based management solutions for technologies like AWS Greengrass, AWS IoT and Docker. Feel free to contact us if you need a hand.
