Leveraging Azure Bicep for air-gapped deployment of SonarQube

In this article, I'm going to explain how to use Azure Bicep to provision scalable and resilient infrastructure for third-party software in Azure.

But first, let me tell you a bit about the project I've been working on. These days, planning, building, testing, releasing, deploying and operating software without security considerations is risky business:

- Teams move fast, sometimes taking shortcuts and leaving code smells and technical debt behind

- Open-source packages are in almost everything engineers use when building a product from reusable blocks

- Multiple teams work on different parts of a product or platform that has to be tested end to end

- Infrastructure as code is an integral part of each cloud environment, and it has to be safe, compliant and aligned with the organization's requirements.

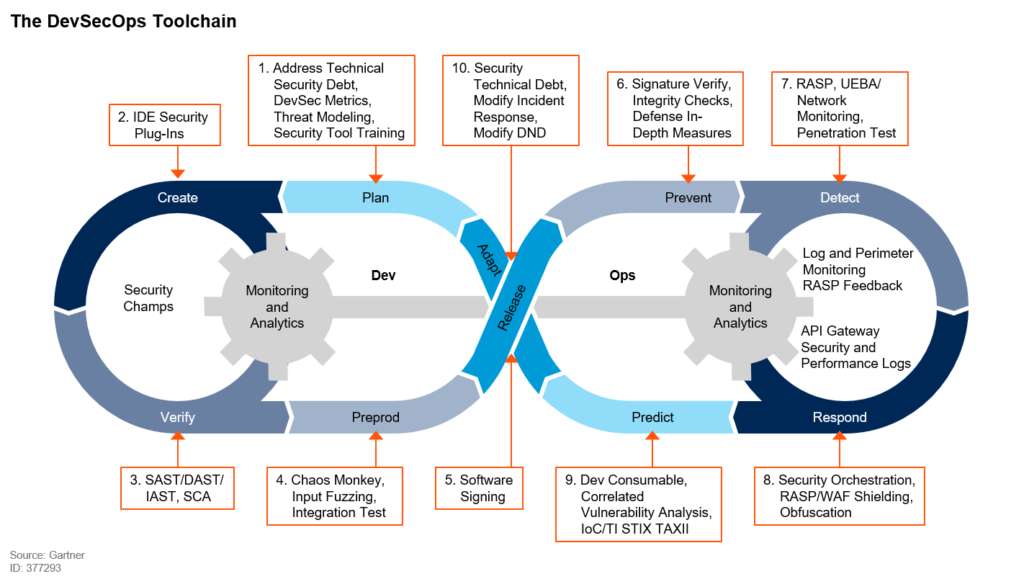

DevSecOps

The best thing that can happen to DevOps is its evolution to DevSecOps.

At Nordcloud, we follow Gartner’s DevSecOps model. This means we encourage testing and scanning in every phase of the Software Development Life Cycle (SDLC), a shift-left approach (implementing security as early as possible), security by design, a security champions programme, and more.

The DevSecOps Toolchain

On one of our projects, we were helping to improve the quality and security of source code. This typically falls on the "shoulders" of Static Application Security Testing (SAST) tools, which help engineers identify well-known vulnerabilities (for example SQL injection flaws and buffer overflows), security issues and bugs, and highlight the most critical security risks such as those in the OWASP Top Ten.

We identified the risks together with the client, evaluated the market and, based on the code base, chose Sonar’s solution, since it gave us everything we wanted and integrated into the existing processes with ease.

Developers were happy with Azure DevOps, and most of the deployment targets were in Azure. Since it was a relatively new environment, the client chose Bicep over ARM templates. We ended up with self-hosted SonarQube and agreed to integrate it into their way of working.

SonarQube explained

Sonar is an industry-leading solution that enables developers and development teams to write clean code and remediate existing code organically, so they can focus on the work they love and maximize the value they generate for the business.

It offers:

- SonarLint: a free IDE plugin that can be installed from the IDE's marketplace (e.g. SonarLint for Visual Studio Code) and enables a shift-left approach

- SonarCloud: a SaaS offering for code quality and security

- SonarQube: a self-hosted solution for the same purpose (without getting into details, read it as: SonarQube offers the same functionality as SonarCloud, but self-hosted).

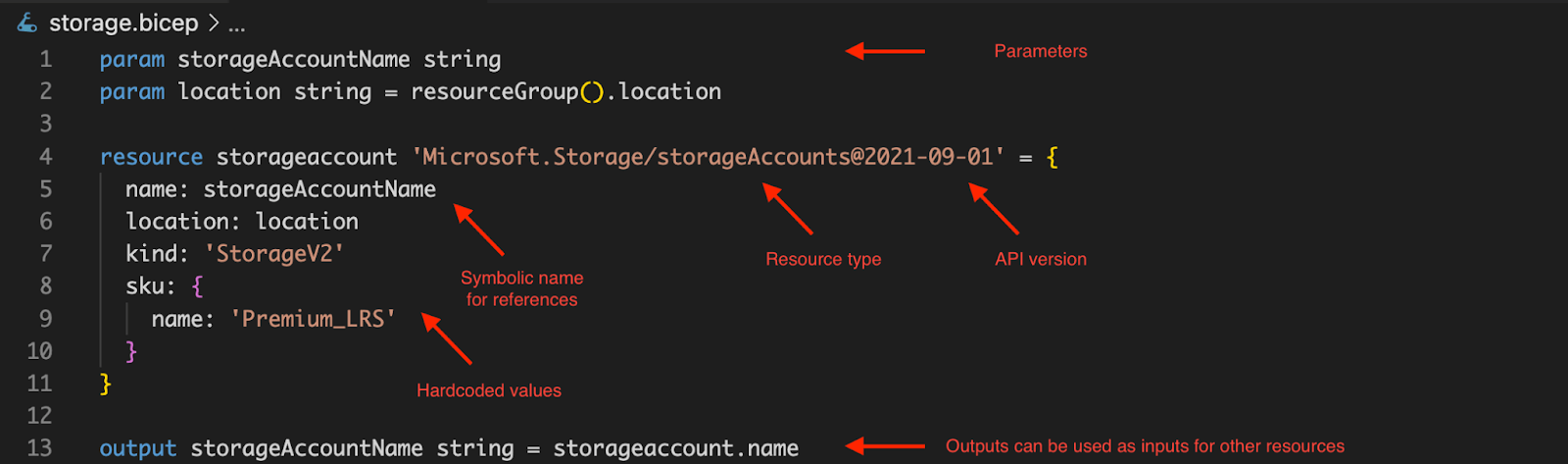

Azure Bicep explained

Bicep is a domain-specific language (DSL) that uses declarative syntax to deploy Azure resources. In a Bicep file, you define the infrastructure you want to deploy to Azure, and then use that file throughout the development lifecycle to repeatedly deploy your infrastructure. Your resources are then deployed in a consistent manner.

While most of my colleagues prefer Terraform, I believe that Microsoft’s new IaC language offers some advantages:

- No state hassle (state is stored in Azure Resource Manager), meaning that I can start deploying things to Azure without even having a subscription. Of course, someone will argue and offer Terraform Cloud or another backend, but I still believe this is beneficial when you start with nothing and need to provision resources, for instance when building landing zones and setting up core elements such as management groups, policies and RBAC.

- Bicep is a first-class citizen with immediate support for everything available in Azure (e.g. preview resources and features, the latest ARM API versions), while with Terraform this can take a while (we’ve bumped into this on several projects and had to come up with some "creative" ideas to overcome it).

- Most people I showed Bicep code to agreed that it is similar to Terraform but much simpler than ARM templates, whose many indents, curly brackets and other “punctuation marks” quite often lead to fatal syntax errors and force you to troubleshoot a template for ages, even with IDE linting (at least this is my personal experience).
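To illustrate the first point, here is a minimal sketch of a deployment that needs no subscription at all. It targets the tenant scope and creates a management group; the parameter names and the default display name are hypothetical and not taken from the project.

```bicep
// Deploy at tenant scope: no subscription or resource group is required.
targetScope = 'tenant'

@description('Hypothetical ID of the management group to create.')
param managementGroupId string

@description('Hypothetical display name shown in the portal.')
param managementGroupDisplayName string = 'Landing Zones'

// Without an explicit parent, the group is created under the tenant root group.
resource landingZonesGroup 'Microsoft.Management/managementGroups@2021-04-01' = {
  name: managementGroupId
  properties: {
    displayName: managementGroupDisplayName
  }
}

output managementGroupResourceId string = landingZonesGroup.id
```

A file like this would typically be deployed with `az deployment tenant create`, which requires sufficient permissions at the tenant root.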

An example of Bicep file and its structure:
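A minimal sketch of the typical building blocks (parameters, variables, resources and outputs) could look like this. The registry and the names used here are illustrative assumptions rather than an excerpt from the project template.

```bicep
// 1. Parameters: values supplied at deployment time.
@description('Azure region for all resources.')
param location string = resourceGroup().location

@minLength(5)
@maxLength(50)
@description('Globally unique registry name (hypothetical parameter).')
param acrName string

// 2. Variables: values computed once and reused in the file.
var tags = {
  workload: 'sonarqube'
}

// 3. Resources: symbolic name, resource type and API version, then the body.
resource containerRegistry 'Microsoft.ContainerRegistry/registries@2021-06-01-preview' = {
  name: acrName
  location: location
  tags: tags
  sku: {
    name: 'Basic'
  }
  properties: {
    adminUserEnabled: false
  }
}

// 4. Outputs: values returned to the caller after the deployment.
output loginServer string = containerRegistry.properties.loginServer
```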

To follow everything below I recommend to check and get familiar with:

Architecture and implementation

Since we did not anticipate a lot of concurrent scans from day one, we wanted to start with something small that is easy to maintain and operate, while keeping the ability to scale on demand without redoing too much.

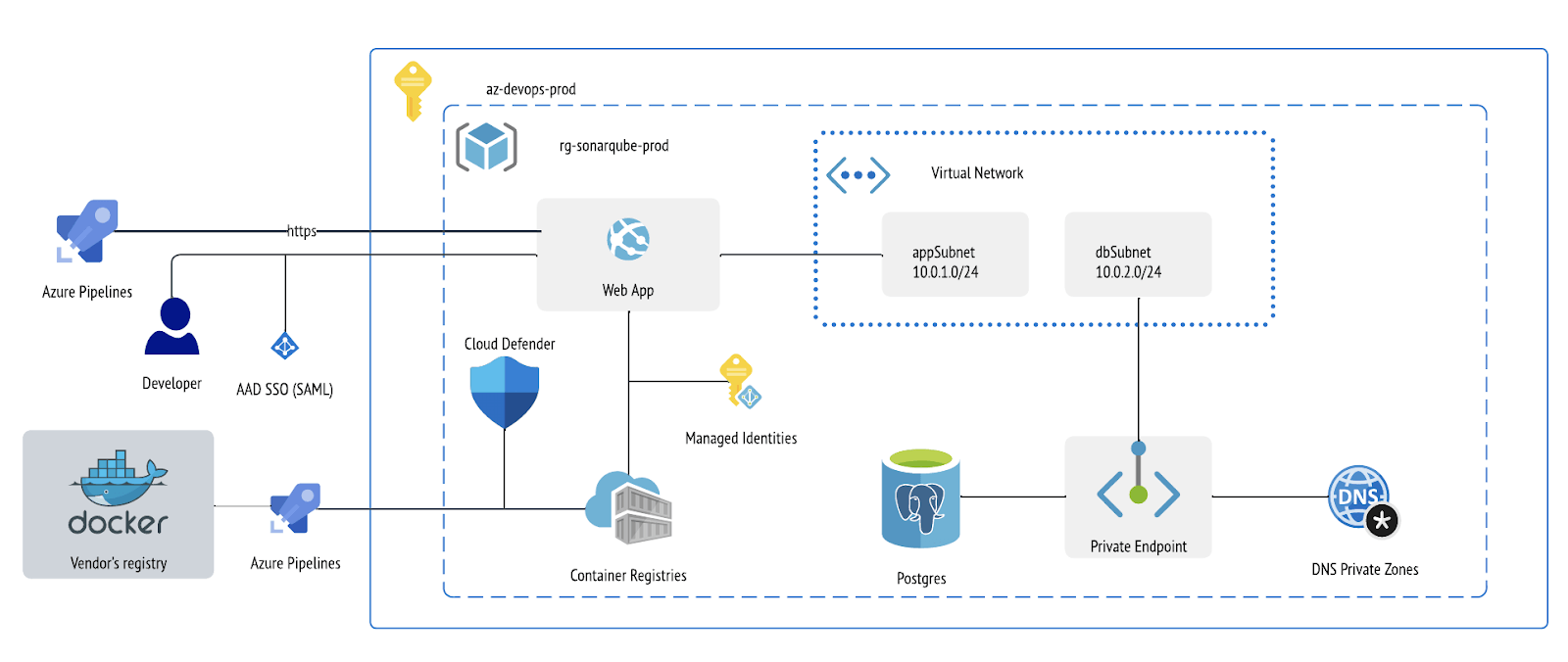

After reviewing the platform requirements and product architecture, we first came up with a single-VM approach with docker-compose, but dropped the idea after a while and replaced it with the design shown in the diagram (managed services, platform as a service).

SonarQube has the following components:

- Server (runs the GUI, Elasticsearch and the compute engine)

- Database that stores metrics and issues (basically the results of source code scans) and instance configuration (e.g. project settings, quality gates, quality profiles etc.)

- Scanners (run either on Azure DevOps agents or locally by developers)

Keeping the maintenance and operability of the platform in mind, we chose a platform-as-a-service (PaaS) model for the core application (Azure Web App on Linux) and a managed database, Azure Database for PostgreSQL. We picked Flexible Server because it can be integrated into a virtual network with private DNS, so the application connects to its database privately, and because, as the name suggests, it provides granular and flexible database configuration.

We also wanted to make sure that the container image we consume in our application is free of known vulnerabilities. On top of the existing scans provided by the Snyk integration with Sonar's Docker Hub repository, we implemented an Azure DevOps pipeline that is triggered via REST API each time a new image is pushed to the vendor's Docker Hub repository. The pipeline leverages Microsoft Defender for Cloud and fails when vulnerabilities of a defined severity are found. Otherwise, the new version of the SonarQube image ends up in our private Azure Container Registry; we then test it in the development environment and, once all tests pass, roll it out to production.
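The private connectivity described above relies on a virtual network with delegated subnets and a private DNS zone. A minimal sketch of these pieces is shown below. The subnet names `appNet` and `dbNet` match the ones referenced later in the template, while the address ranges, default names and API versions are assumptions made for this example.

```bicep
param location string = resourceGroup().location

// Hypothetical default names; adjust to your naming convention.
param virtualNetworkName string = 'sonarqube-vnet'
param privateDnsZoneName string = 'sonarqube.private.postgres.database.azure.com'

resource virtualNetwork 'Microsoft.Network/virtualNetworks@2021-05-01' = {
  name: virtualNetworkName
  location: location
  properties: {
    addressSpace: {
      addressPrefixes: [
        '10.0.0.0/16'
      ]
    }
    subnets: [
      {
        // Subnet used by the web application for outbound VNet integration.
        name: 'appNet'
        properties: {
          addressPrefix: '10.0.1.0/24'
          delegations: [
            {
              name: 'appServiceDelegation'
              properties: {
                serviceName: 'Microsoft.Web/serverFarms'
              }
            }
          ]
        }
      }
      {
        // Subnet dedicated to PostgreSQL Flexible Server.
        name: 'dbNet'
        properties: {
          addressPrefix: '10.0.2.0/24'
          delegations: [
            {
              name: 'postgresDelegation'
              properties: {
                serviceName: 'Microsoft.DBforPostgreSQL/flexibleServers'
              }
            }
          ]
        }
      }
    ]
  }
}

resource privateDNSZone 'Microsoft.Network/privateDnsZones@2020-06-01' = {
  name: privateDnsZoneName
  location: 'global'
}

// Links the zone to the VNet so the application can resolve the database name.
resource privateDNSZoneLink 'Microsoft.Network/privateDnsZones/virtualNetworkLinks@2020-06-01' = {
  parent: privateDNSZone
  name: '${virtualNetworkName}-link'
  location: 'global'
  properties: {
    registrationEnabled: false
    virtualNetwork: {
      id: virtualNetwork.id
    }
  }
}
```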

We also enabled single sign-on (SAML) so that developers and product owners can access scan results, project configuration, quality profiles and findings management.

We made the solution cost effective by picking less expensive tiers and SKUs for cloud resources in the development environment.

The Bicep template has been published to Azure Quickstart Templates so other engineers can reuse this experience. The template contains several common patterns that anyone can use as inspiration, but it does not include some of the advanced features that we implemented in the customer’s environment. Let’s take a closer look at some of them.

Managed Identity with Azure Bicep

As reflected in the diagram above, the image is pulled from the vendor's registry by a dedicated pipeline that pushes it to Azure Container Registry (ACR). While there are several authentication options for pulling container images in Azure, we wanted to follow best practices and picked the most secure one: a managed identity with least-privilege permissions (the built-in AcrPull role). Let’s put it in code:

    resource managedIdentity 'Microsoft.ManagedIdentity/userAssignedIdentities@2018-11-30' = {
      name: miName
      location: location
    }

    resource acrPullRoleDefinition 'Microsoft.Authorization/roleDefinitions@2018-01-01-preview' existing = {
      scope: subscription()
      // This is the AcrPull role, which is used to pull images from ACR. See https://docs.microsoft.com/en-us/azure/role-based-access-control/built-in-roles
      name: '7f951dda-4ed3-4680-a7ca-43fe172d538d'
    }

    resource containerRegistry 'Microsoft.ContainerRegistry/registries@2021-06-01-preview' = {
      name: acrName
      location: location
      sku: {
        name: 'Basic'
      }
      properties: {
        adminUserEnabled: false
      }
    }

    resource webApplication 'Microsoft.Web/sites@2021-03-01' = {
      name: siteName
      location: location
      identity: {
        type: 'UserAssigned'
        userAssignedIdentities: {
          '${managedIdentity.id}': {}
        }
      }
      properties: {
        virtualNetworkSubnetId: resourceId('Microsoft.Network/virtualNetworks/subnets', virtualNetwork.name, 'appNet')
        serverFarmId: appServicePlan.id
        httpsOnly: true
        siteConfig: {
          acrUseManagedIdentityCreds: true
          acrUserManagedIdentityID: managedIdentity.properties.clientId
          minTlsVersion: '1.2'
          ftpsState: 'Disabled'
          linuxFxVersion: 'DOCKER|${containerRegistry.properties.loginServer}/sonarqube'
          appSettings: [
            {
              name: 'WEBSITES_PORT'
              value: '9000'
            }
            {
              name: 'SONAR_ES_BOOTSTRAP_CHECKS_DISABLE'
              value: 'true'
            }
            {
              name: 'SONAR_JDBC_URL'
              value: 'jdbc:postgresql://${postgresFlexibleServers.properties.fullyQualifiedDomainName}:5432/postgres'
            }
            {
              name: 'SONAR_JDBC_USERNAME'
              value: administratorLogin
            }
            {
              name: 'SONAR_JDBC_PASSWORD'
              value: administratorLoginPassword
            }
          ]
        }
      }
    }

    resource roleAssignment 'Microsoft.Authorization/roleAssignments@2020-04-01-preview' = {
      name: guid(managedIdentity.id, acrName, acrPullRoleDefinition.id)
      properties: {
        roleDefinitionId: acrPullRoleDefinition.id
        principalId: managedIdentity.properties.principalId
        principalType: 'ServicePrincipal'
      }
    }

The code above is a condensed Bicep template containing the following resources:

- Azure managed identity

- Reference to the built-in AcrPull role definition

- Azure Container Registry with the Basic SKU

- Web application with references to the managed identity

- Role assignment
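A role assignment declared without a `scope` property applies at the scope of the deployment, which for a resource group deployment is the whole resource group. If you want to narrow AcrPull to the registry itself, a sketch along the following lines could be used. It assumes the registry and the identity already exist and reuses the parameter names from the template above; the symbolic name `acrPullOnRegistry` is hypothetical.

```bicep
param acrName string
param miName string

// References to resources that are assumed to exist already.
resource containerRegistry 'Microsoft.ContainerRegistry/registries@2021-06-01-preview' existing = {
  name: acrName
}

resource managedIdentity 'Microsoft.ManagedIdentity/userAssignedIdentities@2018-11-30' existing = {
  name: miName
}

resource acrPullRoleDefinition 'Microsoft.Authorization/roleDefinitions@2018-01-01-preview' existing = {
  scope: subscription()
  name: '7f951dda-4ed3-4680-a7ca-43fe172d538d'
}

resource acrPullOnRegistry 'Microsoft.Authorization/roleAssignments@2020-04-01-preview' = {
  // Deterministic name, so repeated deployments update the same assignment.
  name: guid(containerRegistry.id, managedIdentity.id, acrPullRoleDefinition.id)
  // Limits the assignment to this registry instead of the resource group.
  scope: containerRegistry
  properties: {
    roleDefinitionId: acrPullRoleDefinition.id
    principalId: managedIdentity.properties.principalId
    principalType: 'ServicePrincipal'
  }
}
```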

Credentials management with pipelines and Bicep

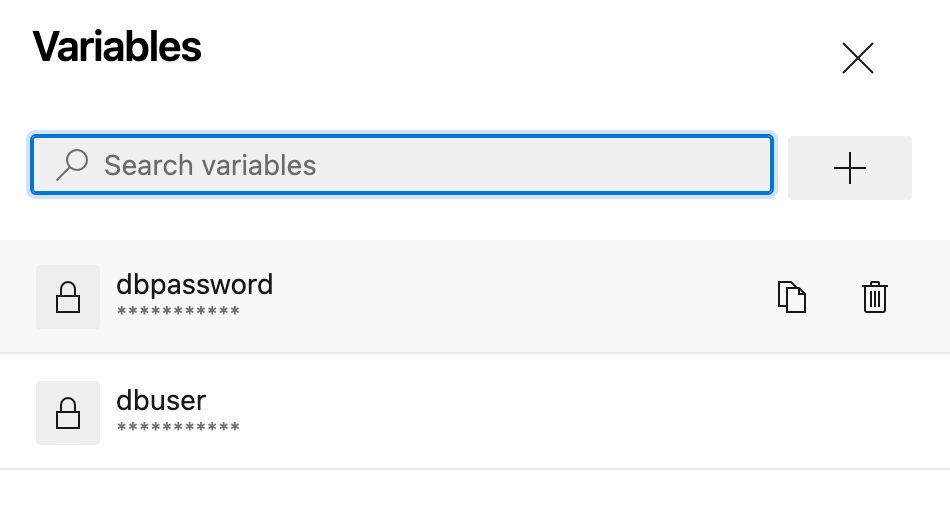

Azure Database for PostgreSQL expects an administrator user name and password at deployment time. One option is to provide them the moment we start the deployment (as secure strings). Another is to reuse existing secrets from Azure Key Vault by extracting them with the getSecret function and passing them to secure string parameters. What we were really looking for, however, was something similar to the random_password resource from Terraform's random provider, because there is no reason to access PostgreSQL directly (exceptions would be troubleshooting or a manual dump and restore, but we did not anticipate these). Based on this issue, that won’t be addressed any time soon. So we ended up passing the credentials from Azure DevOps pipeline variables, picking them up as deployment parameters and using them in two places: (1) stored in Azure Key Vault and (2) used as the PostgreSQL user name and password.

    - task: AzureCLI@2
      inputs:
        azureSubscription: <serviceConnection>
        scriptType: bash
        scriptLocation: inlineScript
        inlineScript: |
          # Values are quoted so special characters in the secrets survive the shell.
          az deployment group create \
            --name <deploymentName> \
            -g <resourceGroupName> \
            --template-file main.bicep \
            --parameters main.parameters.dev.json \
            --parameters administratorLogin='$(dbuser)' administratorLoginPassword='$(dbpassword)'

In this case, dbuser and dbpassword are set as variables in the Azure DevOps GUI:

Azure DevOps pipeline variables

The actual Bicep is as follows:

    @secure()
    param administratorLogin string

    @secure()
    param administratorLoginPassword string

    resource postgresFlexibleServers 'Microsoft.DBforPostgreSQL/flexibleServers@2021-06-01' = {
      name: postgresFlexibleServersName
      location: location
      sku: {
        name: postgresFlexibleServersSkuName
        tier: postgresFlexibleServersSkuTier
      }
      properties: {
        administratorLogin: administratorLogin
        administratorLoginPassword: administratorLoginPassword
        createMode: createMode
        network: {
          delegatedSubnetResourceId: resourceId('Microsoft.Network/virtualNetworks/subnets', virtualNetwork.name, 'dbNet')
          privateDnsZoneArmResourceId: privateDNSZone.id
        }
        storage: {
          storageSizeGB: 32
        }
        backup: {
          backupRetentionDays: 7
          geoRedundantBackup: 'Disabled'
        }
        highAvailability: {
          mode: 'Disabled'
        }
        maintenanceWindow: {
          customWindow: 'Disabled'
          dayOfWeek: 0
          startHour: 0
          startMinute: 0
        }
        version: postgresFlexibleServersversion
      }
      // The DNS zone must be linked to the VNet before the server is created.
      dependsOn: [
        privateDNSZoneLink
      ]
    }

    resource keyVault 'Microsoft.KeyVault/vaults@2021-10-01' = {
      name: keyvaultName
      location: location
      tags: tags
      properties: {
        enabledForDeployment: true
        enabledForTemplateDeployment: true
        enabledForDiskEncryption: true
        tenantId: tenantId
        sku: {
          name: keyvaultSkuName
          family: keyvaultSkuFamily
        }
        accessPolicies: [for objectId in objectIds: {
          tenantId: tenantId
          objectId: objectId
          permissions: {
            secrets: [
              'all'
            ]
          }
        }]
      }
    }

    resource user 'Microsoft.KeyVault/vaults/secrets@2021-11-01-preview' = {
      name: 'dbuser'
      parent: keyVault
      properties: {
        attributes: {
          enabled: true
          // exp (expiry) and nbf (not before) are expressed as Unix epoch seconds.
          exp: 30
          nbf: 30
        }
        value: administratorLogin
      }
    }

    resource password 'Microsoft.KeyVault/vaults/secrets@2021-11-01-preview' = {
      name: 'dbpassword'
      parent: keyVault
      properties: {
        attributes: {
          enabled: true
          // exp (expiry) and nbf (not before) are expressed as Unix epoch seconds.
          exp: 30
          nbf: 30
        }
        value: administratorLoginPassword
      }
    }

The code above is a condensed Bicep template containing the following resources:

- Azure Database for PostgreSQL - Flexible Server

- Azure Key Vault and secret resources
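For comparison, the Key Vault alternative mentioned at the start of this section could be sketched as follows. This is not what we deployed: it assumes the secrets `dbuser` and `dbpassword` already exist in a vault that has `enabledForTemplateDeployment` set, and the module path `./modules/postgres.bicep` is hypothetical. Note that `getSecret` can only be used to pass a value to a module parameter decorated with `@secure()`.

```bicep
param location string = resourceGroup().location

@description('Name of an existing Key Vault that already holds the secrets.')
param keyVaultName string

resource keyVault 'Microsoft.KeyVault/vaults@2021-10-01' existing = {
  name: keyVaultName
}

// Hypothetical module that declares administratorLogin and
// administratorLoginPassword as @secure() string parameters.
module database './modules/postgres.bicep' = {
  name: 'postgres-deployment'
  params: {
    location: location
    administratorLogin: keyVault.getSecret('dbuser')
    administratorLoginPassword: keyVault.getSecret('dbpassword')
  }
}
```

With this approach, the secret values never appear in the pipeline definition or in parameter files.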

Environments segregation

Typically you want at least two separate environments: development, where you run all sorts of experiments including testing new features, patches and bug fixes, and production, which you offer to end users.

There are several techniques for environment segregation, and they differ from team to team, tool to tool and organization to organization. For Azure Bicep, we chose to use modules (the entire SonarQube deployment is a single module) and feed each deployment its own set of parameters depending on the environment. Here is the code:

Parameters:

    {
      "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#",
      "contentVersion": "1.0.0.0",
      "parameters": {
        "environmentConfigMap": {
          "value": {
            "Production": {
              "postgres": {
                "sku": {
                  "name": "Standard_D4s_v3",
                  "tier": "GeneralPurpose"
                }
              },
              "appServicePlan": {
                "sku": {
                  "name": "B3",
                  "capacity": 3
                }
              }
            },
            "NonProduction": {
              "postgres": {
                "sku": {
                  "name": "Standard_B1ms",
                  "tier": "Burstable"
                }
              },
              "appServicePlan": {
                "sku": {
                  "name": "B1",
                  "capacity": 1
                }
              }
            }
          }
        }
      }
    }
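On the Bicep side, a configuration map like this is usually indexed by an environment type parameter. The sketch below shows one way to do it. The parameter `environmentType` and the resource names are assumptions, and only the App Service plan is shown; the PostgreSQL SKU can be selected the same way.

```bicep
@allowed([
  'Production'
  'NonProduction'
])
@description('Selects which block of environmentConfigMap to use (hypothetical parameter).')
param environmentType string

@description('Per-environment settings, supplied by the parameters file.')
param environmentConfigMap object

param location string = resourceGroup().location
param appServicePlanName string

// Pick the SKU that belongs to the selected environment.
var appServicePlanSku = environmentConfigMap[environmentType].appServicePlan.sku

resource appServicePlan 'Microsoft.Web/serverfarms@2021-03-01' = {
  name: appServicePlanName
  location: location
  kind: 'linux'
  sku: {
    name: appServicePlanSku.name
    capacity: appServicePlanSku.capacity
  }
  properties: {
    // Required for Linux plans.
    reserved: true
  }
}
```

The pipeline then only has to pass `environmentType=Production` or `environmentType=NonProduction`, while the sizing decisions stay in one reviewed file.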

Securing the security tool

While Azure Web App offers a nice endpoint (an FQDN) that anyone can access directly, we decided to put it behind a load balancer with a Web Application Firewall (WAF), using Azure Front Door with managed WAF policies, so that anyone or anything accessing our instance is evaluated against the OWASP Top Ten and other built-in rules. Since SonarQube is a Java-based application, we were specifically interested in the rule sets that protect against Java attacks.

    resource frontDoor 'Microsoft.Network/frontDoors@2020-05-01' = {
      name: frontDoorName
      location: 'global'
      properties: {
        enabledState: 'Enabled'
        frontendEndpoints: [
          {
            name: frontEndEndpointName
            properties: {
              hostName: '${frontDoorName}.azurefd.net'
              sessionAffinityEnabledState: 'Disabled'
              webApplicationFirewallPolicyLink: {
                id: wafPolicy.id
              }
            }
          }
        ]
        loadBalancingSettings: [
          {
            name: loadBalancingSettingsName
            properties: {
              sampleSize: 4
              successfulSamplesRequired: 2
            }
          }
        ]
        healthProbeSettings: [
          {
            name: healthProbeSettingsName
            properties: {
              path: '/'
              protocol: 'Https'
              intervalInSeconds: 120
            }
          }
        ]
        backendPools: [
          {
            name: backendPoolName
            properties: {
              backends: [
                {
                  address: webApplication.properties.defaultHostName
                  backendHostHeader: webApplication.properties.defaultHostName
                  httpPort: 80
                  httpsPort: 443
                  weight: 50
                  priority: 1
                  enabledState: 'Enabled'
                }
              ]
              loadBalancingSettings: {
                id: resourceId('Microsoft.Network/frontDoors/loadBalancingSettings', frontDoorName, loadBalancingSettingsName)
              }
              healthProbeSettings: {
                id: resourceId('Microsoft.Network/frontDoors/healthProbeSettings', frontDoorName, healthProbeSettingsName)
              }
            }
          }
        ]
        routingRules: [
          {
            name: routingRuleName
            properties: {
              frontendEndpoints: [
                {
                  id: resourceId('Microsoft.Network/frontDoors/frontEndEndpoints', frontDoorName, frontEndEndpointName)
                }
              ]
              acceptedProtocols: [
                'Https'
              ]
              patternsToMatch: [
                '/*'
              ]
              routeConfiguration: {
                '@odata.type': '#Microsoft.Azure.FrontDoor.Models.FrontdoorForwardingConfiguration'
                forwardingProtocol: 'MatchRequest'
                backendPool: {
                  id: resourceId('Microsoft.Network/frontDoors/backEndPools', frontDoorName, backendPoolName)
                }
              }
              enabledState: 'Enabled'
            }
          }
        ]
      }
    }

    resource wafPolicy 'Microsoft.Network/FrontDoorWebApplicationFirewallPolicies@2020-11-01' = {
      name: wafPolicyName
      location: 'global'
      properties: {
        policySettings: {
          mode: 'Prevention'
          enabledState: 'Enabled'
        }
        managedRules: {
          managedRuleSets: [
            {
              ruleSetType: 'DefaultRuleSet'
              ruleSetVersion: '1.0'
            }
          ]
        }
      }
    }

The code above contains an Azure Front Door example with a WAF policy.
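Front Door only protects traffic that actually passes through it, and the web application's default host name remains reachable unless inbound access is restricted. One common way to close that gap, shown here as a sketch rather than as part of the published template, is an App Service access restriction that only admits the `AzureFrontDoor.Backend` service tag and checks the `x-azure-fdid` header against this specific Front Door instance. The rule name and priority are assumptions.

```bicep
param siteName string
param frontDoorName string

// References to the resources deployed by the template above.
resource webApplication 'Microsoft.Web/sites@2021-03-01' existing = {
  name: siteName
}

resource frontDoor 'Microsoft.Network/frontDoors@2020-05-01' existing = {
  name: frontDoorName
}

resource webApplicationConfig 'Microsoft.Web/sites/config@2021-03-01' = {
  parent: webApplication
  name: 'web'
  properties: {
    ipSecurityRestrictions: [
      {
        name: 'AllowFrontDoorOnly'
        priority: 100
        action: 'Allow'
        // Matches traffic that originates from Azure Front Door backends.
        tag: 'ServiceTag'
        ipAddress: 'AzureFrontDoor.Backend'
        // Accepts only requests forwarded by this Front Door instance.
        headers: {
          'x-azure-fdid': [
            frontDoor.properties.frontdoorId
          ]
        }
      }
    ]
  }
}
```

Once an allow rule exists, App Service denies all other inbound traffic by default, so scanners and users have to use the Front Door host name.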

Integration with DevOps platform

DevOps way of working with Sonar's solutions

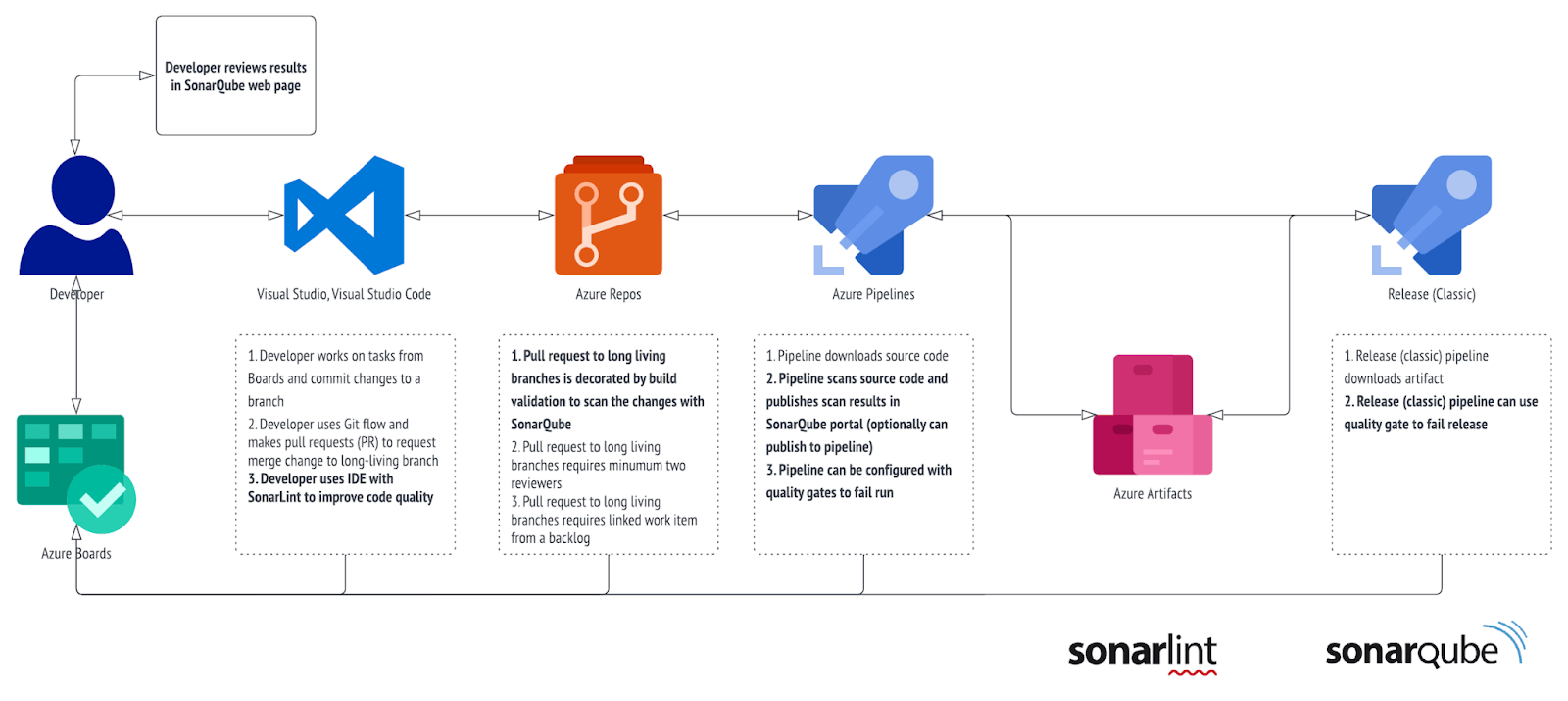

So, we’ve built a self-hosted SAST service, and now it’s time to see how it can help developers. The end goal for us was to implement proper quality gates in the continuous integration process (e.g. we wanted to add optional build validation for pull requests targeting the most important branches). As a first step, the Community Edition (which, by the way, is easy to upgrade to any commercial license) allowed us to offer code base scans from the build agent using the Marketplace extension. Developers can run a scan and get insights into their code base from SonarQube’s dashboards and detailed reports (they just need to log in with their company identity, since we also implemented SAML integration). We’ll skip the configuration and result review since Microsoft has this workshop that you can follow along.

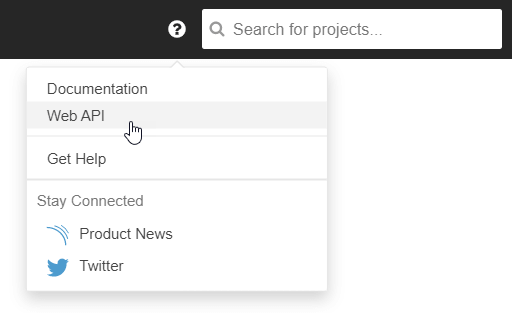

Another interesting part of this project is onboarding and adoption. We automated onboarding to SonarQube using its API (documentation is available in SonarQube's GUI) and the Azure DevOps API:

Web API in SonarQube

There are several endpoints we picked and used for each team:

- Project creation in SonarQube

- Security group creation (the group object in SonarQube has a name attribute, which we used to store the GUID of the Azure AD group so we can map it properly based on incoming SAML claims)

- Security group assignment to the project

- Configuration of the DevOps integration (required: a personal access token, PAT)

- Creation of a service connection in Azure DevOps (required: URL and token from SonarQube)

- Creation of a sample pipeline definition for the scan (with the proper project name and service connection)

How do you enforce this solution in the most efficient way? As I mentioned at the beginning, we encourage a shift-left approach, security champions programmes and security by design. Adding a user story with security tasks to each sprint does not hurt and helps establish a security-first mindset among engineers. Giving teams tools for better quality and security and integrating them as early as possible is another good practice (e.g. a production-ready branch is too late for introducing SAST; it should happen earlier, perhaps on the development branch). So is giving developers access and training them to use the tools for better security on their own machines (e.g. linting and self-scanning that reuse the same rules from SonarQube).

Solution cost

| Service category | Service type | Region | Description | Estimated monthly cost | Estimated upfront cost |
|---|---|---|---|---|---|
| Compute | App Service | West Europe | Basic Tier; 1 B3 (4 Core(s), 7 GB RAM, 10 GB Storage) x 730 Hours; Linux OS; 0 SNI SSL Connections; 0 IP SSL Connections | € 51,21 | € 0,00 |
| Databases | Azure Database for PostgreSQL | West Europe | Flexible Server Deployment, Burstable Tier, 1 B2S (2 vCores) x 730 Hours, 5 GiB Storage, 0 GiB Additional Backup storage - LRS redundancy | € 49,62 | € 0,00 |
| Containers | Azure Container Registry | West Europe | Basic Tier, 1 registries x 30 days, 0 GB Extra Storage, Container Build - 1 CPUs x 1 Seconds - Inter Region transfer type, 5 GB outbound data transfer from West Europe to East Asia | € 4,94 | € 0,00 |
| Networking | Azure DNS | | Zone 1, Private; 1 hosted DNS zone, 0 DNS queries | € 0,49 | € 0,00 |
| Total | | | | € 106,26 | € 0,00 |

Example of calculation (SonarQube on managed Azure services)

This calculation shows the monthly cost of the development environment; production uses slightly different tiers and costs a bit more. We chose the tiers based on system requirements and predicted load.

Conclusion

Introducing security into DevOps early in the product life cycle might be challenging and can make developers unhappy because of the extra steps in their daily routine and the need to reconsider implementations. However, it pays back immediately by reducing the risk of data breaches, increasing solution availability and resiliency, and securing the process end to end. Sonar, as part of the DevSecOps puzzle, brings almost 3000 rules of different types (bug, vulnerability, code smell, security hotspot) out of the box, and embedding it into a continuous integration process brings the developer experience to a completely new level.

In this article, we learned how to provision infrastructure with Azure Bicep for a self-hosted static application security testing tool in a cost-effective way, following Sonar's recommendations and best practices. We also learned how to integrate it into the Azure DevOps way of working and briefly looked at the onboarding process.
