Computer Vision with Edge Computing for Low-latency Real-time Decision Making

Computer vision is a branch of artificial intelligence (AI) that lets computers and other systems get useful information from digital images, videos and other visual inputs and then act on that information or make suggestions based on it. AI lets computers think, while computer vision lets them see, observe and understand what they see [1].

Deep learning is an effective approach to many computer vision tasks, such as image classification and object detection. Both training and inference are computation-intensive in their own ways. On the training side, feeding large volumes of video and images to a deep neural network (DNN) is demanding even for a single GPU, and several GPUs may be needed to finish training in the desired time. On the inference side, scaling the system while keeping latency low can make real-time decision making a challenge.

Computer Vision with Edge Computing

In computer vision, sending video data to the cloud for inference adds network delay from queuing and propagation, which can make it impossible to meet the strict end-to-end latency requirements of real-time applications [3]. Even at a few frames per second, the round trip includes sending the data, queueing, storing, processing, and returning the results, and the accumulated latency can violate real-time decision-making requirements.
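As a rough illustration of how these stages add up, here is a minimal sketch that sums per-stage delays and compares them with a deadline. The stage names follow the list above; every number, the 50 ms deadline, and the helper names `end_to_end_ms` and `meets_deadline` are hypothetical and chosen only for the example.

```python
from typing import Dict


def end_to_end_ms(stages: Dict[str, float]) -> float:
    """Total latency is the sum of all stages a frame passes through."""
    return sum(stages.values())


def meets_deadline(stages: Dict[str, float], deadline_ms: float) -> bool:
    """True if the frame's result arrives within the real-time deadline."""
    return end_to_end_ms(stages) <= deadline_ms


# Hypothetical per-frame delays in milliseconds (not measurements).
cloud_path = {
    "upload": 40.0,
    "queueing": 15.0,
    "storage": 5.0,
    "inference": 10.0,
    "return_result": 5.0,
}
edge_path = {"inference": 35.0}  # slower hardware, but no network hops

DEADLINE_MS = 50.0  # assumed application requirement

print(end_to_end_ms(cloud_path), meets_deadline(cloud_path, DEADLINE_MS))
print(end_to_end_ms(edge_path), meets_deadline(edge_path, DEADLINE_MS))
```

In this made-up scenario the cloud path misses the deadline even though its inference step is faster, because the network stages dominate the total.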

Scalability is another factor that favors edge computing for computer vision workloads: the uplink to a cloud server can become congested when numerous cameras upload substantial amounts of video [2].
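The congestion argument is simple arithmetic, sketched below. The bitrate per camera, the uplink capacity, and the helper names `uplink_utilization` and `max_cameras` are assumptions made for illustration, not figures from the cited review.

```python
def uplink_utilization(num_cameras: int, mbps_per_camera: float,
                       uplink_mbps: float) -> float:
    """Fraction of the uplink consumed if every camera streams to the cloud."""
    return num_cameras * mbps_per_camera / uplink_mbps


def max_cameras(mbps_per_camera: float, uplink_mbps: float) -> int:
    """Largest number of cameras the uplink can carry without saturating."""
    return int(uplink_mbps // mbps_per_camera)


# Hypothetical site: 40 cameras at 4 Mbps each on a 100 Mbps uplink.
utilization = uplink_utilization(40, 4.0, 100.0)
print(f"utilization: {utilization:.0%}")  # above 100% means congestion
print("cameras supported:", max_cameras(4.0, 100.0))
```

Running inference at the edge and uploading only results or selected frames reduces the per-camera bitrate, which is what lets the same uplink serve more cameras.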

Additionally, sending camera data to the cloud raises privacy issues, particularly if the video frames contain private documents or sensitive information, which is another motivation to perform computing at the edge.

DL Inference with Edge Computing

In general, there are three ways to use edge computing for deep learning (DL) inference. The choice among them is based on empirical measurements of the tradeoffs between edge device energy consumption, computation power, input size, model size, bandwidth, and maintenance cost [2,3].

- Full model offloading: the entire DNN computation is offloaded to the edge device. Since inference, including preprocessing, is performed on the edge device, the results are immediately available for any decision-making process.

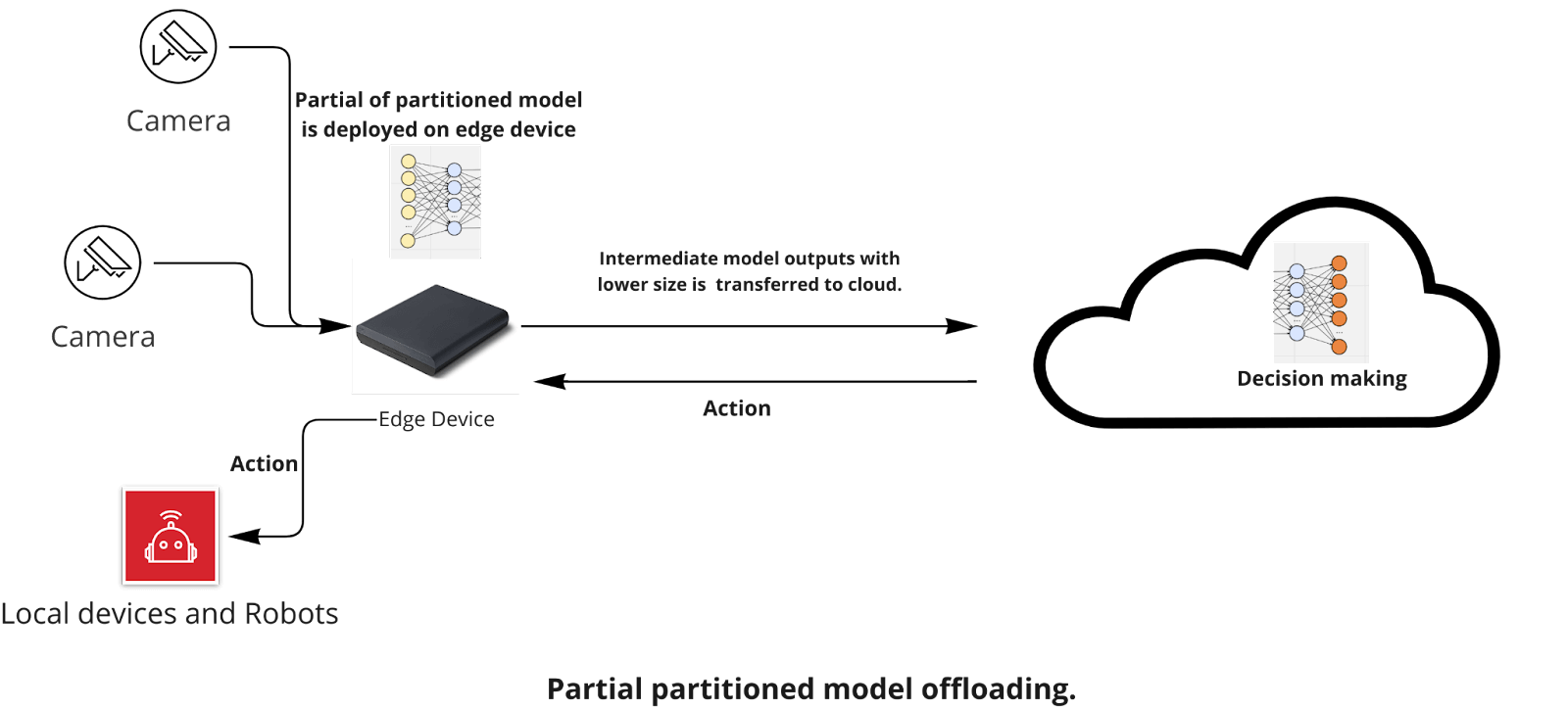

- Partial (partitioned) model offloading: only part of the partitioned DNN computation is offloaded.

Model partitioning is based on the premise that once the DNN model's first layers have been computed, the intermediate outputs are relatively small, making them faster to send across the network to the cloud than the raw data [5]. In such approaches, some layers are computed on the edge device and the rest are computed in the cloud. This can potentially reduce latency by leveraging compute cycles on other devices. However, sending the intermediate results to the cloud adds its own latency to the end-to-end decision-making process [5].

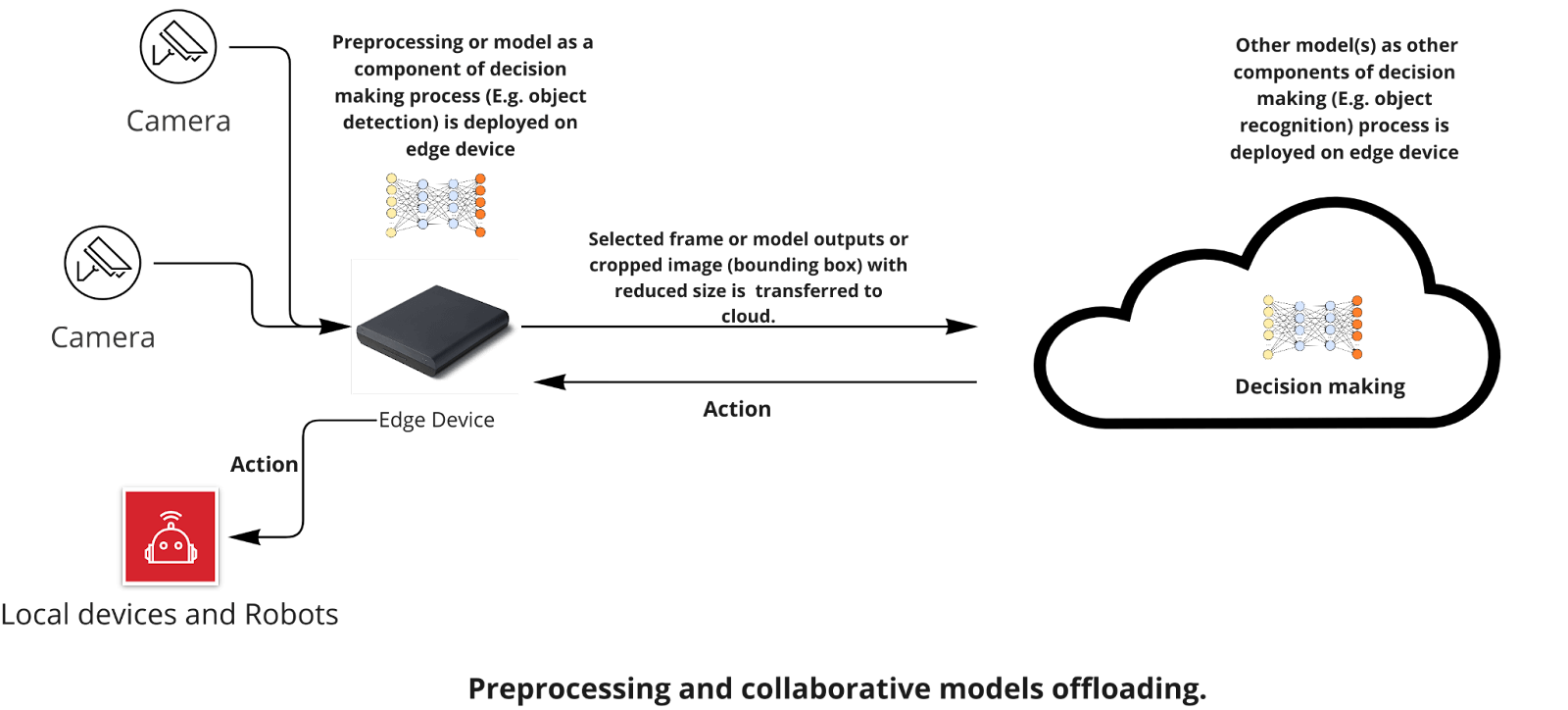
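To make the partitioning tradeoff concrete, the following is a minimal sketch of how a split point could be chosen by exhaustive search, assuming per-layer timings and output sizes have already been measured. The `Layer` fields, the `best_split` helper, and all numbers are hypothetical illustrations, not values or methods taken from the cited works.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Layer:
    edge_ms: float    # measured time to run this layer on the edge device
    cloud_ms: float   # measured time to run this layer in the cloud
    output_kb: float  # size of this layer's output


def best_split(layers: List[Layer], input_kb: float,
               uplink_kb_per_ms: float) -> Tuple[int, float]:
    """Return (k, latency_ms): layers[:k] run on the edge, layers[k:] in the cloud.

    k == 0 sends the raw input to the cloud; k == len(layers) keeps
    everything on the edge. Returning the final result to the device
    is ignored here for simplicity.
    """
    best_k, best_latency = 0, float("inf")
    for k in range(len(layers) + 1):
        edge = sum(layer.edge_ms for layer in layers[:k])
        cloud = sum(layer.cloud_ms for layer in layers[k:])
        if k == len(layers):
            transfer = 0.0  # nothing is uploaded
        else:
            payload_kb = input_kb if k == 0 else layers[k - 1].output_kb
            transfer = payload_kb / uplink_kb_per_ms
        total = edge + transfer + cloud
        if total < best_latency:
            best_k, best_latency = k, total
    return best_k, best_latency


# Hypothetical three-layer model with shrinking intermediate outputs.
model = [Layer(4, 1, 200), Layer(6, 1, 50), Layer(30, 3, 1)]
print(best_split(model, input_kb=600, uplink_kb_per_ms=10))  # (2, 18.0)
```

With these made-up numbers, running the first two layers on the edge wins: the 50 KB intermediate output is cheap to upload, while the expensive last layer runs faster in the cloud. A slower uplink or a faster edge device would shift the best split toward the edge.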

- Preprocessing and collaborative model offloading:

In this approach, different components of a computer vision chain are distributed between the edge and the cloud. When several models work together, some can run on the edge while others remain in the cloud. For example, in an object recognition task, the edge model can first decide whether the current frame contains any object at all and send data to the cloud only if it does; the actual object recognition on the cropped and resized data is then carried out in the cloud. The collaborative models can be divided between edge and cloud based on device computing power and application constraints.
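The object recognition example above can be sketched as a small gating loop. This is only an illustration under stated assumptions: frames are plain nested lists, `bright_region` is a toy stand-in for a lightweight edge detector, the cloud recognizer is passed in as an ordinary function, and resizing is omitted. None of these names come from a real library.

```python
from typing import Callable, Iterable, List, Optional, Tuple

Frame = List[List[int]]          # grayscale image as rows of pixel values
Box = Tuple[int, int, int, int]  # (x, y, width, height)


def bright_region(frame: Frame) -> Optional[Box]:
    """Toy edge detector: bounding box of non-zero pixels, or None if empty."""
    ys = [y for y, row in enumerate(frame) if any(row)]
    xs = [x for row in frame for x, value in enumerate(row) if value]
    if not ys:
        return None
    return (min(xs), min(ys), max(xs) - min(xs) + 1, max(ys) - min(ys) + 1)


def crop(frame: Frame, box: Box) -> Frame:
    """Cut the detected region out of the frame before uploading it."""
    x, y, w, h = box
    return [row[x:x + w] for row in frame[y:y + h]]


def process_stream(frames: Iterable[Frame],
                   detect_on_edge: Callable[[Frame], Optional[Box]],
                   recognize_in_cloud: Callable[[Frame], object]) -> list:
    """Run the cheap detector on every frame; call the cloud only on hits."""
    results = []
    for frame in frames:
        box = detect_on_edge(frame)
        if box is None:
            results.append(None)  # nothing detected, so nothing is uploaded
            continue
        results.append(recognize_in_cloud(crop(frame, box)))
    return results


# Hypothetical usage with a stub in place of the cloud model.
empty = [[0, 0, 0], [0, 0, 0]]
with_object = [[0, 0, 0], [0, 5, 7]]
print(process_stream([empty, with_object], bright_region,
                     lambda patch: sum(map(sum, patch))))  # [None, 12]
```

Only the second frame triggers a cloud call, and only the cropped patch is sent, which is where the bandwidth and latency savings of this approach come from.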

Fish Agriculture Automation

As in many other production industries, automation in fish processing has focused on eliminating dangerous, difficult, and repetitive tasks. For example, automating the trimming and sorting of defective fish requires advanced technology. Defects can have natural causes, such as parasites, melanin spots, and disease, or can arise during shipping or in the processing hall. To automate trimming or sorting, a machine needs to identify defects, determine their severity, and decide on trimming, sorting, or treatment actions accordingly. Real-time decision making is crucial, because the appropriate action has to be taken in a fraction of a second.

Weed Detection

Herbicides, mechanized weeding, and manual weeding are some of the conventional techniques that farmers have traditionally used, and every weed control strategy has its difficulties. Fully automated agricultural robots equipped with AI and machine learning can quickly identify weeds and take the necessary action to remove them consistently and sustainably. When a weed is found, the self-driving robot has to decide in real time whether to drop a precise quantity of herbicide directly onto the weed or to use lasers to eliminate it.

Health, Safety and Environment (HSE)

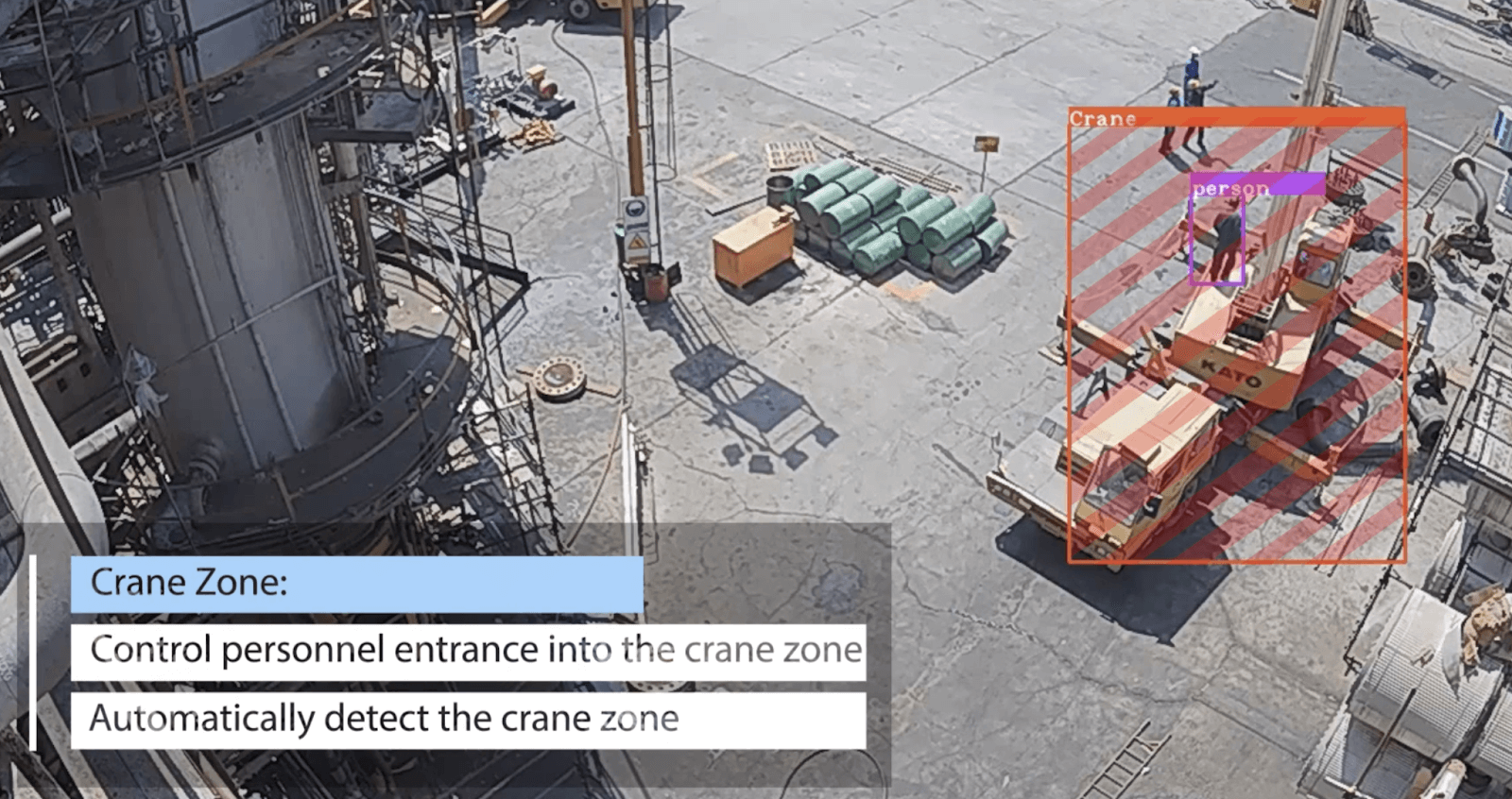

HSE systems and processes create a healthy and safe environment for all stakeholders by preventing incidents such as injuries, illnesses, and environmental harm. AI and computer vision can power systems designed specifically to address health and safety concerns in real-world situations.

Numerous safety scenarios can be considered across industries, including oil and gas, manufacturing, warehousing, and even public places. For example, a model can identify personal protective equipment (PPE), including helmets, shoes, gloves, and industrial goggles, or detect heavy and light vehicles along with cranes and work zones to help ensure safe operation.

AWS Panorama

Edge computing enables you to fully utilize the massive amounts of untapped data generated by connected devices. You can find new business opportunities, improve operational effectiveness, and give your customers faster, more consistent experiences. By analyzing data locally, well-designed edge computing models can help accelerate performance.

However, edge computing has its own difficulties. An effective edge computing model has to account for network security, management complexity, and latency and bandwidth constraints. A good model should enable you to [4]:

- Manage your workloads across all clouds and on any number of devices

- Deploy applications to all edge locations reliably and seamlessly

- Maintain openness and flexibility to adapt to evolving needs

- Operate securely and with confidence

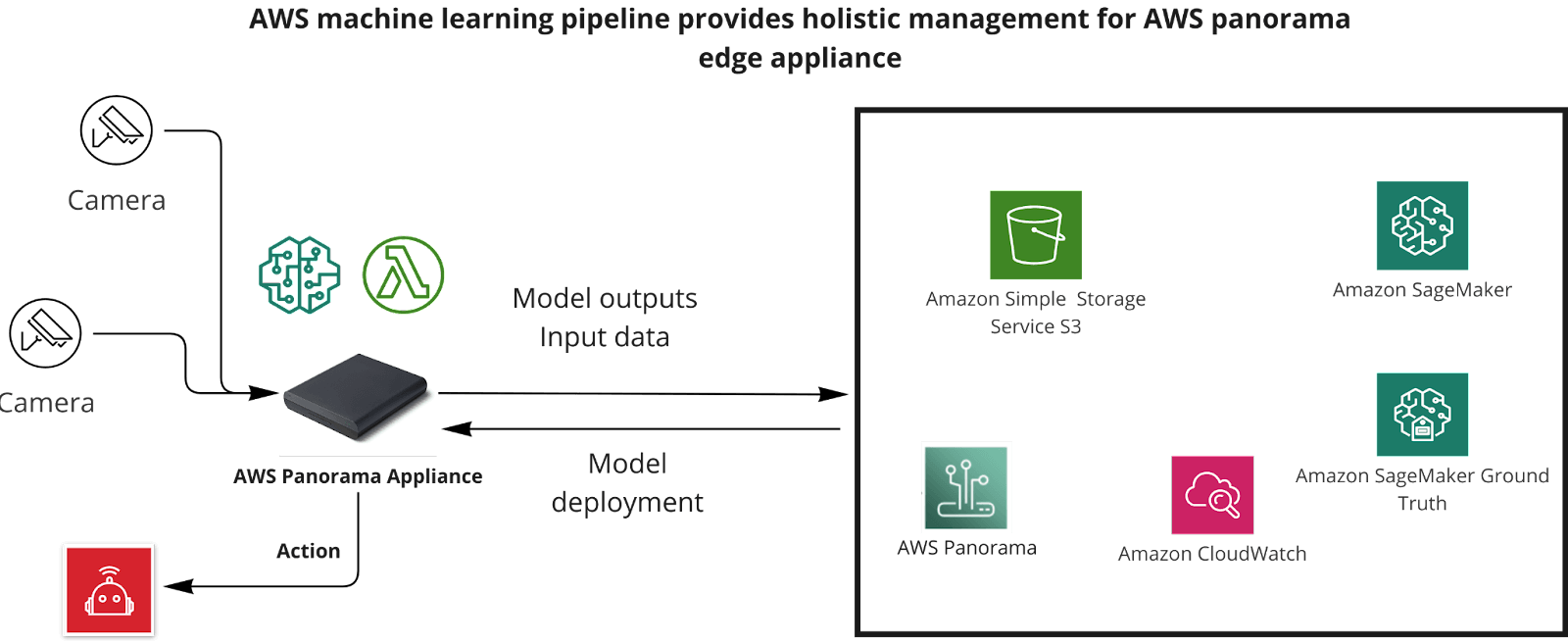

AWS Panorama [7] adds computer vision (CV) to your existing fleet of cameras and integrates seamlessly with your local area network. It makes predictions locally with high accuracy and low latency, and lets you analyze video feeds in milliseconds from a single management interface. Because video feeds are processed at the edge, you can control where your data is stored and operate with limited internet bandwidth. AWS Panorama is fully integrated with other AWS services to provide secure operations and management while extending the machine learning pipeline to the edge reliably and seamlessly.

With AWS services, the model can be trained in the cloud and then deployed on the edge device. Thanks to a full machine learning pipeline on AWS, the model can be retrained through active learning using Amazon SageMaker Ground Truth and monitored for data and model drift even when it is deployed at the edge. Many AWS services, such as IoT Greengrass, the Snow Family, SageMaker, and Panorama, can maximize business value by employing edge computing.

Nordcloud

Our team at Nordcloud has extensive expertise in creating integrated cloud and edge computing solutions that solve a variety of practical challenges in AI-based projects. Cross-skilled teams at Nordcloud, which include data scientists, cloud architects, and developers, offer reliable solutions and implementations for full machine learning pipelines (data preparation, training, model deployment, and model/data drift monitoring). These solutions take into account cloud and edge resources, as well as advanced machine learning issues and methods such as scarce training datasets, active learning, supervised/semi-supervised/unsupervised learning, and model explainability.

References

[1] IBM, “What is computer vision?” https://www.ibm.com/topics/computer-vision

[2] Askar, S., Jameel, Z., & Kareem, S. (2021). “Deep Learning and Fog Computing: A Review.” doi: 10.5281/zenodo.5222647.

[3] Chen, J., & Ran, X. (2019). “Deep Learning With Edge Computing: A Review.” Proceedings of the IEEE, pp. 1-20. doi: 10.1109/JPROC.2019.2921977.

[4] IBM Cloud, “What is edge computing?” https://www.ibm.com/cloud/what-is-edge-computing

[5] Zhao, Z., Barijough, K. M., & Gerstlauer, A. (2018). “DeepThings: Distributed Adaptive Deep Learning Inference on Resource-Constrained IoT Edge Clusters.” IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, vol. 37, no. 11, pp. 2348-2359. doi: 10.1109/TCAD.2018.2858384.

[6] Ren, S., He, K., Girshick, R., & Sun, J. (2015). “Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks.” Advances in Neural Information Processing Systems, 28.

[7] AWS Panorama, “Improve your operations with computer vision at the edge.” https://aws.amazon.com/panorama/
