Look ma, I created a home IoT setup with AWS, Raspberry Pi, Telegram and RuuviTags

Hobby projects are a fun way to try and learn new things. This time, I decided to build a simple IoT setup for home, to collect and visualise information like temperature, humidity and pressure. While learning by doing was definitely one of the reasons I decided to embark on the project, I also had practical goals. For example, I wanted to control the radiators located in the attic: not necessarily by switching the power on and off, but by getting alarms if I’m heating it too much or too little, so that I can tune the power manually. In practice, that saves some money. It is also nice to get reminders from the humidor when the cigars are drying out 😉

I personally learned several things while working on it, and via this blog post, hopefully you can too!

Overview

The idea of the project is relatively simple: place a few RuuviTag sensors around the house, collect the data and push it into the AWS cloud for permanent storage and additional processing. From there, several solutions can be built around the data, visualisation and alarms being only a few of them.

The solution is built using AWS serverless technologies, which keep the running expenses low while requiring almost no maintenance. The following code samples are only snippets from the complete solution, but I’ve tried to collect the relevant parts.

Collect data with RuuviTags and Raspberry Pi

The tags broadcast their data (humidity, temperature, pressure etc.) periodically via Bluetooth LE. Because Ruuvi is an open source friendly product, there are already several ready-made solutions and libraries to utilise. I went with node-ruuvitag, which is a Node.js module. (Note: I found that the module works best with Linux and Node 8.x, but you may be successful with other combinations, too.)

The Raspberry Pi runs a small Node.js application that both listens for the incoming messages from the RuuviTags and forwards them to the AWS IoT service. The app communicates with the AWS cloud using the thingShadow client found in the AWS IoT Device SDK module. The application authenticates using X.509 certificates generated by you or AWS IoT Core.

The script runs as a Linux service. While the tags broadcast data every second or so, the app on the Raspberry Pi forwards the data only once every 10 minutes for each tag, which is more than sufficient for the purpose. This is also an easy way to keep processing and storage costs very low in AWS.
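The per-tag throttling can be sketched roughly as follows. This is a Python illustration of the logic only, not the Node.js code running on the Pi; the class name, method name and the injectable clock are assumptions made for the example.

```python
import time


class PerTagThrottle:
    """Let a reading through at most once per interval for each tag."""

    def __init__(self, interval_seconds=600, clock=time.monotonic):
        self.interval_seconds = interval_seconds
        self.clock = clock  # injectable so the logic can be tested without waiting
        self.last_forwarded = {}  # tag id -> time of the last forwarded reading

    def should_forward(self, tag_id):
        now = self.clock()
        last = self.last_forwarded.get(tag_id)
        if last is not None and now - last < self.interval_seconds:
            return False  # too soon for this tag, drop the broadcast
        self.last_forwarded[tag_id] = now
        return True
```

Each incoming broadcast would be passed through `should_forward(tag_id)`, and only the readings that return `True` would be sent on to the cloud. Keeping the state per tag means a chatty tag cannot starve a quiet one.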

When building an IoT or big data solution, one may initially aim for near real-time data transfers and high data resolution, even though the solution built on top of it may not really require them. As an alternative, consider sending the data in batches, for example once an hour: a 10 minute resolution may well be sufficient, and it is also cheaper to run.
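To put rough numbers on this, assume a tag broadcasts exactly once per second (an idealisation of "every second or so"). The message counts per tag per day then work out as below; the function name is only for illustration.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def messages_per_day(interval_seconds):
    """Number of messages sent per day when sending once per interval."""
    return SECONDS_PER_DAY // interval_seconds


every_second = messages_per_day(1)         # forwarding every broadcast
every_ten_minutes = messages_per_day(600)  # one reading per 10 minutes
hourly_batches = messages_per_day(3600)    # one batch per hour, 6 readings each

print(every_second, every_ten_minutes, hourly_batches)  # 86400 144 24
```

Going from every broadcast to one reading per 10 minutes cuts the message count by a factor of 600, and hourly batches reduce the number of transfers by a further factor of 6 without losing any of the 10 minute readings.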

When running the broadcast listening script on the Raspberry Pi, there are a couple of things to consider:

- All the tags may not appear at the first reading: (re)run ruuvi.findTags() every 30 minutes or so to ensure all the tags get collected

- The Raspberry Pi can drop from the WLAN: set up a script to reconnect automatically in case that happens

With these in place, the setup has been working without issues so far.

Process data in AWS using IoT Core and friends

Figure: AWS processing overview

Once the data hits AWS IoT Core, there can be several rules for handling the incoming data. In this case, I set up a lambda to be triggered for each message. AWS IoT also provides a way to do the DynamoDB inserts directly from the messages, but I found it a more versatile and development-friendly approach to put the lambda in between instead.

Figure: AWS IoT Core act rule

DynamoDB works well as permanent storage in this case: the data structure is simple, and the service provides on-demand scalability and billing. Just pay attention when designing the table structure and make sure it fits your use cases, as changes made afterwards may be laborious. For more information about the topic, I recommend watching a talk about Advanced Design Patterns for DynamoDB.

Figure: DynamoDB structure I ended up using
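As a rough illustration of what a lambda between IoT Core and DynamoDB might do, the sketch below shapes one incoming message into a table item. The key names (`tagId` as partition key, `timestamp` as sort key) and the message fields are assumptions for the example, not the exact structure from the figure above. The actual write is left as a comment so the snippet runs without AWS access.

```python
from decimal import Decimal


def build_item(message):
    """Map one incoming sensor message to a DynamoDB-style item."""
    return {
        "tagId": message["tagId"],               # partition key (assumed)
        "timestamp": int(message["timestamp"]),  # sort key, epoch seconds (assumed)
        # boto3 does not accept Python floats for DynamoDB numbers, so the
        # measurements are converted to Decimal via their string form.
        "temperature": Decimal(str(message["temperature"])),
        "humidity": Decimal(str(message["humidity"])),
        "pressure": Decimal(str(message["pressure"])),
    }


# In a real lambda the item would then be written with boto3, for example:
#   table.put_item(Item=build_item(event))
```

With the tag id as partition key and the timestamp as sort key, fetching a time range for a single tag becomes a simple query, which is the access pattern the visualisations need.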

Visualise data with React and Highcharts

Once we have the data stored in a semi-structured format in the AWS cloud, it can be visualised or processed further. I set up a periodic lambda to retrieve the data from DynamoDB and generate CSV files into a public S3 bucket for React clients to pick up. The CSV format was preferred over, for example, JSON to decrease the file size. At some point, I may also try out the Parquet format and see if it suits the purpose even better.
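The size difference between CSV and JSON is easy to check with a small experiment. The sketch below serialises one day of made-up readings (144 rows at 10 minute intervals) both ways; the column names and values are invented for illustration.

```python
import csv
import io
import json


def to_csv(rows, fieldnames):
    """Serialise a list of dicts as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


fields = ["timestamp", "temperature", "humidity", "pressure"]
# One made-up reading every 600 seconds for a day.
rows = [
    {"timestamp": 1700000000 + i * 600, "temperature": 21.5, "humidity": 40.2, "pressure": 1013}
    for i in range(144)
]

csv_text = to_csv(rows, fields)
json_text = json.dumps(rows)
print(len(csv_text), len(json_text))
```

CSV states the column names once in the header, while a JSON array of objects repeats every key on every row, so the CSV output comes out clearly smaller for this kind of tabular data.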

Figure: Overview visualisations for each tag

The React application fetches the CSV file from S3 using a custom hook and passes it to a Highcharts component.

During my professional career, I’ve learnt that data visualisations often cause various challenges due to limitations and/or bugs in the implementation. After using several chart components, I personally prefer Highcharts over other libraries, if possible.

Figure: Snapshot from the tag placed outside

Send notifications with Telegram bots

Visualisations work well for seeing the status and how the values vary over time. However, in case something drastic happens, like the humidor humidity dropping below the preferred level, I’d like to get an immediate notification about it. This can be done, for example, using Telegram bots:

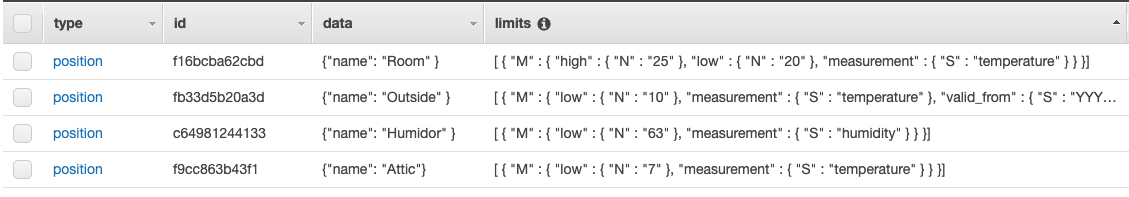

- Define the limits for each tag, for example in a DynamoDB table

- Compare the limits with the actual measurement in a custom lambda whenever data arrives

- If a value exceeds the limit, trigger an SNS message (so that we can subscribe several actions to it)

- Listen to the SNS topic and send a Telegram message to a message group you’re participating in

- Profit!

Figure: Limits in DynamoDB
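The limit check and the Telegram call from the steps above could look roughly like this. It is a minimal sketch: the shape of the limits (`min` and `max` per field) is an assumption rather than the table layout from the figure, the SNS hop in the middle is skipped, and the request is only built, not sent. The `sendMessage` method with `chat_id` and `text` parameters is part of the Telegram Bot API.

```python
import urllib.parse
import urllib.request


def find_violations(measurement, limits):
    """Return human-readable messages for values outside their limits."""
    violations = []
    for field, bounds in limits.items():
        value = measurement.get(field)
        if value is None:
            continue  # this tag did not report the field
        if "min" in bounds and value < bounds["min"]:
            violations.append(f"{field} {value} is below {bounds['min']}")
        if "max" in bounds and value > bounds["max"]:
            violations.append(f"{field} {value} is above {bounds['max']}")
    return violations


def build_telegram_request(bot_token, chat_id, text):
    """Prepare (but do not send) a Telegram Bot API sendMessage request."""
    url = f"https://api.telegram.org/bot{bot_token}/sendMessage"
    body = urllib.parse.urlencode({"chat_id": chat_id, "text": text}).encode()
    return urllib.request.Request(url, data=body, method="POST")
```

For example, `find_violations({"humidity": 60}, {"humidity": {"min": 65, "max": 75}})` returns one message saying the humidity is below 65, which could then be passed as `text` to `build_telegram_request` and sent with `urllib.request.urlopen`.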

Summary

By now, you should have some kind of understanding of how one can combine IoT sensors, AWS services and outputs like web apps and Telegram nicely together using serverless technologies. If you’ve built something similar or taken a very different approach, I’d be happy to hear about it!

Price tag

Building and running your own IoT solution using RuuviTags, Raspberry Pi and AWS Cloud does not require big investments. Here are some approximate expenses from the setup:

- 3-pack of RuuviTags: 90 € (OK, I wish these were a little cheaper so that I could buy more of them, since the product is nice)

- Raspberry Pi with accessories: 50 €

- Energy used by RPi: http://www.pidramble.com/wiki/benchmarks/power-consumption

- Lambda executions: $0.30/month

- SNS notifications: $0.01/month

- S3 storage: $0.01/month

- DynamoDB: $0.01/month

After looking into the numbers, there are several places to optimise as well. For example, some lambdas are executed more often than really needed.
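Adding up the AWS line items listed above gives a feel for the running costs. The calculation uses whole cents to avoid floating point rounding and covers only the four services listed, so anything not itemised there is left out.

```python
# Approximate monthly AWS costs from the list above, in US cents.
monthly_cents = {"Lambda": 30, "SNS": 1, "S3": 1, "DynamoDB": 1}

total_month = sum(monthly_cents.values())
total_year = total_month * 12

print(f"${total_month / 100:.2f}/month, ${total_year / 100:.2f}/year")
# $0.33/month, $3.96/year
```

Lambda executions make up almost all of the total, which is why trimming unnecessary invocations is the obvious place to start optimising.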

Next steps

I’m happy to say this hobby project has reached a level of readiness where it runs smoothly day after day and is valuable to me. As a next step, I’m planning to add some kind of time range selection. As the amount of data increases, it will be interesting to see how the values vary in the long term. It would also be a good exercise to integrate some additional AWS services and to detect drastic changes or communication failures between the device and the cloud when they happen. Either way, I now have a good base to continue from, or to build something totally different next time 🙂

References, credits and derivative work

This project is by no means a snowflake and has been inspired by existing projects and work:

- Node.js module receiving RuuviTag messages: https://www.npmjs.com/package/node-ruuvitag

- Blog post: A Telegram Bot to retrieve data from RuuviTag https://medium.com/@france193/ruuvitag-telegram-bot-48bd03a61f3c

- Illustrations are adapted from icon sets created by https://www.flaticon.com/authors/freepik

For more content follow Juha and Nordcloud Engineering on Medium.
